Human and AI Art

Marisa Tschopp

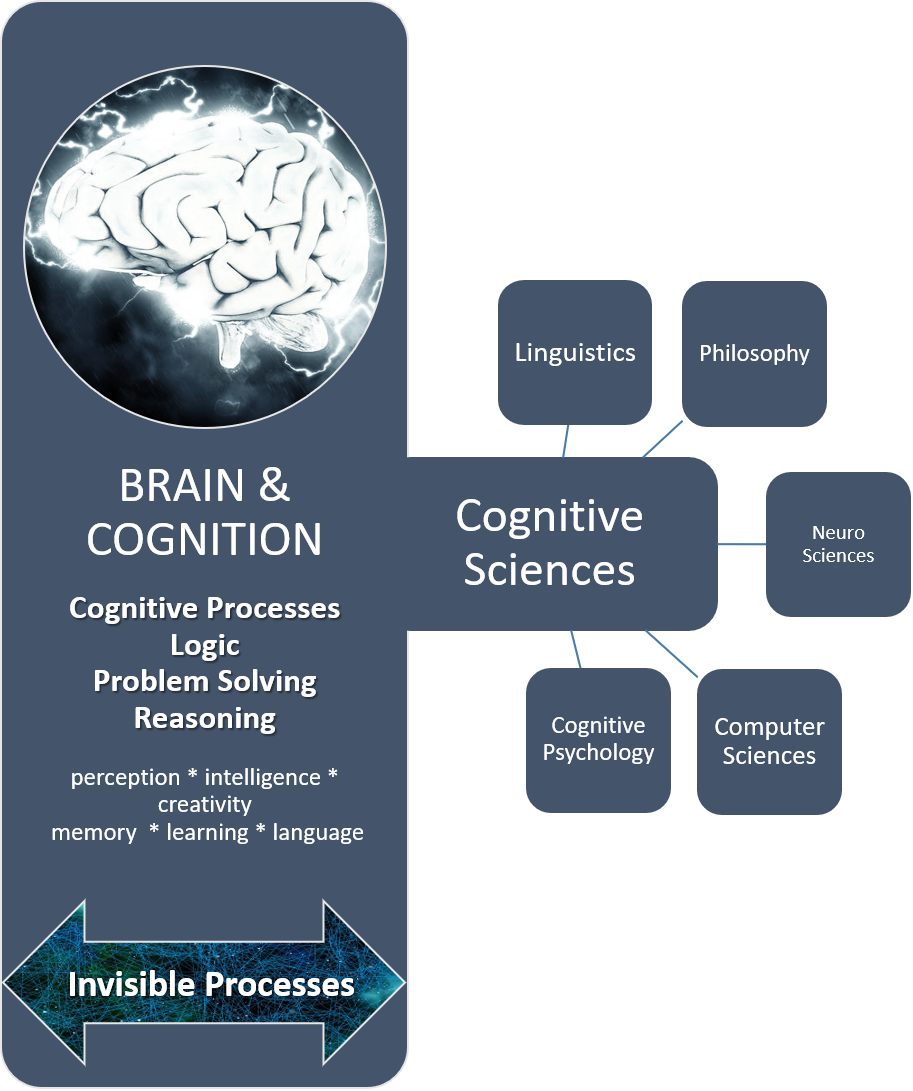

The first chapter of the series The Psychology of Artificial Intelligence is a review dedicated to the very complex topic of cognition (mental activities), and it analyzes the analogies to artificial intelligence. Fascination with the human-machine debate has been a fundamental part of discourse in science, economics, and everyday life: from the steam engine to the calculator to humanoid robots. Cognition comprises numerous sub- and adjacent fields and can be studied from the perspectives of psychology, biology, or health sciences as well as philosophy and neuroscience. This review aims to give an integrative overview of state-of-the-art research on cognition and AI. It touches upon historical developments as well as realistic forecasts, always within the paradigm of science.

Entire study programs devote many years to a single sub-topic of the cognitive sciences, such as research on the brain or on the development and use of speech. Various research questions analyze the interplay of brain, mental processes, behavior, and environment. Looking at the biological, neuronal structure of the nervous system answers, at least in part, the question of what makes the human species unique. The desire to uncover the reasons and abysses of human behavior, and to propose models that predict future behavior, is deeply ingrained in human existence. This is already illustrated in ancient writings by and about great philosophers such as Aristotle, Seneca, or Socrates. While the Middle Ages were characterized by a decline in logical thought due to the strong prevalence of religion, rational thinking and science returned to society in the 16th and 17th centuries, leading into the epoch later referred to as the Enlightenment. Whereas Aristotle achieved his results through subjective methods such as introspection and discussion, scientists of the 21st century have developed objective and systematic methods to generate data and knowledge. Empirical social sciences were born, along with unresolved controversies and disputes over methods.

Despite these controversies, or perhaps because of them, significant progress has been achieved: with imaging techniques such as positron emission tomography (PET), researchers can now gain real (objective) insights into the black box of the human brain, meaning the invisible processes in the nervous system. The basic principle remains the same: there is a stimulus (a car driver sees a red light) and a corresponding reaction (the driver stops the car). With methods such as PET, the cognitive sciences aim to understand which cognitive processes lead to the reaction “to stop”.

Zimbardo & Gerrig (2008) see artificial intelligence as a domain of the cognitive sciences. The different streams of the cognitive sciences, such as neuroscience or linguistics, massively influence AI research, as AI (strong and weak AI alike) aims to mimic these processes, from speech recognition to the ability to engage in complex conversations. Hence, it is logical to research these processes in order to develop technologies that execute these rules and response patterns. Here, IBM has performed powerful pioneering work and heralded a new era of cognitive computing: information processing through machine learning, such as deep learning, a form of AI.

At this point, the lack of precise definitions leads to great uncertainty and confusion. Which terms and concepts will prevail, and in what form, will become clear in the upcoming years. According to the current AI report published by Stanford University, it is precisely because of these ambiguities that AI attracts so much attention worldwide.

While philosophy and linguistics, as parts of the cognitive sciences, have a long history from ancient times until now, the computational sciences, at about 80 years old, are quite young from the perspective of methodological research. But this gap will soon be closed. Computer science will hold an incontestable position in formal education. Quick information processing and exchange, increasing amounts of data, rapid advancement of theories and applications, and the emphasis on interdisciplinary orientation in research institutions will ensure this development. A major downside of this inorganic growth will be information overload, a problem that can only be met through extensive meta-analyses in the upcoming years. Within the next five to ten years, clear definitions and differentiation criteria can be expected. Intelligent programs can help here by managing the massive amount of information in various formats, such as scientific journals and blogs.

Stanford University has been publishing a research report on AI since 2009, which explains the main pillars of AI research:

Further, eight domains of implementation are defined:

The complexity lies in the interrelation of societal and technical research questions, which are deeply interwoven.

One big issue is the “try now, regret later” attitude that comes with the huge complexity of these topics. Societal and ethical implications are difficult by nature, and resources for addressing them are scarce. The societal imperative of growth and fast outcomes leads to the neglect of socially critical subjects and pushes the question of who is responsible into the distant future. Insofar, the omnipresent rise-of-the-robots prophecies are quite useful, as they at least indicate that there might be some risks or dangers involved. One step in a positive direction is the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, which develops ethical standards that intelligent and autonomous systems should adhere to. In formal education, ethics does not yet get the attention it needs, although a significant upswing can be observed nowadays.

But to what end? What should an AI be capable of anyway? AI should help solve problems. For example: How can Facebook, with only 20,000 security employees, ensure the security and integrity of its 1.3 billion users worldwide? This is a huge, momentous problem that could be solved with AI technology.

To develop a solution, it may be quite helpful to investigate the mental processes and components that allow humans to speak or solve problems. Cognitive psychology investigates parallel and serial processes and, along the dimension of attention, differentiates between automated and controlled processes. This review also takes a closer look at problem solving and logical reasoning. A review on linguistics has already been published here. The topics of memory, intelligence, and creativity are also part of cognitive psychology but will be covered in another paper because of their many dimensions.

Which mental processes occur when a car driver sees a red light and stops is the subject of investigation in the cognitive sciences. The topic is especially interesting because these processes happen in the black box of the human brain. In the 19th century, F. C. Donders's experiments measuring the speed of mental processes laid the foundation for cognitive models. In the paper-and-pencil version shown below, subjects either only classify a stimulus, or classify it and additionally choose between reactions. This illustrates the underlying rationale for all mental processes: different mental processes take different amounts of time.

Classification of stimulus: Draw a C above all capital letters:

To bE Or not tO Be, tHAt is ThE QueSTion [. WHat…]

Classification of stimulus plus choice of reaction: Draw a V above all capital vowels and a C above all capital consonants:

To bE Or not tO Be, tHAt is ThE QueSTion [. WHat…]
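The two tasks differ by exactly one decision per capital letter. The following minimal sketch makes that extra step explicit; it does not model human reaction times, and the function names (`classify_capitals`, `classify_and_choose`, `render`) are hypothetical, chosen only for illustration.

```python
VOWELS = set("AEIOU")


def classify_capitals(text: str) -> list[tuple[int, str]]:
    """Task 1: mark the position of every capital letter with 'C'."""
    return [(i, "C") for i, ch in enumerate(text) if ch.isupper()]


def classify_and_choose(text: str) -> list[tuple[int, str]]:
    """Task 2: classify each capital letter, then choose 'V' or 'C'."""
    marks = []
    for i, ch in enumerate(text):
        if ch.isupper():
            # The additional response choice that Task 1 does not require
            marks.append((i, "V" if ch in VOWELS else "C"))
    return marks


def render(text: str, marks: list[tuple[int, str]]) -> str:
    """Place each mark above its letter, as on the paper test sheet."""
    line = [" "] * len(text)
    for i, mark in marks:
        line[i] = mark
    return "\n".join(["".join(line).rstrip(), text])


sentence = "To bE Or not tO Be, tHAt is ThE QueSTion"
print(render(sentence, classify_capitals(sentence)))
print(render(sentence, classify_and_choose(sentence)))
```

Both tasks scan the same twelve capital letters; only the second adds a vowel/consonant decision for each of them.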

The second task usually takes longer than the first. This form of psychometric testing leads to the hypothesis that mental processes can run in parallel as well as serially. Depending on how much mental capacity is used, these procedures can measure performance with a sufficient degree of certainty (taking the error rate into account). A fundamental moderating variable is attention, as it massively influences processing resources.
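Donders's reasoning is usually described as a subtractive method: if the second task is the first task plus one extra serial stage, the difference in mean completion time estimates how long that stage takes. A minimal sketch, assuming purely serial, additive stages; the timing values below are invented for illustration and are not data from Donders or from this article.

```python
from statistics import mean

# Hypothetical completion times in seconds, invented for illustration only.
task1_times = [21.0, 19.5, 22.5, 20.0]  # classify stimulus
task2_times = [30.5, 28.0, 31.5, 30.0]  # classify stimulus + choose reaction


def inserted_stage_duration(simple: list[float], extended: list[float]) -> float:
    """Subtractive method: assuming the extended task equals the simple task
    plus one extra serial stage, the difference of the mean times estimates
    the duration of that extra stage."""
    return mean(extended) - mean(simple)


estimate = inserted_stage_duration(task1_times, task2_times)
print(f"Estimated duration of the response-choice stage: {estimate:.2f} s")
```

If stages overlapped in parallel, the subtraction would underestimate the extra stage, which is why the serial/parallel question raised above matters for interpreting such numbers.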

Resources for navigation, such as walking, and language processes can run in parallel. If attention is diverted to an interfering event, for example icy or wet roads, it becomes hard to carry on with the conversation. Driving a car, by contrast, which also draws on navigation resources, is much more differentiated and needs more resources, because more mental processes run in parallel (such as steering, monitoring traffic, etc.); every additional process has a negative impact on mental capacity. This comparison reveals a new dimension of mental processes: depending on the degree of attention, controlled processes (much attention) and automated processes (less attention) are differentiated. Walking is an automated process for almost all humans without disabilities, whereas driving a car varies greatly depending on the driver's experience. Hence, automated and controlled processes do not form a dichotomous classification; where a process falls depends on experience and on the interferences encountered.

When there is more than a simple interference and a target state cannot be reached for some reason, there is a problem. In problem solving, all mental processes are directed toward the target state, combining current information with memory. One form of problem solving is logical thinking or reasoning, where problems are often solved through inductive or deductive reasoning.

Regardless of their complexity, all problems have one thing in common: missing pieces of information that must be found or completed to solve the problem. From this initial state, the goal is to move to a target state by applying various mental operations. These are the three elements of the problem space: to solve a problem, the initial and target states must be analyzed and defined so that the necessary steps can be selected. If the initial and target states are clearly defined, an algorithm can be used: a systematic procedure that always leads to the right solution, like trying every possible combination of a combination lock. However, most problems and solutions are not clearly defined, and resources are scarce, which is why humans additionally use heuristics, a kind of simplified thinking, to solve problems.
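The combination lock makes the difference between an algorithm and a heuristic concrete. In this minimal sketch, the lock, the three-digit format, and the list of "likely" combinations the heuristic tries are all hypothetical assumptions for illustration: exhaustive search is guaranteed to succeed but may be slow, while the shortcut is fast when it works and simply fails otherwise.

```python
from collections.abc import Callable
from itertools import product

Lock = Callable[[str], bool]


def make_lock(secret: str) -> Lock:
    """Hypothetical lock: returns True only for the correct combination."""
    return lambda guess: guess == secret


def solve_by_algorithm(lock: Lock, digits: int = 3):
    """Algorithm: try every combination in order; always finds the solution."""
    for attempts, combo in enumerate(product("0123456789", repeat=digits), start=1):
        guess = "".join(combo)
        if lock(guess):
            return guess, attempts
    return None, 10 ** digits


def solve_by_heuristic(lock: Lock, candidates=("000", "123", "111", "999")):
    """Heuristic: only try a few 'likely' combinations; cheap, but may fail."""
    for attempts, guess in enumerate(candidates, start=1):
        if lock(guess):
            return guess, attempts
    return None, len(candidates)


lock = make_lock("472")
print(solve_by_algorithm(lock))  # finds "472" after trying 473 combinations
print(solve_by_heuristic(lock))  # gives up after 4 guesses without a solution
```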

In research, mental processes were investigated with the thinking-aloud method, in which subjects, for example while solving a riddle, had to verbalize all their thoughts in as much detail as possible. This verbalization method yields insights into various processes of the problem space and its complexity. Similarly, Aristotle investigated how humans reach a logically correct conclusion from various statements, in this case premises or assumptions. These processes are defined as deductive reasoning and are represented as syllogisms. Concretely, two premises lead to a conclusion (as in Zimbardo & Gerrig, 2008):

| Premise 1 | ∧ | Premise 2 | ⇒ | Conclusion |
|---|---|---|---|---|
| The restaurant accepts all important credit cards | ∧ | Visa is an important credit card | ⇒ | The restaurant accepts Visa |
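The syllogism in the table can be read as a statement about sets: premise 1 says the set of important cards is contained in the set of accepted cards, premise 2 says Visa belongs to the important cards, so Visa must belong to the accepted cards. A minimal sketch, in which the card sets and the function name `syllogism` are hypothetical illustrations:

```python
from typing import Optional

# Hypothetical sets, invented for illustration.
important_cards = {"Visa", "Mastercard"}
accepted_cards = important_cards | {"Maestro"}


def syllogism(card: str, accepted: set, important: set) -> Optional[bool]:
    """Return True if 'card is accepted' follows deductively from the premises,
    None if the premises license no conclusion about this card."""
    premise_1 = important <= accepted  # the restaurant accepts all important cards
    premise_2 = card in important      # <card> is an important credit card
    if premise_1 and premise_2:
        return True  # the conclusion follows with certainty
    return None


print(syllogism("Visa", accepted_cards, important_cards))     # True
print(syllogism("Maestro", accepted_cards, important_cards))  # None
```

Note the second case: Maestro happens to be accepted in this toy data, but the two premises alone cannot establish that, because premise 2 does not hold for it.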

Many problems can take the form of syllogisms. However, evaluating them and putting them into practice requires caution: biased judgements, pragmatism, and cultural differences can lead to false conclusions. In deductive reasoning the validity of the premises is in the foreground, whereas in inductive reasoning conclusions are drawn based on probability. This probability should always be disclosed by stating words or measures of uncertainty.
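One simple way to attach a stated probability to an inductive conclusion is Laplace's rule of succession, which estimates the probability that the next observation confirms a pattern as (successes + 1) / (observations + 2). This rule is a swapped-in textbook example, not a method named in this article, and the restaurant counts below are hypothetical.

```python
from fractions import Fraction


def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Laplace's rule of succession: estimated probability that the next
    observation is a success, given `successes` out of `trials` so far."""
    if not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials")
    return Fraction(successes + 1, trials + 2)


def inductive_conclusion(successes: int, trials: int, claim: str) -> str:
    """State an inductive conclusion together with its uncertainty."""
    p = rule_of_succession(successes, trials)
    return f"{claim} (estimated probability {float(p):.2f})"


# Hypothetical observation: 18 of 20 restaurants visited accepted Visa.
print(inductive_conclusion(18, 20, "The next restaurant probably accepts Visa"))
```

Unlike the deductive case, the conclusion is never certain; the estimate only approaches 1 as confirming observations accumulate.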

To sum up, deductive reasoning concludes from the general to the particular with 100% certainty, provided the premises are true. Induction concludes from single observations, for example data points, to the general, where certain premises lead to a conclusion with a given probability. A third way is abductive reasoning, a form of causal inference with a high risk of error. In the philosophy of science, the various forms of reasoning play an important role and have long been the subject of unresolved philosophical disputes. For example, the renowned epistemologist Karl Popper rejected inductive reasoning entirely and established the principle of falsification. Falsification follows a trial-and-error principle: hypotheses are exposed to attempts at refutation, false ones are eliminated, and those that survive are retained only provisionally.
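The asymmetry behind falsification can be sketched in a few lines: no number of confirming observations proves a universal hypothesis, but a single counterexample refutes it. The swan example and the function name `check_universal_hypothesis` are illustrative assumptions, not taken from this article.

```python
def check_universal_hypothesis(predicate, observations):
    """Popper-style test of 'every observation satisfies predicate'.
    Returns ('falsified', counterexample) at the first failure; otherwise
    ('not yet falsified', None), since passing tests corroborate the
    hypothesis but never prove it."""
    for obs in observations:
        if not predicate(obs):
            return "falsified", obs
    return "not yet falsified", None


def is_white(swan_colour: str) -> bool:
    """Hypothesis under test: all swans are white."""
    return swan_colour == "white"


print(check_universal_hypothesis(is_white, ["white"] * 1000))
print(check_universal_hypothesis(is_white, ["white", "black", "white"]))
```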

Surely, there are many ways to solve a problem. The logic behind logic is complex, and it constitutes the supreme discipline of Homo sapiens. With the invention and use of tools such as bow and arrow about 70,000 years ago during the cognitive revolution, and the ability to organize in groups and gossip, the dominance of Homo sapiens over other species such as the Neanderthals began (Harari, 2015). Thanks to the scientific revolution, these tools became more sophisticated and efficient, which brings us to the present moment: a society in constant technological change, with all its accomplishments, from the first tabulator around 1900 to omnipresent digital assistants like Amazon’s Alexa and sophisticated programs like IBM’s Watson or AlphaGo (DeepMind). Considering these historical developments and the further influence of research, the importance of AI for our future will most certainly be incontestable.

The mass of lurid articles and videos on the human-machine debate seems to leave an unpleasant aftertaste. However, there are various ways to shed light into the darkness, based on scientific, objective standards with a steady focus on legitimacy. The cornerstones of such efforts must be solid theory, evidence, and social relevance. Everyone dealing with AI must take one question into account: To what end? Halting the hype and doomsday fantasies through proper reasoning is, on its own, legitimization enough.

One possible systematic comparison may look as follows. The essential elements are (1) stimulus classification, the character of the external stimulus; (2) the access system, the system receiving the stimulus; (3) the inner system of information processing; and (4) the output system, the format in which the output (the reaction) is delivered. Next, an exemplary insight into each category is given; however, there are many more options to be considered.
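The four elements can be read as stages of a processing pipeline, applied here to the article's red-light example. This is a minimal sketch under stated assumptions: the class and function names are hypothetical, the "sensor" simply passes the stimulus through, and the inner system is a toy rule table standing in for whatever processing a human or machine actually performs.

```python
from dataclasses import dataclass


@dataclass
class Stimulus:
    modality: str  # (1) stimulus classification, e.g. "seeing"
    content: str   # what is perceived, e.g. "red light"


def access_system(stimulus: Stimulus) -> str:
    """(2) Receive the stimulus; this toy sensor passes its content on unchanged."""
    return stimulus.content


def inner_system(signal: str) -> str:
    """(3) Process the signal into a decision using a hypothetical rule table."""
    rules = {"red light": "stop", "green light": "drive"}
    return rules.get(signal, "slow down and observe")


def output_system(decision: str) -> str:
    """(4) Deliver the reaction in an output format."""
    return f"Reaction: {decision}"


def respond(stimulus: Stimulus) -> str:
    return output_system(inner_system(access_system(stimulus)))


print(respond(Stimulus("seeing", "red light")))  # Reaction: stop
```

Separating the stages this way mirrors the comparison that follows: each stage can be examined in humans and machines independently.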

The first dimension characterizes the stimulus and is defined around the five senses. The human species is equipped with five senses that classify stimuli: touching, hearing, seeing, smelling, and tasting.

Looking at the example of touchscreens and speech commands, it is clear that natural communication with a machine is still in its infancy despite technical advances: whereas controlling a phone with a touchscreen is well established, voice command is not yet culturally established. A natural interaction with voice control has yet to be developed. Role models and early adopters can act as catalysts to normalize this process. Furthermore, visual stimuli and their processing are hot topics in research. Clearly, the opportunities in healthcare, especially medical diagnostics, are drivers of innovation. Research, development, and implementation are quite far ahead here, yet there is still a long way to go in defining the role of AI in everyday medical practice.

Smell and taste are still quite visionary, but the opportunities are promising and not unrealistic. For example, a mobile device could detect a cold by analyzing the molecules in human breath; AI could determine whether a wine is corked, or detect gas or poison.

In everyday life, the access system plays a role because it can critically influence the user experience; a good microphone for speech input is one example. Machines differ in the technical quality of their access systems, whereas in humans such differences become fundamental only through disease or age. Here as well, human physiology served as a model for developing superior access systems. A classic example is comparing the human iris to the aperture of a camera, both of which adjust to lighting conditions. The human-machine comparison has led to considerable progress and highly sophisticated technology.

A functional and sophisticated access system is a necessary but not sufficient condition for highly developed machines, whether strong or weak AI. Nobody would consider an ordinary camera intelligent, because the interesting part is what gives meaning to those pictures, and in humans this happens in the brain.

The inner system is the core of the human-machine comparison, as this is where the magic happens. Everything that cannot be described objectively with data is open to speculation, discussion, and competition. The human-machine debate was reignited when IBM’s supercomputer Watson competed against the best Jeopardy! players and became the new, seemingly invincible Jeopardy! champion. Technological innovations such as artificial neural networks, a part of machine learning, gained popularity. In short: artificial neural networks are inspired by the architectural structure of the human brain. Inspired, but nothing more, because research is still far from revealing how the human brain functions.
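How loose the "inspiration" is becomes clear from the basic unit of such networks. In a common textbook formulation, an artificial neuron computes a weighted sum of its inputs plus a bias and passes it through an activation function such as the logistic sigmoid. The sketch below shows only that generic formulation; the weights and inputs are arbitrary illustrative values, and real networks stack many such units and learn the weights from data.

```python
import math


def artificial_neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of inputs plus bias, squashed into (0, 1) by a logistic
    sigmoid: a very loose abstraction of a biological neuron's firing."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    activation = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-activation))


# Arbitrary illustrative weights: the second input inhibits the neuron.
print(artificial_neuron([1.0, 0.0], [2.0, -1.0], -2.0))  # 0.5
print(artificial_neuron([1.0, 1.0], [2.0, -1.0], -2.0))  # about 0.27
```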

However, research and industry soon understood the huge potential of this issue and started to put this vision into practice. In 2005, the Human Brain Project (formerly Blue Brain; EPFL, Lausanne/Geneva, 2005–2018) was established. According to current information, researchers have succeeded in reconstructing a small part of a rat brain with artificial neurons. This is a huge accomplishment, achieved at heavy monetary cost using the most powerful supercomputer currently available, and committed to decoding the human brain in order to find cures for diseases such as Parkinson’s.

So the different disciplines stimulate each other, and it is impossible to determine who profits more. Technology as well as knowledge about the human brain have made tremendous progress. To be clear, though, it must not be inferred that artificially constructed neurons, like those used in AI, come anywhere close to the capacities of the human neuronal network. On a side note, around 130 researchers work on this pioneering international project and are now able to reconstruct the cortical column of a rat with about 30,000 neurons. In comparison, the human brain consists of about 100 billion neurons, and simulating it would hypothetically require dozens of supercomputers and cost billions.
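Using the article's own round figures, the gap in neuron count alone is roughly three-million-fold. This is only a rough order-of-magnitude comparison; it ignores synapses, connectivity, and cell types, which add further complexity.

$$
\frac{100\,000\,000\,000}{30\,000} = \frac{10^{11}}{3 \times 10^{4}} \approx 3.3 \times 10^{6}
$$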

Although artificial neural networks are only an abstraction, it must be noted that their incorporation into machine learning has led to better results on complex problems in various application areas such as image and pattern recognition (other areas are speech and character recognition, early warning systems, and robotics). Especially interesting for a broad audience without a technological background are the results of AI defeating humans at games such as chess or Go.

Referring to the cognitive processes explained above, research has shown that parallel processing comparable to that of the human brain is nearly impossible; serial processing remains the current state of research and practice.

With regard to automated versus controlled mental processes, the situation is more complicated. There are different perspectives to consider, but it is unclear which one makes sense, or whether any of them does. Controlled processes could be processes that need a lot of energy (working memory capacity or electricity, for example), which corresponds to human attention. An alternative would be to compare based on technological development: if a process needs a lot of human input, it cannot be put on the same level as automated, self-learning algorithms. Here, a comparison from the learning sciences perspective may bring more fruitful results; we have written about learning and AI before. From the learning perspective, the discussion around the structural architecture of the brain comes back into play, not only from a physiological but also from a philosophical point of view. The idea of a modular composition of the brain (with Broca’s area as an example) remains a hot topic and will continue to fuel disputes.

Another critical issue is decision making based on heuristics and probability. What are the thresholds, who defines them, and who takes responsibility when results are critical in a highly critical situation, for example in health or transportation? On another note, defining certainty thresholds for knowledge questions has much less critical consequences when used with weak AIs in everyday life. In a recent research project led by scip AG, digital assistants are tested with regard to their cognitive abilities, referred to as one dimension of intelligence. During a test with Amazon’s Alexa, Alexa added a phrase to its answer implying uncertainty or that further research is needed, for example “this might answer your question” followed by the answer. This expression of uncertainty is helpful not only for gaining insight into the functionality but also into the philosophy and logic of information processing, similar to the inductive reasoning explained above.
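The threshold question can be illustrated with a minimal sketch of a confidence-based answering policy. Everything here is hypothetical: the function name, the two thresholds (0.8 and 0.5), and the phrasing are assumptions for illustration, and nothing is implied about how Alexa or any other assistant actually works. The point is that someone has to choose these numbers, which is exactly the responsibility question raised above.

```python
def phrase_answer(answer: str, confidence: float,
                  answer_threshold: float = 0.8,
                  hedge_threshold: float = 0.5) -> str:
    """Hypothetical policy: answer plainly above answer_threshold, add a hedge
    between the two thresholds, and decline below hedge_threshold."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence >= answer_threshold:
        return answer
    if confidence >= hedge_threshold:
        return f"This might answer your question: {answer}"
    return "Sorry, I don't know that one."


print(phrase_answer("Bern", 0.95))
print(phrase_answer("Bern", 0.60))
print(phrase_answer("Bern", 0.20))
```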

Finally, the architecture of the output system can serve as a basis for comparison. Again, there are multiple options and perspectives on how the processed information, the target state, can be delivered. For humans, the five senses are a relevant dimension. When it comes to interaction, speech, including facial expressions and gestures, is most certainly the most important aspect. But less visible reactions are also applied in research, for example measuring skin resistance, a classic instrument for measuring advertising effectiveness. These measures of cognitive reactions are used to make evidence-based decisions on which motif is most likely to help increase sales: for example, whether to use a landscape or a group of people in a beverage advertisement. These and other results from consumer psychology are becoming especially relevant in concrete applications of AI, for example the use of humanoid robots as service assistants or digital assistants like Alexa at home or at work. Many research questions arise, concerning form, size, color, tone, accent, gender, screen or screenless design, and many more. This is not solely a design question but also a critical success factor, because one mistake with regard to gender or race discrimination can cause great harm to a company’s image and have legal consequences.

Furthermore, the concept of trust may play an important role when designing the output system. Results from the long history of psychological research about perception, judgement, bias, and decision making can make valuable contributions and can serve as models for further development.

Using the concept of trust as an example, various research designs are possible in which trust can be an independent (cause), dependent (effect), or moderating variable. An interesting hypothesis could concern the influence of a specific form of priming on trust building, or which variables of the output system lead to reactance. Culture, gender, education, and many other important context variables must be considered.

Creativity and opportunism within the human-machine debate know no limits. Not only researchers but also the public are interested in this promising field. Realism and constructive skepticism must be exercised, because the human mind is far from decoded, and it would be very dangerous to draw conclusions from something so vague. What, then, can be concluded, given that this comparison is not nonsense? To what end can we benefit from this question? The Human Brain Project is a good example of legitimization. Transferred to psychological research, a fruitful symbiosis may be created, as the human organism and AI are conceptually connected in many elements and can benefit from each other in terms of content and methods. Here is an example of what a symbiosis of AI research and psychology could look like.

Research Design: The hypothesis is that a specific form of priming influences trust building toward an AI. Here, psychological concepts are transferred to AI research.

Simple Form: IV → DV: If positive priming [IV: Independent Variable] → Then positive Trust-building [DV: Dependent Variable]

With intervening, moderating variables [IV, MV]: These variables should be measured to take third variable influences into account.

IV [Priming] → MV [Attitude] ↔ MV [Age] → DV [Trust]
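To see how a moderator such as age would show up in data, the minimal sketch below computes the priming effect separately per age group and then the difference between those effects (an interaction contrast). It covers only the Priming → Trust path moderated by Age; the Attitude variable is left out. The ratings, group labels, and function names are invented for illustration, and a real study would additionally need an inferential test, for example a two-way ANOVA or a regression with an interaction term.

```python
from statistics import mean

# Hypothetical trust ratings (1 = no trust, 7 = full trust), invented for
# illustration; keys are (priming condition, age group).
trust = {
    ("positive", "younger"): [6, 5, 6, 7],
    ("neutral", "younger"): [4, 4, 5, 3],
    ("positive", "older"): [5, 4, 5, 4],
    ("neutral", "older"): [4, 4, 4, 4],
}


def priming_effect(data, age_group):
    """Effect of the IV within one level of the moderator: mean trust after
    positive priming minus mean trust after neutral priming."""
    return mean(data[("positive", age_group)]) - mean(data[("neutral", age_group)])


def moderation_contrast(data):
    """How much the priming effect differs between age groups;
    a value of 0 would mean age does not moderate the effect."""
    return priming_effect(data, "younger") - priming_effect(data, "older")


for group in ("younger", "older"):
    print(f"Priming effect ({group}): {priming_effect(trust, group):+.2f}")
print(f"Moderation by age: {moderation_contrast(trust):+.2f}")
```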

In sum, there is no getting around the human-machine debate, whether for concrete applications or for generating new knowledge. On the surface, it is logical that computers should mimic the logic of the supreme humans to make them as efficient as possible. That is why cognitive processes were investigated, systematized, and recreated. Whether this is partly an embodiment of human hubris might be interesting to discuss, because a great number of empirical studies have shown that these supposedly supreme humans often do not think logically at all. Aren’t distorted perception, strong feelings like hate, fear, or love, and intuition much stronger driving forces in thought, decision making, and behavior?

Everything that seems rational, meaning that there are clear rules and sound probability calculations, is usually done much better by a machine, which makes the machine an ally in an idealized world view. However, humanity has been driven into a state of dependency from which no way back is foreseeable.

Just as the use of calculators in school had to be discussed, the role of weak AI in formal education must be evaluated, as must the role of learning content and of finding and evaluating information. Responsibility and rights must be redefined; creativity, critical thinking, and morality will acquire a new status in society.

In conclusion, this topic is not merely for sensationalism or publicity. A clear benefit from the symbiosis of ideas and methodologies has been shown, under the premise of adhering to the standards of science. As Karl Popper formulated it: light must be shed into the darkness, and trial and error is the name of the game. Whether, where, and in what form the black top hat will reveal its contents is still far from clear. However, the first step has been taken: recognizing the existence of the black top hat. It is a fact that computer science as well as neuroscience and psychology have achieved tremendous advances through this interdisciplinarity, and we are still at the very beginning. Most positive or negative ideas are still very visionary and far from everyday implementation. The self-driving car is technically well advanced and will soon be applicable, and it has been shown to clearly exceed human driving abilities. However, humans, with their trust system and their own evolution, are rather sluggish and skeptical, which is, for all intents and purposes, quite a good thing.

Let’s just assume the Human Brain Project achieves its first big goal, the complex reconstruction of a rat’s brain. The next step, in a distant future, would be the reconstruction of the human brain with all its neurological functions. Even if this construct functioned perfectly in physiological terms, one question remains: what brings life into these cells? The greatest secret of the brain is how it creates consciousness through neuronal activity in the nervous system. Everything else is simply lifeless matter. This almost otherworldly topic is the focus of the next issue of the series “Psychology of Artificial Intelligence”: AI and Consciousness.

References

Burks, A. (1946). Peirce’s Theory of Abduction. Philosophy of Science, 301–306.

Ang, C., et al. (2018). Mark Zuckerberg Ends Two Days of Testimony on Capitol Hill, New York Times: https://www.nytimes.com/2018/04/11/us/politics/zuckerberg-facebook-cambridge-analytica.html

Cognitive Computing Consortium (2018). https://cognitivecomputingconsortium.com/definition-of-cognitive-computing/

Deep Mind / AlphaGo (2018). https://deepmind.com/research/alphago/

Furber, S. (2016). Artificial Intelligence vs The Human Brain, Huffington Post: https://www.huffingtonpost.co.uk/steve-furber/artificial-intelligence-vs-the-human-brain_b_10434988.html

Harari, Y. N. (2015). Sapiens: A Brief History of Humankind. New York: HarperCollins Publishers.

IBM Watson (2018). https://www.ibm.com/watson/

IEEE Standards, Code of Ethics (2017). The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. http://standards.ieee.org/develop/indconn/ec/autonomous_systems.htm

Kelly, J. E. (2015). Computing, cognition and the future of knowing: How humans and machines are forging a new age of understanding (White Paper, IBM Research).

Kroeber-Riel, W., Weinberg, P., & Gröppel-Klein, A. (2009). Konsumentenverhalten. München: Vahlen.

Popper, K. R. (1968). Was ist Dialektik? In E. Topitsch (Ed.), Logik der Sozialwissenschaften Bd., pp. 262–290.

Ruef, M. (2017). Artificial Intelligence – A Model for Humanity, SCIP AG: https://www.scip.ch/?labs.20180104

Tschopp, M. & Ruef, M. (2017). Development of an Artificial Intelligent Quotient. https://www.iaiqs.org/

Pinker, S. (2018). Enlightenment Now: The Case for Reason, Science, Humanism, and Progress. New York: Allen Lane.

Chatham, C. (2007). Science Blogs, 10 important differences between brains and computers: http://scienceblogs.com/developingintelligence/2007/03/27/why-the-brain-is-not-like-a-co/

Stone, P. et al. (2016). Artificial Intelligence and Life in 2030. One Hundred Year Study on Artificial Intelligence: Report of the 2015-2016 Study Panel, Stanford University, Stanford, CA

Zimbardo, P., Gerrig, R., & Graf, R. (2008). Psychologie. München: Pearson Education.
