Transition to OpenSearch

Rocco Gagliardi

We tried to import data in MariaDB and Elasticsearch, but both solutions were slow. MariaDB had better/flexible search performance in the short term, but it does not scale very well. Elastic scales well, but requires more code to keep data under control and more hardware. In addition, the search engine was just a PoC, so we didn’t want go big in hardware.

sed.We recycled an old Shuttle/I5/8GB, changed the HD with a new SSD (Samsung 850Pro, 1TB) and installed Debian.

After the hardware preparation and some tests, we decided to go for a filesystem index:

We prepared some Python and shell scripts to deal with the data.

We pre-parsed the files with specialized tools or some sed scripts, until we get a reasonable files base, as described in the preceding chapter. Then we applied the following pattern:

file (f)email (e)"e, hash(f)" >> left(e,3)__.idxIn two days, all files were scanned and indexed. After that, it was possible to make a search for records containing a specific email address in a few seconds.

Attention was placed in the choice of the hashing algorithm; it was possible to save some Gb of redundant information. It was also important to balance the regular expression searching for mails: not too easy nor too complex.

| Parameter | Value |

|---|---|

| Number of files to analyze | 534 |

| Number of records to analyze | 1’895’800’000 |

| Total size of files | 222Gb |

| Parameter | Value |

|---|---|

| Number of chunks produced | 189’588 |

| Size of chunks produced | 222Gb |

| Number of indices produced | 63’242 |

| Size of indices produced | 42Gb |

| Number of extracted email addresses | 757’036’765 |

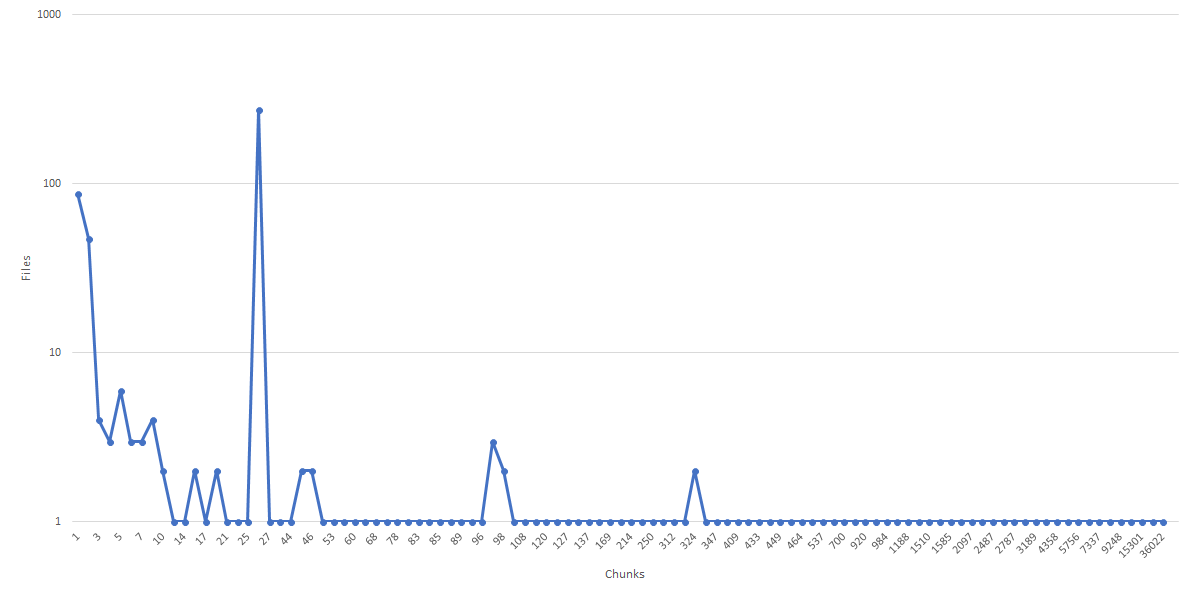

The chart shows on the x-axis the number of chunks and on the y-axis the number of files chunked by c. It shows how the original files have been chunked to flatten the access time.

| Parameter | Value |

|---|---|

| Preparation | ca. 1 day |

| Indexing | ca. 1 day |

| Speed of indexing by filesystem | ca. 9’000 records/second |

| Speed of indexing by MariaDB | ca. 350 records/second |

| Speed of indexing by Elastic | ca. 500 records/second |

| Parameter | Value |

|---|---|

| Search time without indexing | ca. 960s (16m) |

| Search time with indices, best-case | 0.005s |

| Search time with indices, worst-case | 66s |

| Number of indexed email addresses with access time < 5s | 559’356’992 (75%) |

| Number of indexed email addresses with access time between 5s and 10s | 110’331’635 (15%) |

| Number of indexed email addresses with access time > 10s | 77’810’353 (10%) |

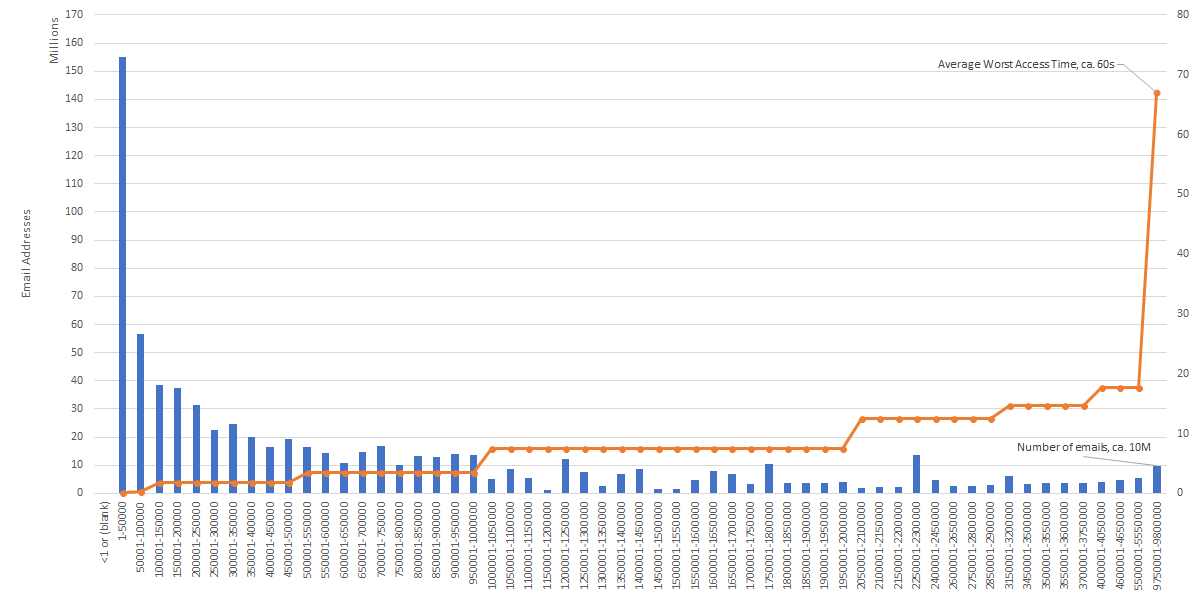

The following chart shows on the x-axis the indices in 50K cardinality groups (cg), on the left y-axis the number of email addresses in the cg, and on the right y-axis the worst access time [seconds] for the gc.

Circa 90% of the email addresses can be found within the 10s range, but the remaining 10% has an high access time. Based on statistics, a second indexing round has reindexed the top 10 indices by 4 chars; as result, the access time has been flattened to max 10s.

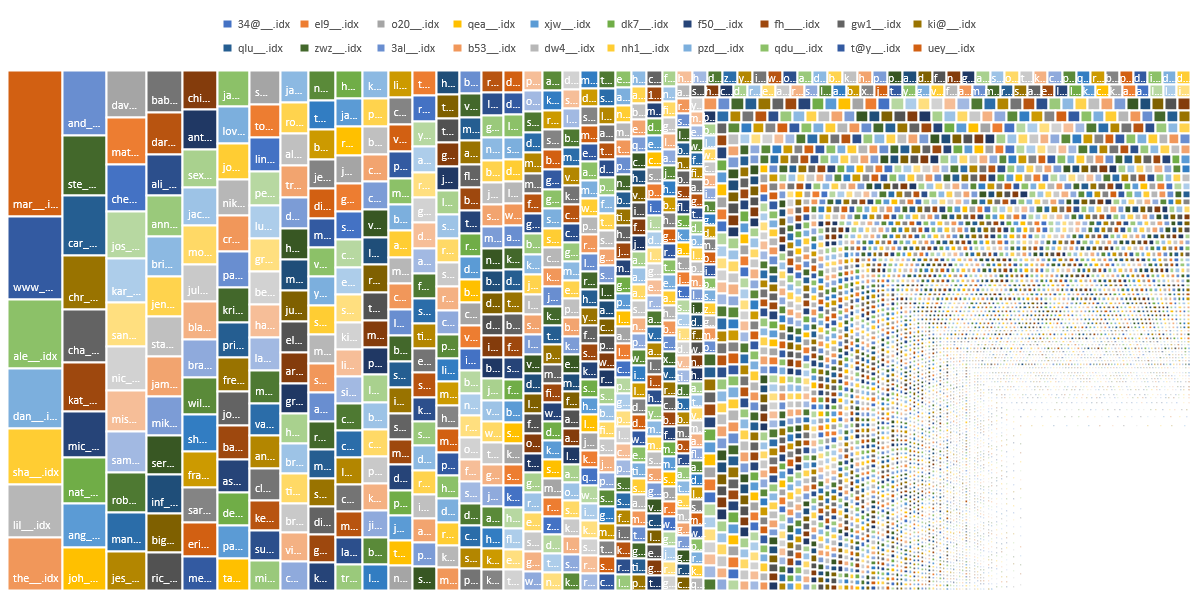

Indexing by the leftmost 3 chars, creates indices with different sizes, depending on how many email addresses starts with the 3 chars. The top 3 indices have millions of email addresses:

| Indices | Number of mail addresses |

|---|---|

| mar__.idx | 9’763’226 |

| www__.idx | 5’548’694 |

| ale__.idx | 4’605’329 |

We note that the majority of email addresses extracted starts with mar, at the second place www, which means that there are many “service” email addresses.

The following chart shows a treemap of the indices sizes.

Using dedicated tools, we were able to decrease the query time by many order of magnitude. Depending on the goals, instead of use hardware or big and complex tools, it is better to create ad-hoc solution and use the power of the OS tools.

Our experts will get in contact with you!

Rocco Gagliardi

Rocco Gagliardi

Rocco Gagliardi

Rocco Gagliardi

Our experts will get in contact with you!