Marc Ruef

This is how you measure the IQ of an AI

Interest in artificial intelligence seems unending. According to the 2017 AI Index Report (based on the Stanford 100-year Study on AI), over fifteen thousand papers across disciplines have been published in academia alone. In addition, an innumerable corpus of articles online and in print adds to the massive spread of information about AI, ranging from rock-solid science to trivial or superficial news that simply aims to cause a sensation (Stone, 2016).

While more people than ever are now confronted with artificial intelligence (intentionally or not), it is still hard to find a common understanding of the concept. The complexity lies in the term itself, which adopts the word intelligence for the technical, procedural capabilities of machines. It has thereby inherited a myriad of challenges from the long history of psychological intelligence research, from methodological problems (validity, reliability, etc.) to serious allegations of racial sorting by IQ tests during the Nazi period (Zimbardo, Gerrig & Graf, 2008).

In the present report, the topic of intelligence of man and machine will be approached from different perspectives.

According to Russell and Norvig (2012), four main categories of defining artificial intelligence have evolved: (1) human thinking and (2) human behavior, (3) rational thinking and (4) rational acting. Ray Kurzweil defines it as “the art of creating machines that perform functions that, when performed by humans, require intelligence” (Russell & Norvig, 2012, p. 23). The processing of natural language, which provides the opportunity to engage in human conversation and thus requires intelligence, falls into category (2), human behavior. Within the scope of this paper, it is unnecessary to delve too deeply into a philosophical discussion.

A major problem is the lack of definitions for speech-operated systems and the variety of names and labels in use, which complicates systematic literature search. Nouns used to describe these systems are assistant, agent, AI, or the suffix -bot (as in chatbot), extended by one to three adjectives such as intelligent, virtual, voice, mobile, digital, conversational, personal, or chat, depending on the context. This also hampers research and comparability, especially when it comes to evaluation (Jian, 2015; Weston, 2015). It is assumed here that voice-controlled conversational AIs such as Siri or Alexa are classified as so-called weak AI systems and assigned to the field of Natural Language Understanding and Natural Language Processing (Russell & Norvig, 2012). For a more detailed discussion of the definition of artificial intelligence beyond the nomenclature of conversational AI, see Bryson (2019) and Wang (2019).

According to Hernandez-Orallo (2016), evaluation is the basis of all progress. Hence, it is critical for AI research and practice not only to evaluate products and methods but also to discuss and compare evaluation practices per se.

Researchers, professionals, and others have attempted to measure and compare conversational AIs, predominantly systems such as Siri (part of Apple’s operating system) or Google Now (developed by Google), as well as general search engines. Such tests take various perspectives: comparisons between or within conversational AIs, and comparisons between humans and conversational AIs that set artificial against human intelligence.

The landscape of testing or evaluating AI-powered systems is quite heterogeneous, which complicates endeavors to take a meta-analytic approach. They differ as follows:

Feng and Shi (2014) propose the concept of an Internet IQ, using a test question bank (2014 Intelligence Scale). Seven search engines, such as Google or Bing, were tested, as well as 20 children of different ages. The questions aim at common knowledge, translation, calculating, ranking tasks, or creating and selecting information, and mirror the procedures of psychometric IQ tests. One category is the ability to grasp the selection, which contains questions such as:

Please select a different one from snake, tree, tiger, dog and rabbit.

Please select a different one from the earth, Mars, Venus, Mercury and the sun.

Besides expression via characters and sound, the ability to answer via pictures is measured, e.g.:

Input the character string “How much is 1 plus 1, please answer via pictures”, check the testing search engine whether can express the answer via pictures or not

However, some categories that are essential to intelligence are missing, such as memory. Furthermore, a dichotomous right-or-wrong evaluation might not be sufficient. In a follow-up project still in progress, this research group is conducting intelligence tests with conversational AIs, such as Siri and Google, and proposes a standard intelligence model which can be used for humans and machines. Their theoretical model comprises four major dimensions: acquire, master, create knowledge and give feedback (Liu, Shi & Liu, 2017).

A multilevel evaluation system including a memory component is proposed by Weston et al. (2015), focusing on methods to measure progress in machine learning, especially reading comprehension via question-answering batteries. One aim, among others, is to discover flaws in language-operated systems and improve them. Twenty tasks were developed, such as yes/no questions, time reasoning, or tests for coreference, where a pronoun refers to multiple actors:

Daniel and Sandra journeyed to the office. Then they went to the garden. Sandra and John travelled to the kitchen. After that they moved to the hallway. Where is Daniel? ⇒ Answer: garden

This ongoing project by Facebook AI Research is directed towards automatic text understanding and reasoning and is available on GitHub (the bAbI project). The datasets are not really applicable to publicly available voice assistants, such as Microsoft’s Cortana, as the complexity is too high.

To measure Cortana’s system performance, Sarikaya et al. (2016) report a set of experiments on the following chosen tasks or domains: Alarm, Calendar, Communication, Device Setting, Document, Entertainment, Local, Reminder, Weather, and Web. The evaluation was implemented on three levels: natural language understanding, hypothesis ranking and selection, and end-to-end system accuracy (E2E). E2E task success, which uses human judgment to measure success and satisfaction with the interaction, seems to be an interesting and promising approach. An example task is “delete my 6 a.m. alarm” in the Alarm domain.

One key challenge, especially with regard to personal conversational AIs, is user expectations: “From a user perspective, the high-level requirement is that a PDA should understand everything and be able to do almost anything, whereas developers aim to match or exceed user expectations but at an acceptable cost” (Sarikaya, 2016, p. 391).

To solve these challenges, Jian et al. (2015) propose another, automated approach to testing intelligent assistants, which evaluates user experience as a measurement of success. Their model aims to predict user satisfaction based on user interaction patterns. Jian et al. focus on intelligent assistants (Siri or Alexa, for example) and distinguish three functionalities: dialogue (voice commands), web search using voice input, and chat (for user entertainment). Proactive suggestions were not considered. In their user study, 60 participants tested Cortana, Microsoft’s intelligent assistant, on pre-defined questions in a mixed-methods design, including questions such as “How well did Cortana recognize what you said?” or “How well did Cortana understand your intent?”. Of particular interest, and based on previous work, are the defined actions of intelligent assistants, which serve as a good indicator of what an intelligent agent should be capable of: executing a task, confirming execution, asking for more information, providing options, performing a web search, reporting a system error, and taking no action (returning to the interface). Their functional approach is very useful for user experience and development. From an interdisciplinary perspective, however, the study lacks an overall, integrated framework of intelligence and cognitive processes. It rather focuses on testing calendar or call functions of the phone.

A variety of research questions have been addressed in the field of testing and evaluation, but uncertainty about what an AI is actually capable of remains, especially among the public, not to mention the fact that AI per se cannot yet be clearly defined. This study approaches testing from an interdisciplinary, behavioral perspective, focusing on conversational AIs. What can digital assistants actually do?

Our research makes contributions to four areas: First, we examine theories and knowledge about digital assistants across disciplines in academia as well as in practice, meaning the greater public. Hence, this study aims to expand knowledge in applied psychology, human-machine interaction, computer science, and technology management. Second, we want to expand current literature by adopting a psychometric, behavioral, end-to-end user approach. Third, our results can be used in a threefold manner: from a developer perspective (test and improve products), from a business perspective (as a benchmark), and from a rather academic perspective (tracking development over time). Fourth, we want to discuss the usefulness or necessity of officially recognized standards.

The research project A-IQ, as a measurement of performance, is part of an overall concept that investigates the role of trust in artificial intelligence. It will be discussed whether this idea has an influence as a tool to promote confidence in applied AI by measuring performance (see Lee & See, 2004). Furthermore, follow-up projects will examine which differences arise in the human assessment of skills in contrast to the actual skills and what influence this has on acceptance and trust (for trust indicators such as process and purpose and the provider, see Hengstler et al., 2015).

Consequently, to reach such common ground, artificial intelligence research inevitably requires an interdisciplinary approach (Stone, 2016; Nilsson, 2010). The present study primarily focuses on the perspectives of psychology and computer science, also taking economic considerations into account.

However, all test results, whether in research or in business, for entertainment or product tests (among many, see for example Dunn, 2017), must be read with caution and skepticism. Often there is a lack of profound conceptualization or operationalization of intelligence. For example, not disclosing the methodology hinders reproduction and valid judgment. Scientifically valid human intelligence tests are always adapted to specific target groups depending on age, culture, language or disabilities. Hence, it is obviously nonsensical to just use any valid IQ test, such as the Wechsler Intelligence Scale, for testing artificial intelligence abilities.

To avoid statements such as Siri’s IQ is only 30 or Siri has the IQ of a 5-year-old, it is necessary to rethink the way in which artificial intelligence is measured and situated into the appropriate context. Human intelligence testing has been a very controversial topic and has undergone dramatic changes in history and therefore is a topic not to be taken lightly (Zimbardo, Gerrig & Graf 2008, Kaufman & Sternberg 2010).

Our research project aims to understand, measure, compare and track changes in conversational AI capabilities following academic standards. Therefore, it is important not to approach testing methodology by simply taking human intelligence tests and applying them to an AI. To make valid statements about a conversational AI’s capabilities, our research argues that an intelligence test for conversational AI must be created from scratch, independent of the technical environment, and yet should be comparable with human capabilities to some extent. Personal conversational AIs are designed to help, support and assist humans in various tasks of varying complexity. We believe that natural handling and interaction through speech is vital to benefit fully from the opportunities that human-machine interaction has to offer. The rationale for adapting human intelligence tests to machines is to improve this interaction. However, one has to bear in mind that human intelligence tests are a measure of innate, stable mental capabilities, whereas the A-IQ serves as a measurement of the status quo and is subject to incremental, rapid growth.

This research also wants to provoke further discussion about how machines and humans cope with problems. How can a weak AI system, such as Siri or Alexa, benefit from the various forms of human reasoning and problem solving that are at the center of human intelligence tests?

To understand artificial intelligence from an interdisciplinary perspective, the corpus of psychological academic literature concerning human intelligence was consulted. The prerequisite, and therefore the first step in developing an A-IQ test, is to derive a concept of intelligence. This framework is understood as a system of abilities to understand ideas (questions or commands, for example) in a specific environment (e.g. put information into context) and to learn from experiences. This includes processing information as an experience (for example, something that has been learned beforehand) and engaging in reasoning to solve problems (e.g. to answer questions or solve tasks). The framework is specifically adapted to the AI context.

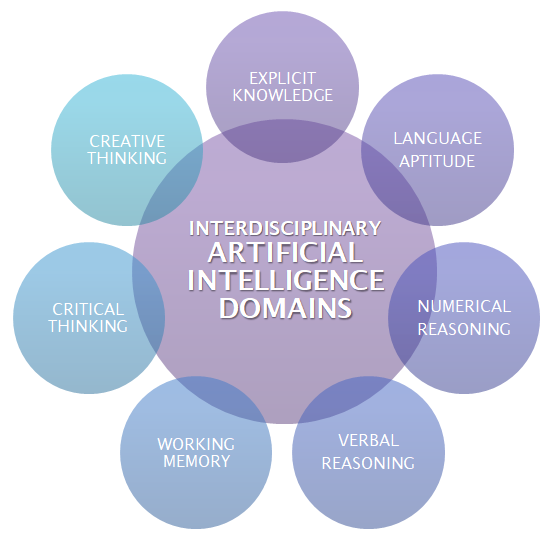

The following artificial intelligence domains represent this system of abilities. The categories were chosen based on recognized intelligence theories by Cattell & Horn and Kaufman & Sternberg, as well as on areas that overlap with the theories of Terman, Gardner and Guilford (Zimbardo, Gerrig & Graf 2008, Kaufman & Sternberg 2010).

One major factor was whether the domain is relevant to the current AI test environment. For this reason, mosaic tests for visual or spatial ability were excluded, though not for good: progress in computer visualization is remarkable, and these abilities can be expected to be included in the future. Also, questions related to intra- and interpersonal intelligence (concepts like emotional intelligence or empathy) have no relevance in this study (Liu & Shi 2017). Whether these concepts will become relevant in the future is questionable and viewed with great criticism.

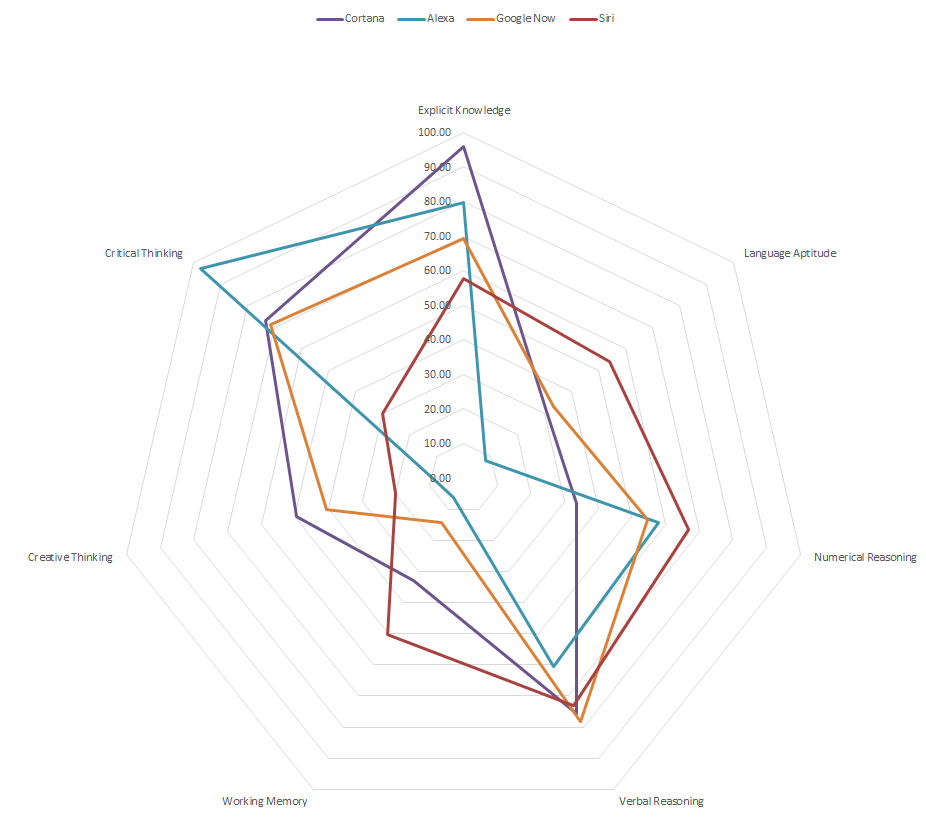

The AI domains aim to measure specific abilities, which all contribute individually with varying significance to the overall concept of interdisciplinary artificial intelligence. The framework integrates seven categories: Explicit Knowledge, Language Aptitude, Numerical and Verbal Reasoning, Working Memory, Creative and Critical Thinking as explained in the following table (Zimbardo, Gerrig & Graf 2008, Kaufman & Sternberg 2010).

| AI Domains | Description |
|---|---|
| Explicit Knowledge | Know-what as opposed to know-how: comparable to information or data found in books or documents; information which is readily available and can be transmitted to others through speech or text, like lexical knowledge. |
| Language Aptitude | Measures the ability to perceive or detect a language, to understand the content and answer the question in the same language. It measures translation abilities for sentences of medium difficulty (not only single words, which is seen as a prerequisite) as well as the flexibility to switch between languages. |
| Numerical Reasoning | Applying numerical facts to solve stated problems, as opposed to mathematical, calculating skills. It shows the ability to analyze and draw logical conclusions based on numerical data. |
| Verbal Reasoning | Understanding and handling words to create meaning and draw logical conclusions from a given content. Concepts are not framed in numbers but in words with a specific or ambiguous meaning. |
| Working Memory | Evaluates the ability to hold information available for a certain amount of time for processing. Evaluates the capacity to remember and retrieve random information, to repeat information and to answer questions based on prior conversation. The ability to understand the context temporarily, as opposed to a one-way, incoherent question-answer rhythm. |
| Critical Thinking | The ability to define and analyze a problem and to formulate counter questions adequately to get to a better solution; over-simplification is avoided and various interpretations of the questions as well as answer uncertainty are tolerated. |
| Creative Thinking | Also called divergent thinking, as a part of intelligence. Creativity operationalized as a divergent thought process is the ability to solve problems by generating multiple solutions. There is no correct answer; the answer is open-ended and judged qualitatively. |

The following section describes the different steps of the study. First, the framework of artificial intelligence domains was developed. Second, the various domains were operationalized through the development of the Interdisciplinary Artificial Intelligence Quotient Scale, hereby referred to as the iAIQs Scale. Several pilot tests were executed to review the items. Last, A-IQ tests were executed with commonly known conversational AIs, as well as with two humans and one less developed AI for internal comparison reasons. The results are represented as key performance indicators, on overall and domain level, and the actual A-IQ.

The iAIQs Scale mirrors classic intelligence testing procedures from academically constructed, psychometric IQ tests.

The questionnaire consists of 62 items assigned to the seven artificial intelligence domains. Each domain is operationalized by between four and 28 items. The domains Explicit Knowledge, Verbal Reasoning and Numerical Reasoning are adapted from the Wechsler Adult Intelligence Scale (Sternberg 1994). The Creative Thinking domain is based on the Torrance Test of Creative Thinking (Ball and Torrance 1984). Working Memory, Critical Thinking and Language Aptitude were developed by the researchers of this project. As an example, Working Memory is defined as the capacity to temporarily hold information available for processing. To operationalize it, question batteries were created which include at least one follow-up question to a non-evaluated starting question. The follow-up questions have different foci: for example, a follow-up question may refer to the first question or to the first answer (change of subject and object). In another scenario, the fourth follow-up question refers to the subject of the third follow-up question.
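The battery structure described above could be represented roughly as follows. This is a minimal sketch under stated assumptions: the class names `Turn` and `Battery` and the fields `evaluated` and `refers_to` are hypothetical and not part of the iAIQs Scale; the example turns follow the calculation item used for Working Memory.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """One question in a battery (hypothetical structure)."""
    text: str
    evaluated: bool = True   # the starting question is asked but not scored
    refers_to: str = ""      # what a follow-up depends on, e.g. "previous answer"


@dataclass
class Battery:
    """A sequence of turns asked in order within one conversation."""
    turns: list = field(default_factory=list)

    def scored_turns(self):
        """Return only the follow-up questions that count towards the score."""
        return [t for t in self.turns if t.evaluated]


battery = Battery([
    Turn("What is 3 times 2?", evaluated=False),    # non-evaluated starting question
    Turn("Plus 6?", refers_to="previous answer"),
    Turn("Minus 3?", refers_to="previous answer"),
])

scored = [t.text for t in battery.scored_turns()]
```

Keeping the starting question in the battery but flagging it as unscored mirrors the idea that only the follow-ups, which depend on retained context, measure Working Memory.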

The Critical Thinking domain, for example, was operationalized using homonyms. The conversational AI needs to understand that one word, for example crane, can denote a machine or a bird. This means there are multiple solutions, and the task measures to what extent the AI is able to evaluate and examine the question. The following tables give examples of how the domains were operationalized.

| AI Domain | Operationalization |
|---|---|
| Explicit Knowledge | Knowledge question with various levels of difficulty: How many kidneys does a normal person have? |
| Language Aptitude | Translation of whole sentences, detection of languages: Translate into German: The raven is bird, that likes to fly high up in the sky / What language is the word “l’amour”? |
| Numerical Reasoning | Handling numbers with words, calculation with differing units: I paid 7 dollars, how much is left over from my 20-dollar bill? / How much is 10% of 10 shoes? |
| Verbal Reasoning | Word and phrase definitions, word classification, multiple choice question, spelling, anagrams: What does the saying “the early bird catches the worm” mean? / Build a word from the letters K O R F |
| Working Memory | Remember and retrieval, questions/calculation without a subject, referring to prior content: Repeat the numbers 658 backwards / What is 3 times 2? (unweighted answer) Plus 6? Minus 3? (tbc) / What is the capital of France? (unweighted answer) How many people live there? (tbc) |
| Critical Thinking | Handling homonyms, why questions: What is a trunk? / Why is the sky blue? |
| Creative Thinking | Unusual uses test, hypothesizing: Name all uses for a brick you can think of! / What if people no longer had to sleep? |

For the purposes of this research, results are evaluated and represented through a database. Unique to this study is the elaborate evaluation through various answer categories, for example knowledge versus understanding. This means the answer can be right while the understanding is wrong and, vice versa, the concept may be understood while the answer is wrong. Each answer category has several subcategories, each assigned an individual score, to yield an even more detailed and accurate result.

Repeat, for example, has four subcategories: no repeat, repeat same question, repeat with modification, and more than three repeats. Pilot tests showed that this was necessary to ensure an appropriate rating when only slight modification was needed to get to the right answer, or to determine at what point the question is failed (instead of a 30-second timeout, for example).

| Answer Evaluation | Description |
|---|---|
| Repeat | This rating shows whether rewording or restating the question influences the outcome (slight modification) and ensures that quality differences between the AIs are represented. Inaccurate voice-to-text execution does not count as a repeat, unless it is a clear misunderstanding. |
| Knowledge | The answer demonstrates the objective correctness of the response to a question or command. It measures the accuracy of an answer without interpretations of the “black box” (the unseen processes within a system). |
| Understanding | This rating indicates whether the AI has understood the question in its context and in a logical manner, versus just coincidentally displaying the correct answer (a typical effect of simple pattern-matching systems with fuzzy logic). |
| Delivery | The underlying assumption here is that from the human-agent perspective speech plus additional information is the most desirable mode of delivery. The effort of obtaining an answer to a question should be as little as possible. |
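To illustrate how the four answer categories could be recorded independently of one another, the following sketch collects them into one rating per answer. The point values in `REPEAT_SCORES` and the function name `rate_answer` are assumptions made for illustration; the study does not publish its scoring table here.

```python
# Hypothetical point values for the four Repeat subcategories.
REPEAT_SCORES = {
    "no repeat": 3,
    "repeat same question": 2,
    "repeat with modification": 1,
    "more than three repeats": 0,
}


def rate_answer(repeat, knowledge, understanding, delivery):
    """Collect the four ratings for one answer into a single record.

    `repeat` is one of the four subcategory labels; the other three
    ratings are passed in as already-assigned numeric scores.
    """
    if repeat not in REPEAT_SCORES:
        raise ValueError(f"unknown repeat subcategory: {repeat!r}")
    return {
        "repeat": REPEAT_SCORES[repeat],
        "knowledge": knowledge,
        "understanding": understanding,
        "delivery": delivery,
    }


# A correct answer displayed by coincidence: knowledge is rated high,
# understanding low, and the question had to be rephrased once.
rating = rate_answer("repeat with modification",
                     knowledge=3, understanding=0, delivery=2)
```

Rating knowledge and understanding as separate fields is what allows the "right answer, wrong understanding" case to show up in the data at all.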

The combination of weighted questions and multilevel answer evaluation results in an overall key performance indicator (KPI). Each item yields a specific score. Each category consists of a minimum of four items. The average of the item scores reveals the score of the respective intelligence domain. The average of the intelligence domain scores results in the KPI:

$$\text{question\_quality}_n = \text{importance}_n \times \operatorname{average}(\text{repeat}_n, \text{knowledge}_n, \text{understanding}_n, \text{delivery}_n)$$

From the key performance indicator, the actual A-IQ is derived. The goal is a conceptual value similar to the way current IQ scores are calculated. It is calculated as a “deviation IQ” relative to the mean performance of the tested devices. Only the core products form the basis of the calculation (based on Zimbardo, Gerrig and Graf 2008).

$$\text{kpi\_product}_n = \operatorname{average}(\text{question\_quality}_1, \text{question\_quality}_2, \dots, \text{question\_quality}_n)$$

$$\text{aiq\_product}_n = \frac{100}{\operatorname{sum}(\text{kpi\_product}_1, \text{kpi\_product}_2, \dots, \text{kpi\_product}_n) \div \operatorname{count}(\text{products})} \times \text{kpi\_product}_n$$
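The three formulas above can be traced in a few lines of Python. This is a sketch, not the project's actual implementation: the function names mirror the formula names, the KPI is computed as a flat average over all items exactly as the formula is written, and the product names and KPI values in the example are made up.

```python
from statistics import mean


def question_quality(importance, repeat, knowledge, understanding, delivery):
    """Quality of one item: importance times the mean of the four ratings."""
    return importance * mean([repeat, knowledge, understanding, delivery])


def kpi(question_qualities):
    """KPI of one product: mean of its question_quality values."""
    return mean(question_qualities)


def aiq(kpis):
    """A-IQ per product: each KPI scaled so that the mean KPI maps to 100."""
    mean_kpi = sum(kpis.values()) / len(kpis)
    return {name: 100 / mean_kpi * value for name, value in kpis.items()}


# Hypothetical KPI values for three made-up products.
scores = aiq({"product_a": 10.0, "product_b": 20.0, "product_c": 30.0})
```

Because the A-IQ is a ratio to the mean KPI of the tested group, a product's value changes whenever the set of products it is compared with changes, even if its own KPI stays the same.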

This model allows inter-category comparison as well as overall performance comparison. The overall goal is to state a valid conclusion about an AI’s intelligence quotient as a reliable measurement of general ability. Furthermore, its progressive development in overall performance or within specific categories can be tracked over time. The KPI mechanism is fully backward-compatible, which allows comparison with earlier tests that had a modified structure.

Several tests (and pilot tests) were constructed and executed with commonly known, widely used conversational AIs which are publicly available. The investigators personally asked the questions to the end device, such as an iPhone or the Amazon Echo Show. Answers were evaluated simultaneously in a separate file according to the evaluation criteria. The core products of these tests were Google Now (by Google), Siri (by Apple), Alexa (by Amazon) and Cortana (by Microsoft). The tests were conducted on state-of-the-art devices with the latest firmware installed. To ensure fairness and an objective comparison of general ability, tests are constructed independently of the respective ecosystem, meaning that no additional apps are installed or used; only the core system is the target of the test.

Furthermore, two human participants (female, 29 years and male, 36 years) were added to the test group to provide comparison and context. Additionally, Cleverbot, a well-known public chatbot, was also added. With these additional tests we can discuss an overall validity and applicability of the test. The final analysis focuses on the core products.

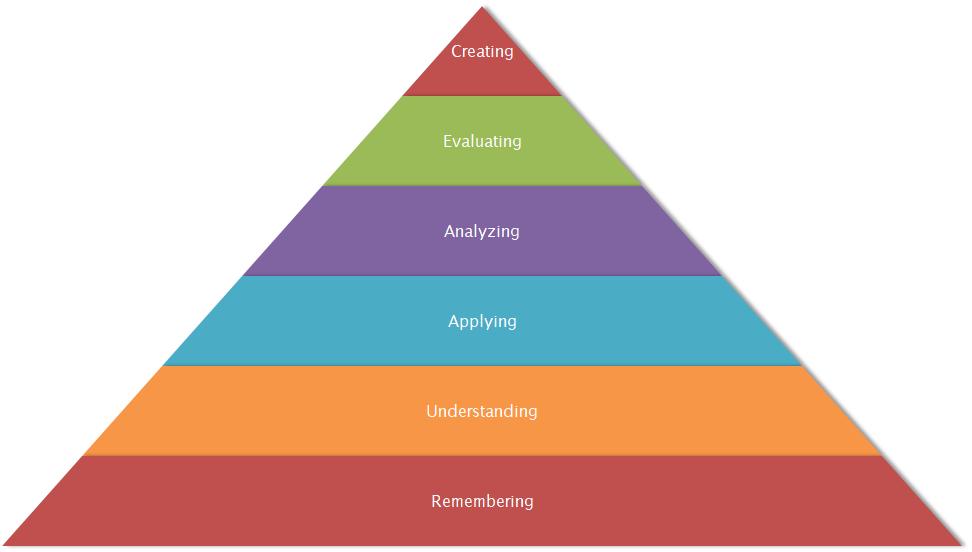

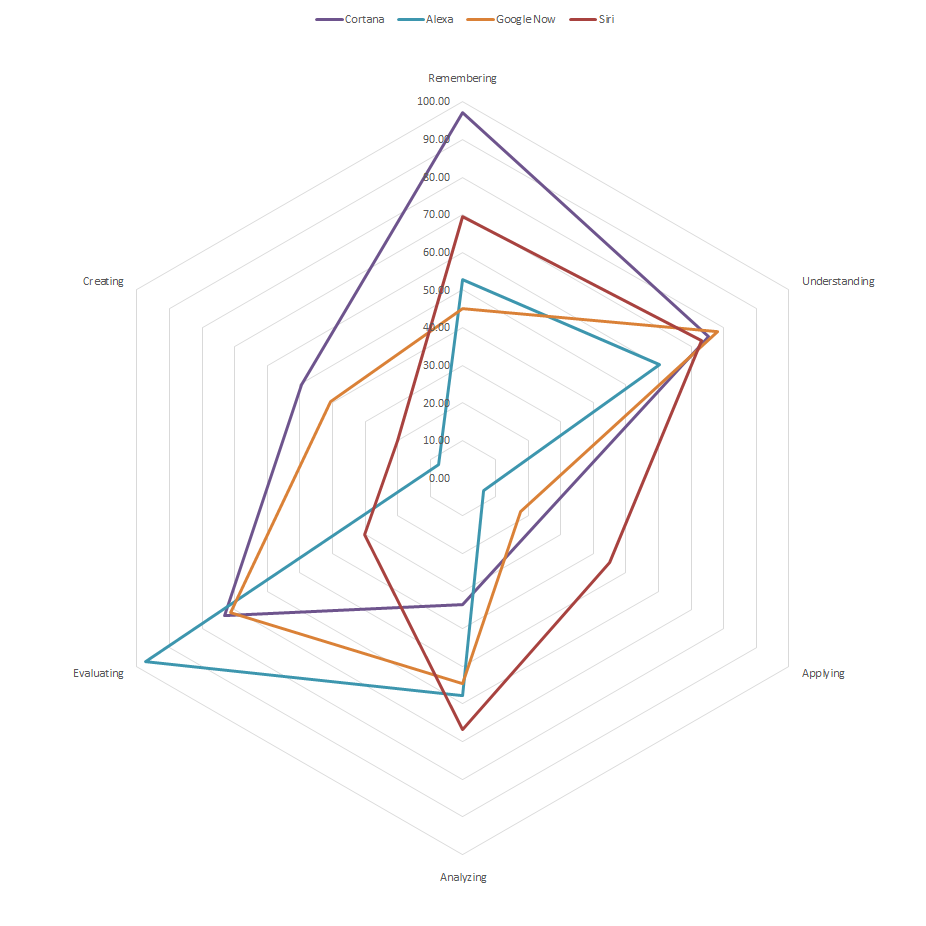

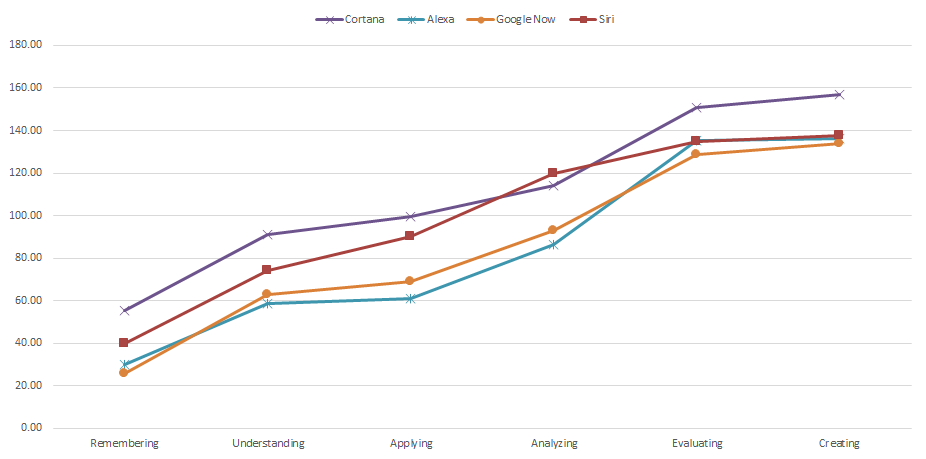

Moreover, we pursue an innovative approach in which different forms of thinking are classified. These forms follow Bloom’s Taxonomy, a well-known, recognized framework from the educational sciences (Bloom & Anderson, 2014). This is more of a conceptual undertaking, yet it gives a unique perspective on what kind of “cognitive processes” an AI is capable of, how they can be classified and, more importantly, how thinking styles can be ranked. Bloom distinguishes six categories ranked from lower to higher order skills. The pyramid starts at the bottom with remember, followed by understand, apply, analyze and evaluate, with create at the top.

All items of the A-IQ Scale are assigned a matching thinking style. For example, all questions of the Explicit Knowledge domain are assigned to the remembering unit, which is the bottom of the pyramid and a lower order thinking skill. Remembering includes mechanical mental processes, such as recognizing, listing, describing, naming. On the top of the pyramid, the higher order thinking skills, is create, which includes originating processes such as inventing, designing, constructing. The Creative Thinking domain is then logically assigned to this thinking skill.
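The ranking of thinking skills can be expressed as an ordered enumeration, which makes "lower" and "higher" order directly comparable. The sketch below is illustrative only: `BloomLevel` and `DOMAIN_LEVEL` are hypothetical names, and the mapping contains just the two domain assignments stated in the text.

```python
from enum import IntEnum


class BloomLevel(IntEnum):
    """Bloom's six categories, ordered from lower to higher order skills."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


# Only the two assignments named in the text; the remaining domains are
# left out because their levels are not stated here.
DOMAIN_LEVEL = {
    "Creative Thinking": BloomLevel.CREATE,
    "Explicit Knowledge": BloomLevel.REMEMBER,
}

# Sort the domains from lowest to highest thinking skill.
ranked = sorted(DOMAIN_LEVEL, key=DOMAIN_LEVEL.get)
```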

Our proposed artificial intelligence model is not only based on prevalent intelligence theories, but additionally embedded in the theory by B. Bloom. We see benefits in integrating the theories. It allows a comparison with human mental processes to remain realistic and is intended to warn against over- or underestimating AI capabilities.

Results are described from two perspectives: the overall performance with focus on the A-IQ and the key performance indicator (KPI) to compare overall performance. Then a deeper review per domain will be presented to shed light on individual differences.

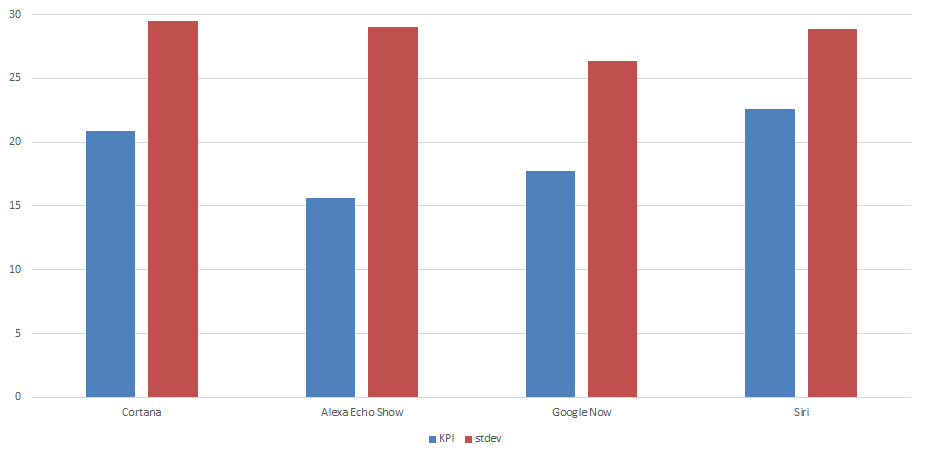

Results show that the KPIs range from 15.6 (Alexa) to 22.8 (Siri), with a possible maximum of 40.5 (the minimum is 0). Siri, Google Now and Cortana have similar deviations from the mean, which shows that the mean is quite an adequate measurement (fewer extreme results that distort the accuracy of the mean). Alexa, however, exhibits more extreme results, meaning these data points are very distant from the others. The answer distribution of Alexa is more heterogeneous. These “outliers” are treated neither as incorrect data nor as experimental errors; they are clear failures of execution. By looking at the standard deviation of the KPI, specific weaknesses can be identified on domain level.

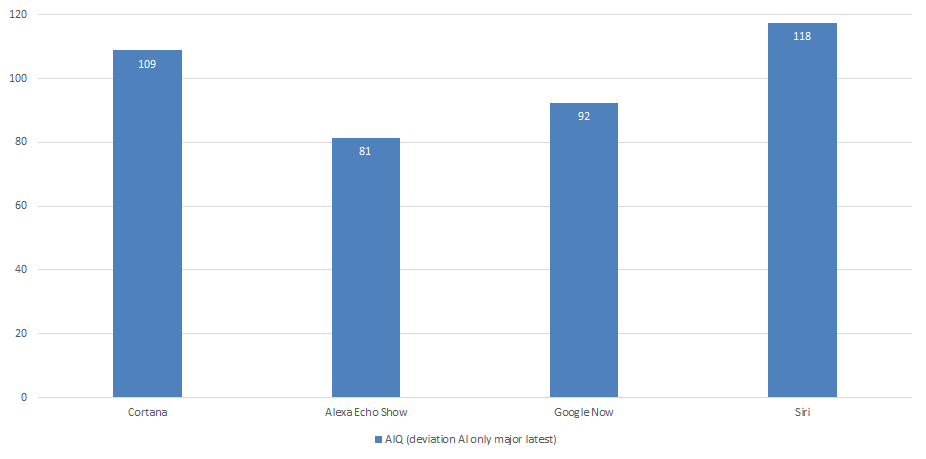

We have also calculated the A-IQ, a symbolic value that communicates abilities in such a way that they are understood implicitly, without knowledge of mathematics. As a guide: 100 is average, or normal. Over 120 indicates above-average or superior abilities. Below 80 indicates delayed, below-average or underdeveloped abilities.

The core products score between 81 (Alexa) and 118 (Siri). None of these core products shows deficient or superior performance. To test the effectiveness of our scale, we also tested two people, who show superior abilities of about 140, and an underdeveloped AI (Cleverbot), which shows deficient abilities below 50. This shows quite well that the scale is applicable to the core products.
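The interpretation bands can be written down as a small lookup. This is a sketch: the function name `aiq_band` and the label for the range between 80 and 120 are assumptions, and the value 49 merely stands in for "below 50".

```python
def aiq_band(aiq):
    """Map an A-IQ value to the interpretation bands described in the text."""
    if aiq > 120:
        return "above average"
    if aiq < 80:
        return "below average"
    # Label for the middle range is an assumption; the text only calls 100 "average".
    return "average range"


bands = {
    "Alexa": aiq_band(81),
    "Siri": aiq_band(118),
    "human participants": aiq_band(140),
    "Cleverbot": aiq_band(49),  # illustrative value for "below 50"
}
```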

The performance of the conversational AIs is quite similar within the categories Verbal and Numerical Reasoning, with a deviation range of <20%. Within the other categories, the deviation is >20%.

Cortana has exceptional results in the field of explicit knowledge. This means that direct, correct answers were spoken and given with additional multimedia information.

In Language Aptitude, Alexa performs poorly because it has no ability to recognize a language or create simple translations. This requires downloading a translation skill (an additional app). Siri offers translation as a core service and correctly recognizes a foreign word but cannot reply in spoken language. Simple words and phrases are easily and correctly translated by Siri, Google Now and Cortana.

In Numerical Reasoning, calculations in different currencies or objects are well understood overall. Alexa was the only one to give a correct answer to the question “What is 10% of 10 shoes?”, additionally stating that this “might” be the answer, which suggests that a kind of certainty threshold is involved. None of the conversational AIs was able to handle numbers expressed through words, yet Google Now and Siri in some cases performed a good web search leading to the right answer.

In Verbal Reasoning, the “mechanical” elements, such as spelling or word definitions, are all very well executed. None of the conversational AIs can make logical considerations or answer multiple-choice questions. Google Now did particularly well in defining idioms.

In Working Memory, Alexa performed very weakly, as it has no general ability to store information or context (even though a to-do list is available as a core feature). Cortana was able to store and retrieve one given piece of information. Siri excels in calculation over many turns (addition and subtraction) and refers to prior content when it comes to content-related questions. Google Now was able to add but not subtract over more than one session.

In Creative Thinking, none of the conversational AIs was able to produce multiple solutions to a question; Siri and Google Now scored only marginally, thanks to good web searches.

In Critical Thinking, Alexa was the only conversational AI that recognized ambiguities within the question and offered several solutions. None of the others showed similar performance. Where only one of the several correct answers was given, the concept was judged as not understood.

What conversational AIs can do:

| Domain | Alexa | Cortana | Siri | |
|---|---|---|---|---|
| Explicit Knowledge | answer definitional questions verbally, e.g. “what is…” | | | |
| Language Aptitude | translate simple sentences (preinstalled language is prerequisite) | | | |
| Numerical Reasoning | calculate in different units (uncertainty threshold) | calculate in different units | | |
| Verbal Reasoning | spell words verbally | | | |
| | define words verbally | | | |
| Working Memory | calculate context | | | |
| Critical Thinking | answer simple why knowledge questions | | | |
| | multiple answers to definition of a homonym | | | |
| Creative Thinking | | | | |

What conversational AIs sometimes can do:

| Domain | Alexa | Cortana | Siri | |
|---|---|---|---|---|
| Explicit Knowledge | answer questions with more complex format occasionally | | | |
| Language Aptitude | detect foreign languages occasionally but cannot react | | | |
| Numerical Reasoning | | | | |
| Verbal Reasoning | | | | |
| Working Memory | answers based on prior info | occasionally calc in context, remember random info, answers based on prior info | add numbers (but not subtract), sometimes answer questions based on prior information | sometimes answer questions based on prior information |
| Critical Thinking | | | | |
| Creative Thinking | | | | |

What conversational AIs cannot do:

| Domain | Alexa | Cortana | Siri | |
|---|---|---|---|---|
| Explicit Knowledge | | | | |
| Language Aptitude | detect or react flexibly to foreign languages | | | |
| | translate simple sentences | | | |
| Numerical Reasoning | calculate in different units | | | |
| | understand numerical meanings (e.g. 20 dollar bill) | | | |
| Verbal Reasoning | answer multiple choice questions | | | |
| | solve anagrams | | | |
| Working Memory | repeat content, repeat content with slight changes | | | |
| | remember, answer to prior, calculate in context | remember and retrieve random information | | |
| Critical Thinking | recognize homonyms (only gives one answer) | | | |
| Creative Thinking | generate multiple solutions | | | |
| | hypothesize | | | |

Noticeable, peculiar incidents with reference to test construction and performance must be discussed. The test gives many answers, but also raises many more questions.

It was expected that conversational AIs would perform well on lexical or definitional questions and on mechanical questions such as spelling, which is an important category in human intelligence tests. But if information from several sources has to be combined, or if the question departs from the classic definitional question-answer format “What is xyz”, performance varies, although the questions are rather simple and the answers are readily available on the Internet. It is apparently not a matter of not knowing, but rather a question of how the human communicates with the AI. In some cases, changing the question to “tell me” or “explain me” achieved a better result because it provoked a change in delivery mode (speech plus additional media scores higher than a web search). One could think the user has to “motivate” the AI to deliver via speech. A critical question appears: Does the AI have to learn to understand the questions, or should users adapt and phrase their questions so that they get the answers? It is interesting that Cortana performs best at lexical knowledge, because data, knowledge, and search are traditionally the domain of Google.

The areas of verbal reasoning, numerical reasoning and working memory are not well implemented, although they may be the most obvious tasks (in terms of the working definitions used in this paper). These areas are closely related, as all of them are based on verbal understanding, and it is a big challenge to define verbal understanding within AI. For example, Google Now can solve a puzzle, but if the numbers or words are changed slightly, it fails. The first answer is correct because it can be found somewhere on the Internet, but the failure after a change in wording shows that there is no understanding behind the correct answer. Whether this counts as “intelligent” or as learning progress needs to be discussed. A student may have memorized the answer to a riddle or may have worked it out by thinking. These are different approaches, yet in the school context both students achieve the right result and both could be labelled as intelligent: one solved the problem by thinking, the other by learning. It is like solving a Rubik’s Cube: one person learns the steps, the other tries to get by without models. Whether one is more or less intelligent than the other has to be discussed.

This question is also relevant for working memory. For most people aged 3 and over, remembering numbers or small pieces of information is an easy task. However, this seems to be a big challenge for conversational AIs. This may be due to our test configurations: the capacity exists if specific apps are installed or if the question relates to a core feature of the existing ecosystem. For example, Siri can remember and retrieve where the car was parked. Surprisingly, Cortana was able to store and retrieve random information. The general reluctance to store arbitrary content may also be a protection mechanism to avoid disasters similar to the incident with Microsoft’s chatbot Tay, which was abused to repeat or learn unethical content (Tschopp, 2018).

Another strange incident is that Google Now correctly related a third question to the first one, although it had taken the second question out of context, which is illogical. Moreover, it is inexplicable why Google Now can add numbers in a row but not subtract them.

When it comes to language capability, it is hard to understand why conversational AIs are so weak at recognizing or switching between languages, even when those languages are already implemented in the system, as with Siri or Alexa. In our globalized society with multilingual workspaces and relationships, it should be possible to recognize or switch between languages without having to manually change the default settings.

Higher-level thinking skills, namely creative and critical thinking, are clearly among the most interesting areas, as these skills continue to be unique, unattainable human assets. However, this may change in the future as conversational AIs become more active and act more independently (e.g. announce upcoming traffic jams). Today, they react as passive devices that meet the immediate needs of users. It is expected that motivated programmers will try to target these “unique human” skills in order to compete against humans and generate new media headlines.

From the manufacturer’s side it may seem highly unnecessary to implement all these functions. Why does a conversational AI need all these features? Is it necessary to compete at this level? One can argue that a conversational AI should only work and function within its own scope and that a universal intelligence, in the form of capabilities beyond that scope, is not worth the effort. This topic is open to discussion and depends on context. We are convinced that there must be such a standard intelligence quotient for all marketable products in order to improve user-friendliness and human-computer communication in the long term. We expect this to be one of the key competitive advantages if voice-driven systems dominate the industry. This does not mean that companies will have to share their internal solutions; it means that a standard of intelligence or communication capacity between man and machine will have to be created that inventors or programmers of conversational AIs can adhere to.

Finally, the appearance of the AI should be up for discussion. Companies dealing with AI need to be aware of the impact of the device’s appearance, such as its name, gender or hardware. This was not the focus of our project, but some interesting experiences were recorded. For example, we first tested the Amazon Echo Dot (without screen) and later the Amazon Echo Show (with screen), and observed a difference in perception: speaking with a black box without a screen is different from speaking with a device that transcribes your words on a screen. A correct answer from a screenless device had a certain surprise effect. With the other conversational AIs and with the Amazon Echo Show, this effect seemed reduced by the screen or the transcription. The “AI experience” with screenless assistants seemed smarter, more interesting and therefore more intelligent (which in fact is not the case).

The following sections describe weaknesses of the metrics and suggest ideas for follow-up research.

The iAIQs scale has undergone several pilot trials and reviews with key products and with humans. Questions and answers were changed and logged from the first draft onward. The documentation makes it possible to trace back every step taken to improve the validity of the scale. Although the multi-level, complex response evaluation is a gain in accuracy, an external reviewer was overwhelmed by performing the A-IQ test. It seemed too complex, and the results of external examiners were unusable. Assessors must be personally trained and have a certain understanding of the test. We propose an alternative solution: the automation of the procedure (the iA-IQ test unit, see below).

The biggest difficulties lie in the methodology of the test, above all in the low validity of the items. Replicating and verifying the items with and without automated procedures would be necessary to increase the validity of the test and to explain performance variations. In addition, the items must be verified and adapted. Especially in the Critical Thinking category, better evaluation methods are needed, as the chosen Why questions, for example, are much more knowledge questions. Whether the analysis of homonyms can be operationalized as critical thinking must also be treated with caution. Furthermore, the reliability is very questionable. The fluctuations in results show how little robustness the conversational AIs have, and from a behaviorist point of view there is no meaningful explanation for them. An integrated view (not just end-to-end) that makes the formation of hypotheses and answers explicable would be useful but is very unrealistic, as these internals will logically remain a well-kept company secret.

A-IQ tests can be executed with all kinds of conversational AIs, independent of their ecosystem. We propose a solution to automate the A-IQ testing procedure: a device that takes over the role of the personal analyst (the tester who currently evaluates the test manually). The questions will be automatically analyzed by the AI. Questions are asked acoustically and saved as audio data (e.g. an mp3 file). This data will be transformed into transcripts via speech-to-text, which will allow a continuous comparison with past test results. A similarity- or distance-based method such as Soundex or Levenshtein can then be used to determine differences in content (Knuth 1972). Deviations will be reported to the research department to identify implications and track changes in AI capabilities. Prototype software is already in use.
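The comparison step can be illustrated with a minimal sketch. It is not the prototype software mentioned above; it only assumes that transcripts are available as plain strings keyed by a question id. The function names (`levenshtein`, `deviation`, `changed_answers`) and the threshold of 0.2 are hypothetical choices made for illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimal number of insertions, deletions, substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution (or match)
            ))
        previous = current
    return previous[-1]


def normalize(transcript: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(transcript.lower().split())


def deviation(old: str, new: str) -> float:
    """Edit distance scaled to 0..1 by the length of the longer transcript."""
    old_n, new_n = normalize(old), normalize(new)
    longest = max(len(old_n), len(new_n))
    if longest == 0:
        return 0.0
    return levenshtein(old_n, new_n) / longest


def changed_answers(past: dict, current: dict, threshold: float = 0.2) -> list:
    """Question ids whose new transcript deviates by more than the threshold.

    The threshold is an assumed, illustrative value, not one from the study.
    """
    shared = past.keys() & current.keys()
    return sorted(q for q in shared if deviation(past[q], current[q]) > threshold)
```

In such a setup, `changed_answers(past_run, current_run)` would return the question ids to report as deviations. A character-level distance only flags that the wording changed; whether the answer became better or worse would still need a separate evaluation.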

We propose this as an emerging field with the potential to shed more light on the comparison of human and machine mental or cognitive processes.

After cumulating the scores in each thinking category, it remains unclear why there is no steady, parallel improvement, even though the start and end points are quite similar. In some cases, severe changes in quality can be seen, shown by overlapping progress (which is later reverted, as in Analyzing/Evaluating for Cortana and Siri). The biggest improvement can be identified for Alexa between Analyzing and Evaluating. Applying and Creating are the weakest capabilities of all current implementations.

We imagine that this model is a good predictor for explaining user experience enhancements and internal process improvements. In contrast to the category system, it is conceivable that there is less potential for conflict and problems of understanding or definition.

Performance is a critical factor of trust, which is, in turn, an important factor for the adoption of the technology. In the AI context, there are various reasons why trust has become a very popular research topic. One example is the military context, where lives can be saved when robots are designed to replace humans in high-risk situations (Yagoda & Gillan, 2012). Trust has always played a big role in the adoption of new markets, technologies or products, such as e-commerce, the fax or mobile phones. It will continue to influence business and economic behavior, for instance in the use of conversational AIs like Alexa to shop for food. However, security concerns (data rights and protection) are on the rise and are considered another decisive reason why users are reluctant to adopt forms of conversational AI. In follow-up research, our research team wants to work on a deep understanding of the concept of trust from a psychological perspective and add relevant security measures to the AI and testing landscape. Overall, the establishment of objective testing standards that incorporate performance, process, and purpose, combined with standards in information security, will be at the heart of future discussions on creating measurements for an appropriate level of trust.

This research was conducted to raise key questions and offer answers about the understanding, measurement, and comparison of human intelligence and the intelligence of conversational AI. Furthermore, this study wants to establish a general standard of intelligence that can be adopted by researchers and companies who aim to use (and test) artificial intelligence, for example conversational AIs in online banking or in cars. The Interdisciplinary Artificial Intelligence Scale (iAIQs) was developed based on the seven domains of the artificial intelligence framework. Additionally, we investigated and established interrelations with the thinking processes according to Bloom’s Taxonomy, which we propose as a field where more groundwork must be done.

The A-IQ gives an estimate of the capabilities of a conversational AI. With the automation of the testing procedure, we also set an efficient standard for introducing artificial intelligence testing to other entities. For the future, we propose further research and collaboration on each category to strengthen the validity of the constructs. Furthermore, we recommend testing the reliability of the test over time and with different testers in order to make the necessary improvements to the answer evaluation. We predict rapid progress within the domains, so we encourage researchers and practitioners to contribute to this research and to use the iAIQs scale for their own research or products, in order to generate better results and forecasts and to discuss ideas and implications for practice.

This research project aims to trigger interdisciplinary exchange and conversation as well as deeper understanding based on solid academic research focusing on practical, applicable solutions.

This research is part of the AI & Trust project, which focuses on measuring attitudes toward and perceptions of AI from a general public perspective. The A-IQ was developed to measure differences between perceptions and actual capabilities of conversational AI, and their influence on user trust.


