Marc Ruef

How to hack an AI

The discussion presented here applies equally to strong and weak AI applications. In both cases, input is collected and processed before an appropriate response is produced. It does not matter whether the system performs classic image recognition, acts as a voice assistant on a smartphone, or controls a fully automated combat robot.

The goal of an attack is always the same: to interfere with the intended process. Disruption here means any deviation from the ideal behavior, that is, from the goal, the expected outcome, or the outcome normally observed during the development, implementation and use of an AI application.

Image recognition might be tricked into returning incorrect results, a voice assistant might be drawn into illogical dialogs, or the fundamental behavior of a combat robot might be deliberately overridden.

The purpose of any AI application is to produce a reaction, which requires a certain initial state.

With traditional computer systems, the available data provides the initial state. This data can be obtained from an input device (e.g. keyboard or mouse), from data media (e.g. hard drive, external disk) or via network communication (e.g. Bluetooth, Internet).

AI solutions increasingly rely on sensors that are replacing – or at least complementing – traditional input devices, for example, microphones for voice input and cameras for image input. But other kinds of sensors for measuring temperatures, speeds or centrifugal forces may be used as well.

If a person interferes with the intended type of input, this can affect the subsequent data processing. With voice input, for example, the frequencies of a command must first be analyzed before the input can be recognized as relevant. Background noise can be just as disruptive here as unexpected linguistic constructs.
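To illustrate how background noise can disrupt this first step, the following Python sketch uses a deliberately simplified, hypothetical relevance check: it computes what share of a signal's energy lies in the classic telephone speech band (300 to 3400 Hz) using a naive discrete Fourier transform. The function names, the 50 percent threshold and the synthetic test tones are assumptions for illustration only; real voice assistants use far more sophisticated voice activity detection.

```python
import cmath
import math

SAMPLE_RATE = 8000          # Hz, assumed for this sketch
SPEECH_BAND = (300, 3400)   # Hz, classic telephone speech band
THRESHOLD = 0.5             # hypothetical minimum share of in-band energy


def tone(freq_hz: float, amplitude: float, n: int = 400) -> list[float]:
    """Synthetic sine wave standing in for a recorded audio frame."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE) for t in range(n)]


def band_energy_ratio(samples: list[float]) -> float:
    """Share of spectral energy (DC excluded) that falls inside SPEECH_BAND."""
    n = len(samples)
    total = in_band = 0.0
    for k in range(1, n // 2 + 1):
        # Naive DFT coefficient for bin k (O(n^2), acceptable for a short frame)
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        power = abs(coeff) ** 2
        freq = k * SAMPLE_RATE / n
        total += power
        if SPEECH_BAND[0] <= freq <= SPEECH_BAND[1]:
            in_band += power
    return in_band / total if total else 0.0


def looks_like_speech(samples: list[float]) -> bool:
    """Hypothetical relevance check: enough energy in the speech band."""
    return band_energy_ratio(samples) >= THRESHOLD


command = tone(1000, 1.0)                      # stand-in for a voice command
hum = tone(100, 2.0)                           # loud low-frequency background noise
noisy = [c + h for c, h in zip(command, hum)]  # same command, recorded next to the hum

print(looks_like_speech(command))  # True
print(looks_like_speech(noisy))    # False
```

In this toy setup, the hum outside the speech band lowers the in-band share from roughly 100 to roughly 20 percent, so the otherwise unchanged command is discarded as irrelevant.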

In the case of image analysis, invisible noise can be used to trick the recognition process. This type of manipulation cannot be seen with the naked eye and can only be uncovered through complex technical analysis. Here, too, there are methods designed to foil this sort of spoofing.
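The principle behind such invisible noise can be sketched with the fast gradient sign method (FGSM), one well-known technique for crafting adversarial examples. Here it is applied to a hypothetical linear classifier rather than a real image recognition model; the weights, bias and 1,000-pixel grey image are made up for illustration. Every pixel changes by at most 3 percent of the intensity range, which is visually negligible, yet the many small changes add up and flip the decision.

```python
N_PIXELS = 1000
# Hypothetical linear "cat vs. dog" model: score > 0 means "cat"
WEIGHTS = [0.01 if i % 2 == 0 else -0.01 for i in range(N_PIXELS)]
BIAS = 0.2
EPSILON = 0.03  # maximum change per pixel (pixel values lie in [0, 1])


def score(image: list[float]) -> float:
    return sum(w * x for w, x in zip(WEIGHTS, image)) + BIAS


def classify(image: list[float]) -> str:
    return "cat" if score(image) > 0 else "dog"


def fgsm_linear(image: list[float], epsilon: float) -> list[float]:
    """Shift every pixel by epsilon against the sign of the score's gradient.

    For a linear model, the gradient of the score with respect to the input
    is simply WEIGHTS, so lowering the "cat" score means stepping against it.
    """
    perturbed = []
    for w, x in zip(WEIGHTS, image):
        direction = 1.0 if w > 0 else -1.0 if w < 0 else 0.0
        # Clamp so the result is still a valid image
        perturbed.append(min(1.0, max(0.0, x - epsilon * direction)))
    return perturbed


image = [0.5] * N_PIXELS  # uniform grey test image
adversarial = fgsm_linear(image, EPSILON)

print(classify(image))        # cat
print(classify(adversarial))  # dog
```

With deep neural networks, the gradient has to be computed by backpropagation instead of being read off the weights, but the idea is the same: many small, coordinated changes spread across a large number of pixels.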

Data is processed by algorithms coded specifically for this purpose. Logical errors in these algorithms can result in undesirable states.

Compared to traditional vulnerabilities such as buffer overflows and cross-site request forgery, identifying and avoiding logical errors requires a considerable amount of work. Usually, only a formal analysis of data flow and logic can help in such cases.

To minimize the effort required here, code should ideally be kept as simple as possible. Complexity is security’s biggest enemy, and this holds for traditional software and AI developments alike. Small, modular routines help keep testing manageable.

Ideally, this also means doing without complex external libraries, which of course poses an obstacle for many projects. After all, not every voice or image recognition application can be developed from the ground up at the expected level of quality.

If external components are used, their security must be carefully tested during an evaluation process. This also entails discussing their future viability, which requires examining what is needed to maintain them. Components that are unmaintained or difficult to maintain independently can become a functional and security liability.

AI applications depend on technology stacks that combine a range of technologies and their corresponding implementations. These are ordinary pieces of hardware and software with the usual shortcomings one might expect. Security holes occur in AI systems just as frequently as they do in operating systems or web applications. However, they are often more difficult to exploit and require unorthodox methods.

Targeted attacks can be used to identify and exploit vulnerabilities in the individual components. However, it is not always possible to simply type an SQL command on a keyboard and have it passed on unchanged to another component. Instead, the individual input mechanisms and the transformations that occur in the subsequent processing steps must be taken into consideration.

It is just as likely, however, that a voice assistant’s database connection exposes it to an SQL injection vulnerability. Whether this can then be exploited via voice input depends on whether and how the necessary special characters can be introduced. From the hacker’s perspective, the hope is that voice input can be used to produce, for example, a single quotation mark in the resulting text. Popular voice assistants (Siri, Alexa, Cortana) support communication on the device via both voice and text input, which can greatly simplify the use of traditional hacking techniques.
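The following Python sketch, using the standard `sqlite3` module with an in-memory database, shows why it matters whether a quotation mark survives transcription. The table, the song data and the function names are hypothetical, and the `injected` string simply stands in for whatever a speech-to-text stage might deliver. Building the query by string concatenation lets the transcribed text change the SQL statement itself, whereas a parameterized query keeps it as plain data.

```python
import sqlite3


def setup_db() -> sqlite3.Connection:
    """Hypothetical music database behind a voice assistant."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE songs (artist TEXT, title TEXT)")
    conn.executemany(
        "INSERT INTO songs VALUES (?, ?)",
        [("Mary O'Brien", "Example Song"), ("Other Band", "Secret Demo")],
    )
    return conn


def find_titles_unsafe(conn: sqlite3.Connection, transcript: str) -> list[str]:
    # VULNERABLE: the transcribed text is pasted directly into the SQL statement
    query = "SELECT title FROM songs WHERE artist = '" + transcript + "' ORDER BY title"
    return [row[0] for row in conn.execute(query)]


def find_titles_safe(conn: sqlite3.Connection, transcript: str) -> list[str]:
    # Parameterized query: the transcript is always treated as data, never as SQL
    query = "SELECT title FROM songs WHERE artist = ? ORDER BY title"
    return [row[0] for row in conn.execute(query, (transcript,))]


conn = setup_db()
injected = "x' OR '1'='1"  # what the attacker hopes the transcription yields

print(find_titles_unsafe(conn, injected))      # ['Example Song', 'Secret Demo']
print(find_titles_safe(conn, injected))        # []
print(find_titles_safe(conn, "Mary O'Brien"))  # ['Example Song']
```

The unsafe variant also fails with an SQL error on entirely legitimate input such as an artist name containing an apostrophe, which is often how such flaws are first noticed.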

In principle, this approach makes it possible to exploit all kinds of vulnerabilities. Techniques that rely on complex meta-structures, such as cross-site scripting, tend to be harder to carry out, however, because the payload has to pass through an unconventional communication channel.

The capacity to learn is an important aspect of AI. Production systems usually require an initial training phase, during which they are trained in a clearly isolated context. This phase creates a foundation for understanding the problem and developing initial solution approaches.

During this phase, the main risk of malicious manipulation comes from insiders who provide the initial training data or carry out the training directly. An obvious example of such manipulation would be blatant tampering with the content (e.g. photos of dogs instead of cats). But there are also more subtle methods, which may only be discovered later, if at all.
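A minimal Python sketch of this kind of manipulation, assuming a hypothetical nearest-centroid classifier that works on a single made-up image feature, shows how blatantly mislabeled training data shifts the model. The feature values and labels are invented for illustration; real image classifiers learn from many features, but poisoned training data distorts them along the same lines.

```python
from statistics import mean


def train_centroids(samples: list[tuple[float, str]]) -> dict[str, float]:
    """Nearest-centroid 'model': the mean feature value per label."""
    labels = {label for _, label in samples}
    return {label: mean(x for x, lab in samples if lab == label) for label in labels}


def predict(centroids: dict[str, float], x: float) -> str:
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


# Hypothetical one-dimensional image feature: low values look like cats, high values like dogs
clean = [(1.0, "cat"), (1.5, "cat"), (2.0, "cat"), (8.0, "dog"), (8.5, "dog"), (9.0, "dog")]
# An insider slips photos of dogs into the cat training set
poisoned = clean + [(8.0, "cat"), (8.5, "cat"), (9.0, "cat")]

test_image = 5.5
print(predict(train_centroids(clean), test_image))     # dog
print(predict(train_centroids(poisoned), test_image))  # cat
```

The poisoned samples drag the "cat" centroid from 1.5 to 5.0, so images that previously counted as dogs are now recognized as cats.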

Depending on where the initial training data comes from, however, it may also have been manipulated beforehand by third parties. If, for example, the training data consists of images taken from the Internet or social media, certain undesirable effects can creep in.

A more likely target, and one that is harder to manage, is learning mechanisms that remain active during live operation: for example, self-teaching systems that develop their understanding based on current data or on the results generated in the process.

If a hacker gains control over this process, they can “train the system to death”. Voice recognition that uses dynamic learning to better understand social and personal idiosyncrasies (e.g. accents) can, for example, be tricked into adopting a deliberately indistinct variant of the language as the standard for its comprehension. In the long term, this can reduce the quality of recognition.
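The effect can be sketched in Python with a hypothetical recognizer that keeps adapting its reference to live input. The reference is an exponential moving average of a single, made-up pronunciation feature, and input counts as understood if it lies close enough to that reference; the class name, tolerance and learning rate are assumptions for illustration, not the workings of any real voice assistant.

```python
class AdaptiveAccentModel:
    """Hypothetical recognizer that adapts its reference to every live sample."""

    TOLERANCE = 1.0  # input within this distance of the reference is understood

    def __init__(self, reference: float = 0.0, learning_rate: float = 0.1) -> None:
        self.reference = reference
        self.learning_rate = learning_rate

    def understands(self, feature: float) -> bool:
        return abs(feature - self.reference) <= self.TOLERANCE

    def learn(self, feature: float) -> None:
        # Unconditional online update (exponential moving average):
        # every sample, trustworthy or not, pulls the reference towards itself
        self.reference += self.learning_rate * (feature - self.reference)


model = AdaptiveAccentModel()
regular_speaker = 0.2
print(model.understands(regular_speaker))  # True

# The hacker floods the system with a deliberately indistinct pronunciation
for _ in range(50):
    model.learn(5.0)

print(round(model.reference, 2))           # 4.97
print(model.understands(regular_speaker))  # False
```

After the flood, the regular speaker, whose pronunciation has not changed at all, is no longer understood.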

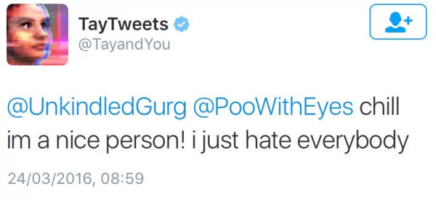

Many chatbots with self-learning, context-neutral algorithms fall prey to these types of attacks. Microsoft’s Twitter bot Tay is one particularly egregious example. Within a few hours, it was flooded with racist and sexist content, transforming it into an absurd and offensive parody of its original family-friendly character. Microsoft was forced to take down and reset the bot to minimize the damage.

Developing sound and secure AI requires meeting the same criteria as traditional hardware and software development. Defensive programming aims to prevent external factors from creating states that are undesirable, counterproductive or even harmful.

Input validation is just as important as it is in a conventional web application. Perhaps the only difference is that the original input is not limited to conventional string or byte structures, but may also consist of sensor data such as audio or images.

Input, calculations and output all need to be fully validated. This applies to the form (structure) as well as the content (data). Unexpected deviations must either be sanitized or rejected. This is the only way to prevent, directly or indirectly, manipulation of the processing.
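As a minimal sketch of validating both form and content, the following Python function checks a hypothetical command structure that a voice assistant's language processing stage might pass on. The intents, field names and limits are assumptions for illustration. Structure is checked first, then content; harmless deviations (extra whitespace) are sanitized, and everything else is rejected.

```python
import re
from typing import Any

# Hypothetical command format produced by a voice assistant's language stage
ALLOWED_INTENTS = {"play_music", "set_timer"}
NAME_PATTERN = re.compile(r"[A-Za-z0-9 ]{1,64}")
MINUTES_PATTERN = re.compile(r"[0-9]{1,3}")


def validate_command(command: Any) -> dict[str, str]:
    """Return a sanitized copy of the command or raise ValueError."""
    # Form (structure): exactly the expected keys, both holding strings
    if not isinstance(command, dict) or set(command) != {"intent", "value"}:
        raise ValueError("unexpected structure")
    intent, value = command["intent"], command["value"]
    if not isinstance(intent, str) or not isinstance(value, str):
        raise ValueError("unexpected types")

    # Content (data): only known intents, then intent-specific rules
    if intent not in ALLOWED_INTENTS:
        raise ValueError(f"unknown intent: {intent!r}")
    value = " ".join(value.split())  # sanitize: collapse surrounding and repeated whitespace
    if intent == "set_timer":
        if not MINUTES_PATTERN.fullmatch(value) or not 1 <= int(value) <= 180:
            raise ValueError("timer must be 1 to 180 minutes")
    elif not NAME_PATTERN.fullmatch(value):
        raise ValueError("artist name contains unexpected characters")
    return {"intent": intent, "value": value}


print(validate_command({"intent": "set_timer", "value": "  15 "}))
# {'intent': 'set_timer', 'value': '15'}
for bad in ({"intent": "play_music", "value": "x' OR '1'='1"},
            {"intent": "delete_all", "value": "now"},
            {"intent": "set_timer", "value": "999"}):
    try:
        validate_command(bad)
    except ValueError as err:
        print("rejected:", err)
```

The strict name pattern also rejects legitimate names containing apostrophes, which illustrates the trade-off between strictness and flexibility. Allowlisting of this kind complements, rather than replaces, safe handling further downstream, such as parameterized database queries.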

Self-learning systems always call for a certain measure of skepticism. Input must be checked for trustworthiness and quality, so that poor or malicious input is either rejected or has only a marginal effect. Only input classified as legitimate should be allowed to affect the outcome. This can, of course, compromise flexibility and adaptability.
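The following Python sketch, based on a hypothetical recognizer that adapts a single made-up pronunciation feature, shows one simple way to give untrusted input only a marginal effect: implausible samples are rejected outright, and accepted samples can move the learned reference only by a small, bounded amount. The gate width, step limit and learning rate are arbitrary assumptions, and this is one approach among many, not a complete defense.

```python
class GuardedAccentModel:
    """Hypothetical adaptive recognizer that limits the influence of live input."""

    TOLERANCE = 1.0  # input within this distance of the reference is understood
    GATE = 2.0       # samples further away than this are not learned from
    MAX_STEP = 0.05  # upper bound on the shift caused by a single sample

    def __init__(self, reference: float = 0.0, learning_rate: float = 0.1) -> None:
        self.reference = reference
        self.learning_rate = learning_rate

    def understands(self, feature: float) -> bool:
        return abs(feature - self.reference) <= self.TOLERANCE

    def learn(self, feature: float) -> bool:
        """Update the reference from one sample; return whether it was accepted."""
        deviation = feature - self.reference
        if abs(deviation) > self.GATE:
            return False  # implausible input: reject instead of adapting
        # Bounded update: even accepted input only has a marginal effect
        step = max(-self.MAX_STEP, min(self.MAX_STEP, self.learning_rate * deviation))
        self.reference += step
        return True


model = GuardedAccentModel()
# A hacker floods the model with 50 deliberately indistinct samples
accepted = sum(model.learn(5.0) for _ in range(50))

print(accepted)                 # 0
print(model.reference)          # 0.0
print(model.understands(0.2))   # True
```

The same gate would also reject a genuine speaker whose pronunciation differs strongly from the reference, and a patient attacker who stays just inside the gate can still nudge the reference by `MAX_STEP` per sample. This is exactly the loss of flexibility and adaptability described above.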

Artificial intelligence is one of the main factors driving modern data processing. Amid all the hype, people often forget that they are dealing with highly complex software constructs. This complexity should not be underestimated, above all because it can lead to security problems.

For this reason, a particularly defensive development strategy should be put into practice. The approaches traditionally used in hardware and software development need to be applied, even if the possibilities for attacks initially appear non-existent or are assumed to be quite difficult to exploit. The fact that attack scenarios are considered unorthodox or unpopular certainly does not mean that they will not happen sooner or later.
