
Marc Ruef

How to Collect Passwords in Data Markets

This article discusses how leaked passwords are collected and evaluated for analytics. This involves several challenges. First, the leaks must be found, collected, normalized, and checked. Only then can they be imported efficiently into a database, which must be designed and optimized beforehand. Finally, this enables queries and analyses that can be used for both defensive and offensive purposes.

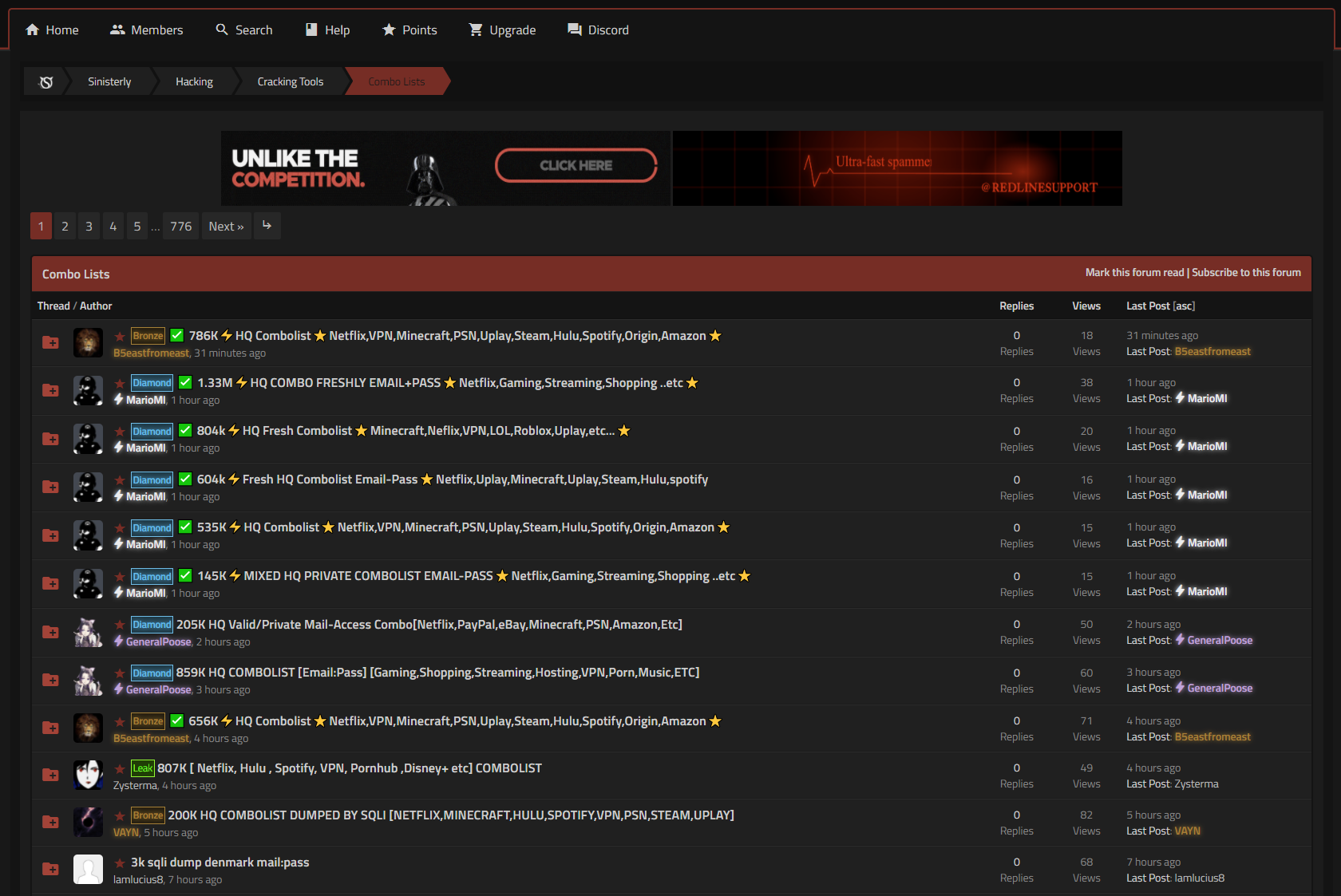

Evaluating password leaks begins with collecting them: one must first obtain the leaked password data. This usually happens on data markets, where various kinds of data, including password leaks, are traded. Some marketplaces deal exclusively in password leaks. Transactions can take place on the markets themselves or on other platforms; Telegram, for example, has become established for this in recent years.

A distinction is made between two types of markets:

Interested parties sometimes tag their posts in the subject to make their intent clear:

Access is often difficult, especially where cutting-edge and highly volatile data is traded. Usually an invitation is required: a person who has already been accepted in the market invites the newcomer and thereby vouches for them. If the newcomer causes problems, the person who invited them loses their status as well.

Once we have infiltrated a market, we search it regularly for new or interesting leaks. We also use custom bots with text analysis capabilities to identify interesting offers and exchanges. As soon as such leaks are found, they are downloaded and filed for later processing.

Older leaks that have already been sold and traded several times will eventually be made available to a wider audience free of charge. This can also happen in semi-public forums, on file sharing sites or peer-to-peer networks (e.g. torrent).

Normalizing the leaks is an enormous challenge. The data must be checked and converted into a consistent format so that the subsequent import can run as efficiently and smoothly as possible.

Leak data comes in a variety of file formats. In some cases an organization was fully compromised, so the leak consists of many files in different formats, such as emails or office documents. Sometimes entire database dumps are provided, usually as SQL exports, each with its own table and column layout. In both cases, custom parsers are required for normalization, and handling such unstructured data formats can become highly complex.

Ideally, and this is the preferred format in password trading, so-called combo lists are provided. In the broadest sense, these are CSV files: each line holds one data record, which usually consists of a pair of two data parts. Typical examples:

mail address ↔ password

username ↔ password

The two data parts are separated by a delimiter. Typically a colon (:) is used, sometimes a semicolon (;). Other delimiters, such as a tab (\t), are rare. Delimiters frequently change in the middle of a leak, especially in compilations, which are common among combo lists. Detecting and correcting these changes can be very tedious.
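Delimiter changes can at least be located automatically. The following is a minimal sketch, assuming that only the three delimiters named above occur and that the delimiter appearing earliest in a line separates the two parts (passwords may contain colons or semicolons, mail addresses rarely do). The function names are hypothetical.

```python
from typing import Iterable, Iterator, Optional, Tuple

# Candidate delimiters from the text; limiting the check to these three is an
# assumption, other leaks may need further candidates.
CANDIDATES = (":", ";", "\t")


def detect_delimiter(line: str) -> Optional[str]:
    """Return the candidate delimiter that occurs first in the line, if any."""
    positions = {d: line.find(d) for d in CANDIDATES if d in line}
    if not positions:
        return None
    # Earliest occurrence wins: the left part (mail or user name) is assumed
    # not to contain delimiters, while passwords often do.
    return min(positions, key=lambda d: positions[d])


def split_record(line: str) -> Optional[Tuple[str, str]]:
    """Split a record once at the detected delimiter."""
    line = line.rstrip("\r\n")
    delimiter = detect_delimiter(line)
    if delimiter is None:
        return None
    left, right = line.split(delimiter, 1)
    return left, right


def delimiter_changes(lines: Iterable[str]) -> Iterator[Tuple[int, str, str]]:
    """Yield (line_number, old, new) wherever the detected delimiter switches."""
    current = None
    for number, line in enumerate(lines, start=1):
        delimiter = detect_delimiter(line)
        if delimiter is not None and delimiter != current:
            if current is not None:
                yield number, current, delimiter
            current = delimiter


sample = ["alice@example.com:secret", "bob@example.org:hunter2", "carol@example.net;pa:ss"]
print(list(delimiter_changes(sample)))  # [(3, ':', ';')]
print(split_record(sample[2]))          # ('carol@example.net', 'pa:ss')
```

Splitting only once (`maxsplit=1`) keeps passwords that contain the delimiter character intact.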

It is also necessary to distinguish between leaks labeled NOHASH and HASH. NOHASH refers to passwords given in plain text: either they were already contained in the original leak, or they were recovered by password cracking. HASH leaks contain password hashes instead of plain-text passwords; these have to be cracked first. Occasionally a leak is labeled NOHASH+HASH, meaning it contains both variants.
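When a leak is not labeled, its type can be estimated heuristically. The sketch below is an assumption-based illustration, not a standard method: it recognizes only a few widespread encodings (unsalted hex MD5, SHA-1, SHA-256 and bcrypt) and will misclassify a plain-text password that happens to look like a hex string.

```python
import re

# Heuristic patterns for a few common hash encodings (assumption: real leaks
# contain many more formats, e.g. salted or base64-encoded hashes).
HASH_PATTERNS = {
    "md5": re.compile(r"[0-9a-f]{32}", re.IGNORECASE),
    "sha1": re.compile(r"[0-9a-f]{40}", re.IGNORECASE),
    "sha256": re.compile(r"[0-9a-f]{64}", re.IGNORECASE),
    "bcrypt": re.compile(r"\$2[abxy]\$\d{2}\$[./A-Za-z0-9]{53}"),
}


def classify_secret(value: str) -> str:
    """Return the name of the matching hash pattern, or 'plain'."""
    for name, pattern in HASH_PATTERNS.items():
        if pattern.fullmatch(value):
            return name
    return "plain"


def classify_leak(secrets) -> str:
    """Label a collection of secrets as HASH, NOHASH or NOHASH+HASH."""
    kinds = {"HASH" if classify_secret(s) != "plain" else "NOHASH" for s in secrets}
    if kinds == {"HASH", "NOHASH"}:
        return "NOHASH+HASH"
    return kinds.pop() if kinds else "EMPTY"  # "EMPTY" is a hypothetical label
```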

In any case, the data must be checked carefully and normalized if necessary, because it has usually already been processed by someone else beforehand. Cybercriminals do not always strive for perfection, so their preparation is often faulty. This can cause problems later during the import: incorrect data may be imported, or existing records may be skipped because they are incorrectly formatted. For example, combo lists sometimes contain mail addresses with duplicate at signs (@@), probably due to incorrect concatenation.

First, it is important to find out how the file is structured: which data pairs are used and how they are separated. The command `head -n 2 *.txt | grep -v "@"` offers a quick check: it lists, for each file, those of the first two lines that contain no at sign (@). The `==> file <==` headers that head prints for multiple files pass the filter as well, which shows which file each line belongs to. This makes it possible to distinguish leaks with user names from those with mail addresses.

A more thorough check of whether mail addresses are used throughout is possible with `grep -v '.\{1,50\}@.\{3,50\}:.\{0,50\}' *.txt`. This searches all lines and prints those that do not match the rough shape of a mail address followed by a colon and a password. However, many leaks contain incorrect mail addresses, for example because someone entered foo.bar@example.co (without the trailing m) instead of foo.bar@example.com. The question then arises whether to ignore these records (the entire line), store them in their presumably broken form anyway, correct them automatically, or import only the correct part of the data pair (in this case, the password).
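The same check can be expressed in Python during normalization. This is a rough sketch, assuming the mail address is everything before the first colon; unlike the unanchored grep pattern, the regular expression below must match the whole line, and it checks only the general shape local@domain.tld, not full RFC 5322 syntax. The function name `invalid_lines` is hypothetical.

```python
import re
from typing import Iterable, Iterator, Tuple

# Anchored (via fullmatch) approximation of "mail:password"; the limits 64,
# 253 and 63 follow common length limits for local part, domain and label.
MAIL_LINE = re.compile(r"[^@:\s]{1,64}@[^@:\s]{1,253}\.[A-Za-z]{2,63}:.*")


def invalid_lines(lines: Iterable[str]) -> Iterator[Tuple[int, str]]:
    """Yield (line_number, line) for lines without a plausible mail address."""
    for number, line in enumerate(lines, start=1):
        line = line.rstrip("\r\n")
        if not MAIL_LINE.fullmatch(line):
            yield number, line


lines = ["foo.bar@example.com:secret", "foo@@example.com:pw", "justauser:pw"]
print(list(invalid_lines(lines)))  # [(2, 'foo@@example.com:pw'), (3, 'justauser:pw')]
```

Note that a typo such as foo.bar@example.co still passes, since it is syntactically valid; such cases can only be caught with additional knowledge, for example a list of known domains.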

Ideally, these approaches are combined intelligently. First, faulty structures are corrected, which can be done very efficiently with classic tools such as sed and awk. If no correction is necessary, or the defective data part still meets a minimum level of correctness, it is imported. Otherwise, only the correct part of the data pair (e.g. the password) is kept and the incorrect part (e.g. the mail address) is discarded.
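The three-step strategy can be sketched per record as follows. This is a hypothetical helper that performs in Python what sed or awk would do on the whole file; the repair rule (collapsing repeated at signs) and the minimal plausibility pattern are assumptions chosen for illustration.

```python
import re
from typing import Optional, Tuple

# Minimal plausibility check for a mail address (assumption, not RFC 5322).
MAIL = re.compile(r"[^@\s]{1,64}@[^@\s]+\.[A-Za-z]{2,63}")


def normalize_record(mail: str, password: str) -> Tuple[Optional[str], str]:
    """Return (mail or None, password) following correct / import / discard."""
    candidate = mail.strip()
    # Step 1: repair a known structural fault, duplicated at signs (@@).
    candidate = re.sub(r"@{2,}", "@", candidate)
    # Step 2: keep the mail part if it now looks minimally plausible.
    if MAIL.fullmatch(candidate):
        return candidate, password
    # Step 3: otherwise keep only the password and discard the mail part.
    return None, password


print(normalize_record("foo@@example.com", "hunter2"))  # ('foo@example.com', 'hunter2')
print(normalize_record("not-a-mail", "hunter2"))        # (None, 'hunter2')
```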

It is particularly unpleasant to have to correct or remove incorrectly imported data afterwards. The relational structure of the database makes such interventions difficult, and the enormous amount of data accumulated over time makes adjustments very time-consuming.

The goal of data collection is to make the data storable, analyzable, and searchable. To this end, the raw leak data must be imported into a database. Before this can be done, a data model must be created that specifies which data is stored in which form.

Combo lists, the preferred format to work with, are two-dimensional data structures. These table files could be used directly as a pseudo database: compiled combo lists are stored and searched with classic tools such as grep. This is possible even with a large leak like ANTIPUBLIC #1 and its almost 2.8 billion rows. The big advantage of this quick-and-dirty approach is that it avoids the tedious import into a real database.

In return, it introduces disadvantages that grow as more data accumulates. Data is stored with high redundancy. Although storage has become steadily cheaper in recent years, this effect should not be underestimated. Records are often contained in multiple leaks, either because they actually appeared in different sources or because they were not deduplicated when combo lists were merged. Besides wasting storage, every increase in the data set makes analysis and searching more expensive. Queries take longer and longer, which reduces practicality.

The goal should be to avoid redundancy as far as possible and to answer normal queries for individual records within a few seconds. A relational database is the best choice for this purpose: the data is stored in separate tables, and the relations between them are stored in intermediate tables.

The following graphic lists the tables suitable for collecting mail addresses and passwords, together with the relative number of rows and the storage size in MB. The number after a table name indicates how many indices are defined on that table.

Domains in tbl_domain and passwords in tbl_password are stored separately, which prevents redundancy. Associations between mail addresses and passwords are stored in tbl_mail_password. Associations with sources in tbl_source are stored separately in tbl_source_mail and tbl_source_password. This approach strikes a balance between simplicity, efficiency, and relationality, resulting in less redundancy and better performance.
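The data model can be sketched as a schema. Only the table names tbl_domain, tbl_password, tbl_source, tbl_mail_password, tbl_source_mail and tbl_source_password come from the text; tbl_mail and all column names are hypothetical, and SQLite stands in for whatever database server is actually used. Mail addresses are assumed to be stored as a local part plus a reference to the domain, so each domain name is stored only once.

```python
import sqlite3

# Hypothetical column layout for the tables named in the text.
SCHEMA = """
CREATE TABLE tbl_domain   (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE tbl_mail     (id INTEGER PRIMARY KEY, local_part TEXT NOT NULL,
                           domain_id INTEGER NOT NULL REFERENCES tbl_domain(id));
CREATE TABLE tbl_password (id INTEGER PRIMARY KEY, value TEXT NOT NULL);
CREATE TABLE tbl_source   (id INTEGER PRIMARY KEY, name TEXT NOT NULL);

-- Intermediate tables hold only ID pairs, so each value is stored once.
CREATE TABLE tbl_mail_password   (mail_id INTEGER NOT NULL, password_id INTEGER NOT NULL,
                                  PRIMARY KEY (mail_id, password_id));
CREATE TABLE tbl_source_mail     (source_id INTEGER NOT NULL, mail_id INTEGER NOT NULL,
                                  PRIMARY KEY (source_id, mail_id));
CREATE TABLE tbl_source_password (source_id INTEGER NOT NULL, password_id INTEGER NOT NULL,
                                  PRIMARY KEY (source_id, password_id));

-- Non-unique lookup indices for duplicate checks during the import.
CREATE INDEX idx_domain_name    ON tbl_domain (name);
CREATE INDEX idx_mail_lookup    ON tbl_mail (domain_id, local_part);
CREATE INDEX idx_password_value ON tbl_password (value);
"""


def create_schema(conn: sqlite3.Connection) -> None:
    conn.executescript(SCHEMA)


conn = sqlite3.connect(":memory:")
create_schema(conn)
```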

Special attention should be paid to creating the indices. They should be as small (short) as possible to keep memory usage low, yet efficient enough to avoid lengthy queries and high CPU usage. Multi-column indices in particular need to be optimized: the cardinality of the individual columns determines their order within the index. Index requirements can change over time. After at least 1 million imported records, it should be checked whether the index definitions still meet current needs. Keep in mind that dropping and rebuilding an index becomes very time-consuming as the number of records grows (several hours per table).
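Column cardinality can be measured with `COUNT(DISTINCT ...)` before defining a multi-column index. The sketch below orders columns from most to least distinct values, a common rule of thumb for equality lookups; it is an assumption rather than a universal rule, because the actual query patterns (which columns are filtered on their own) also matter. The helper names are hypothetical, and table and column names are interpolated directly, so they must come from trusted code.

```python
import sqlite3
from typing import List, Sequence


def columns_by_cardinality(conn: sqlite3.Connection, table: str,
                           columns: Sequence[str]) -> List[str]:
    """Return the columns sorted by descending number of distinct values."""
    counts = {}
    for column in columns:
        (counts[column],) = conn.execute(
            f"SELECT COUNT(DISTINCT {column}) FROM {table}").fetchone()
    return sorted(columns, key=lambda c: counts[c], reverse=True)


def create_composite_index(conn: sqlite3.Connection, table: str,
                           columns: Sequence[str], name: str) -> List[str]:
    """Create a multi-column index in cardinality order; return that order."""
    ordered = columns_by_cardinality(conn, table, columns)
    conn.execute(f"CREATE INDEX {name} ON {table} ({', '.join(ordered)})")
    return ordered
```

On a large table the `COUNT(DISTINCT ...)` scans are themselves expensive, so they are best run on a representative sample or during maintenance windows.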

After the leaks have been collected, normalized and the database prepared, they can be imported. During an import, the raw data is validated, converted and stored in the database. This allows appropriate evaluations and queries in a later step.

A script reads the combo lists and processes them line by line, i.e. one data record at a time. The delimiter must be known or detectable so that each line can be split into its elements. These are then stored in the respective tables, and the relations between them are recorded in the intermediate tables.

An additional validation step can take place here, for example to detect and handle errors in parsing the raw data. Whether, and in which phase (normalization or import), such measures are taken depends on the chosen approach.

One reason to prefer a relational database is its efficient handling of duplicates. For simplicity, tbl_domain can, for example, define UNIQUE on the name column. This prevents the same domain from being stored twice in the table: if a duplicate is inserted, the INSERT is rejected.
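A minimal illustration of database-side duplicate detection, using SQLite in place of the actual database server and a hypothetical two-column tbl_domain. A plain INSERT raises an integrity error on a duplicate; SQLite's `INSERT OR IGNORE` and MySQL's `INSERT IGNORE` discard the duplicate silently instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# UNIQUE on name lets the database itself reject duplicate domains.
conn.execute("CREATE TABLE tbl_domain (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE)")


def insert_domain(conn: sqlite3.Connection, name: str) -> bool:
    """Insert a domain; return False if the UNIQUE constraint rejected it."""
    try:
        conn.execute("INSERT INTO tbl_domain (name) VALUES (?)", (name,))
        return True
    except sqlite3.IntegrityError:
        return False


for domain in ["example.com", "example.org", "example.com"]:
    print(domain, insert_domain(conn, domain))  # True, True, then False

# SQLite backs the UNIQUE constraint with an automatically created index.
print(conn.execute("SELECT name FROM sqlite_master WHERE type = 'index'").fetchall())
```

The last line shows the drawback discussed next: the constraint is enforced through an index on the full column.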

In this case, duplicate detection is handled efficiently by the database. At the same time, however, an index is created on the full column declared UNIQUE. Given the amount of data involved, this index can become extremely large, so large that processing it (e.g. in RAM) is no longer possible or only much slower.

For this reason, it is recommended to let the import script handle duplicates. It first checks with a SELECT query whether the supposedly new data fragment already exists in the corresponding table. If not, the fragment is added with an INSERT. If it already exists, its existing ID is used to create the relationship. The same duplicate detection is applied to the relationships as well: since the same data fragments can occur in different leaks, it is common for a fragment to exist already while its relationship to the new leak does not.
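The SELECT-before-INSERT approach can be sketched as follows. This is a simplified illustration, not the original import script (which, judging by the PHP settings mentioned below, is not written in Python): the schema is hypothetical, stores full addresses without a separate domain table, uses non-unique lookup indices instead of UNIQUE constraints, and assumes source IDs already exist.

```python
import sqlite3
from typing import Iterable, Tuple

# Simplified, hypothetical schema with non-unique lookup indices.
SCHEMA = """
CREATE TABLE tbl_mail (id INTEGER PRIMARY KEY, address TEXT NOT NULL);
CREATE TABLE tbl_password (id INTEGER PRIMARY KEY, value TEXT NOT NULL);
CREATE TABLE tbl_mail_password (mail_id INTEGER NOT NULL, password_id INTEGER NOT NULL);
CREATE TABLE tbl_source_mail (source_id INTEGER NOT NULL, mail_id INTEGER NOT NULL);
CREATE INDEX idx_mail_address ON tbl_mail (address);
CREATE INDEX idx_password_value ON tbl_password (value);
CREATE INDEX idx_mail_password ON tbl_mail_password (mail_id, password_id);
CREATE INDEX idx_source_mail ON tbl_source_mail (source_id, mail_id);
"""


def get_or_create(conn: sqlite3.Connection, table: str, column: str, value: str) -> int:
    """SELECT first and INSERT only if the value is new; return the row ID."""
    row = conn.execute(f"SELECT id FROM {table} WHERE {column} = ?", (value,)).fetchone()
    if row is not None:
        return row[0]
    return conn.execute(f"INSERT INTO {table} ({column}) VALUES (?)", (value,)).lastrowid


def link(conn: sqlite3.Connection, table: str, left: str, right: str,
         ids: Tuple[int, int]) -> bool:
    """Create a relation unless it already exists; return True if it was new."""
    exists = conn.execute(
        f"SELECT 1 FROM {table} WHERE {left} = ? AND {right} = ?", ids).fetchone()
    if exists:
        return False
    conn.execute(f"INSERT INTO {table} ({left}, {right}) VALUES (?, ?)", ids)
    return True


def import_combo_list(conn: sqlite3.Connection, source_id: int,
                      lines: Iterable[str], delimiter: str = ":") -> int:
    """Import mail:password lines; return the number of new mail/password relations."""
    new_relations = 0
    for line in lines:
        line = line.rstrip("\r\n")
        if delimiter not in line:
            continue  # malformed record, left to the normalization step
        mail, password = line.split(delimiter, 1)
        mail_id = get_or_create(conn, "tbl_mail", "address", mail)
        password_id = get_or_create(conn, "tbl_password", "value", password)
        if link(conn, "tbl_mail_password", "mail_id", "password_id", (mail_id, password_id)):
            new_relations += 1
        # The fragment may already exist while its relation to this leak is new.
        link(conn, "tbl_source_mail", "source_id", "mail_id", (source_id, mail_id))
    conn.commit()
    return new_relations
```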

Because duplicates are detected and discarded, fewer and fewer new data fragments need to be inserted as the database grows. This speeds up imports, and over time duplicate detection increasingly prevents database bloat.

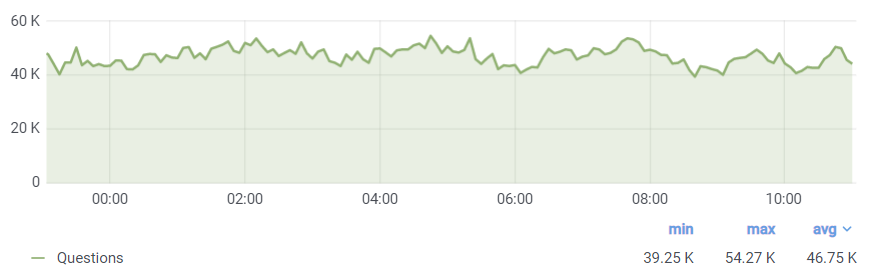

Experience shows that such an import does not automatically take advantage of the multi-core architecture of modern CPUs. With a single thread, the load stays at a few percent of the total system capacity and performance is only moderate. A multi-threaded import should therefore be the goal. It is worth increasing the number of threads to about 90% of the number of CPU cores, so that most cores each carry the load of one thread. This can multiply the import speed.
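The 90% rule can be sketched with a thread pool that distributes chunks of a combo list. This is an illustration under assumptions: `import_chunk` is a hypothetical callback that imports one chunk and returns the number of processed records, and it should open its own database connection (a `sqlite3` connection, for example, may not be shared across threads by default). In CPython, threads mainly help while workers wait on the database; for CPU-bound parsing, `ProcessPoolExecutor` would be the alternative.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Optional, Sequence


def worker_count(fraction: float = 0.9) -> int:
    """About 90% of the logical CPUs reported by the OS, but at least one."""
    return max(1, int((os.cpu_count() or 1) * fraction))


def chunked(items: Sequence[str], size: int) -> List[Sequence[str]]:
    """Split the records into consecutive chunks of at most `size` lines."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def parallel_import(lines: Sequence[str],
                    import_chunk: Callable[[Sequence[str]], int],
                    chunk_size: int = 10_000,
                    workers: Optional[int] = None) -> int:
    """Run import_chunk on all chunks in parallel; return the summed results."""
    workers = workers or worker_count()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(import_chunk, chunked(lines, chunk_size)))
```

On the 48-thread system described below, `worker_count()` would yield 43 workers.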

It is also worth optimizing the settings of the web server, PHP, and the database. All settings for connections, memory, and index behavior should be considered. Since a large number of database statements are executed within a very short time, even minimal optimizations can have an enormous impact over a longer period.

On an HP DL380 G9 with two Intel Xeon E5-2680 v3 CPUs, 256 GB RAM and RAID 5 with SSDs, up to 300,000 records per minute can be processed after optimization. The system has 48 logical cores (2 × 12 physical cores with Hyper-Threading). This allows the import of up to 432 million records per day. A speed of this magnitude is recommended to keep up with the rapid pace of new leak and combo list releases.
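The daily figure follows directly from the per-minute rate, which also corresponds to a sustained rate per second:

```latex
\begin{aligned}
300\,000\ \tfrac{\text{records}}{\text{min}} \times 60\ \tfrac{\text{min}}{\text{h}} \times 24\ \tfrac{\text{h}}{\text{day}} &= 432\,000\,000\ \tfrac{\text{records}}{\text{day}} \\
300\,000\ \tfrac{\text{records}}{\text{min}} \div 60\ \tfrac{\text{s}}{\text{min}} &= 5\,000\ \tfrac{\text{records}}{\text{s}}
\end{aligned}
```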

As soon as CPU utilization exceeds 90%, the efficiency of the indices should be checked again. If no further optimization is possible, the system has reached its limit.

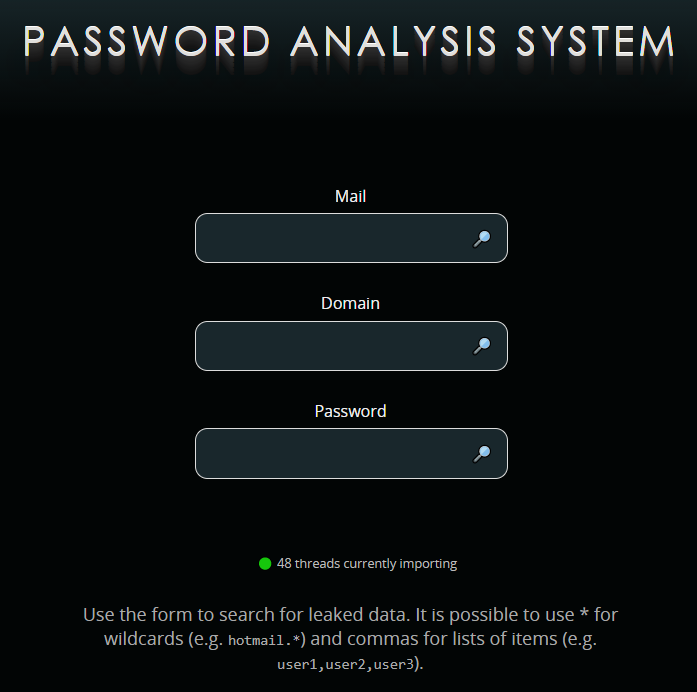

Once the data has been imported, it can be queried. For maximum convenience, it is worthwhile to develop a web frontend in which records can be queried via a form. It can display leaked login data as well as their associations with the corresponding leaks. To prevent misuse, passwords should be masked and access should be logged.
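Masking and access logging can be combined in the function that prepares results for display. A minimal sketch, assuming a masking policy that shows only the first and last character; the function names and the logger name are hypothetical. No handler is configured here; attaching one such as `logging.FileHandler` is needed to persist the audit trail.

```python
import logging
from typing import List

# Audit logger for frontend lookups (hypothetical name).
audit_log = logging.getLogger("leak_frontend.audit")


def mask_password(password: str, visible: int = 1) -> str:
    """Keep `visible` characters at each end and replace the rest with '*'."""
    if visible <= 0 or len(password) <= 2 * visible:
        return "*" * len(password)
    hidden = len(password) - 2 * visible
    return password[:visible] + "*" * hidden + password[-visible:]


def render_result(user: str, mail: str, passwords: List[str]) -> List[str]:
    """Log who queried which address, then return only masked passwords."""
    audit_log.info("user=%s queried mail=%s results=%d", user, mail, len(passwords))
    return [mask_password(pw) for pw in passwords]


print(render_result("analyst", "alice@example.com", ["hunter2"]))  # ['h*****2']
```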

The simple data structure of the tables means that targeted queries can be implemented very quickly. Querying specific mail addresses or less popular domains is possible within a few seconds.
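A targeted lookup is a short chain of joins over the intermediate table. The sketch below assumes a hypothetical layout in which mail addresses are stored as a local part plus a domain ID; the sample rows are purely illustrative. Bound parameters (`?`) keep input from the web form out of the SQL text.

```python
import sqlite3
from typing import List

# Minimal demo schema and illustrative sample rows (hypothetical columns).
DEMO = """
CREATE TABLE tbl_domain (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE tbl_mail (id INTEGER PRIMARY KEY, local_part TEXT NOT NULL, domain_id INTEGER NOT NULL);
CREATE TABLE tbl_password (id INTEGER PRIMARY KEY, value TEXT NOT NULL);
CREATE TABLE tbl_mail_password (mail_id INTEGER NOT NULL, password_id INTEGER NOT NULL);
INSERT INTO tbl_domain VALUES (1, 'example.com');
INSERT INTO tbl_mail VALUES (1, 'alice', 1), (2, 'bob', 1);
INSERT INTO tbl_password VALUES (1, 'hunter2'), (2, 'letmein');
INSERT INTO tbl_mail_password VALUES (1, 1), (1, 2), (2, 2);
"""


def passwords_for_mail(conn: sqlite3.Connection, address: str) -> List[str]:
    """Return all passwords linked to one mail address."""
    local_part, _, domain = address.rpartition("@")
    rows = conn.execute(
        """
        SELECT p.value
        FROM tbl_mail AS m
        JOIN tbl_domain AS d ON d.id = m.domain_id
        JOIN tbl_mail_password AS mp ON mp.mail_id = m.id
        JOIN tbl_password AS p ON p.id = mp.password_id
        WHERE m.local_part = ? AND d.name = ?
        ORDER BY p.value
        """,
        (local_part, domain),
    )
    return [value for (value,) in rows]


def mails_for_domain(conn: sqlite3.Connection, domain: str) -> List[str]:
    """Return all stored mail addresses of one domain."""
    rows = conn.execute(
        "SELECT m.local_part || '@' || d.name FROM tbl_mail AS m "
        "JOIN tbl_domain AS d ON d.id = m.domain_id WHERE d.name = ? ORDER BY 1",
        (domain,),
    )
    return [address for (address,) in rows]


conn = sqlite3.connect(":memory:")
conn.executescript(DEMO)
print(passwords_for_mail(conn, "alice@example.com"))  # ['hunter2', 'letmein']
print(mails_for_domain(conn, "example.com"))          # ['alice@example.com', 'bob@example.com']
```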

Complex queries (e.g. with regular expressions) and queries with large result sets (e.g. all mail addresses with the top-level domain .com) can be resource-intensive. Even on the server system described above, such extreme queries can take several hours.

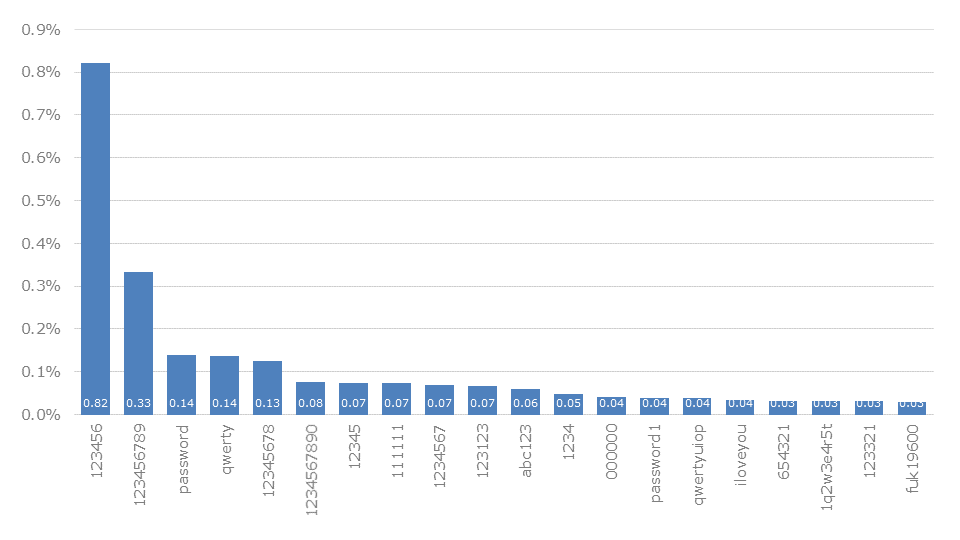

Beyond direct queries for individual records, advanced statistics can be computed, for example average password lengths or prioritized word lists for dictionary attacks. We make the latter in particular available in our public GitHub repository.
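Both statistics can be derived from the stored passwords. The sketch below assumes that "prioritized" means ordered by how often a password occurs across records; this is an illustrative choice, not necessarily how the published word lists are built. The function name is hypothetical, and on the real database the counting would typically run as an SQL aggregation over the intermediate tables.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def password_statistics(passwords: Iterable[str], top: int = 10) -> Tuple[float, List[str]]:
    """Return the average password length and a frequency-ordered word list."""
    counts = Counter(passwords)
    total = sum(counts.values())
    if total == 0:
        return 0.0, []
    average = sum(len(pw) * n for pw, n in counts.items()) / total
    # most_common() orders by count; ties keep first-seen order.
    wordlist = [pw for pw, _ in counts.most_common(top)]
    return average, wordlist


sample = ["123456", "password", "123456", "qwerty", "123456", "password"]
average, wordlist = password_statistics(sample, top=2)
print(round(average, 2), wordlist)  # 6.67 ['123456', 'password']
```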

Evaluating password leaks brings several advantages. On the one hand, one’s own vulnerability can be determined and reduced. On the other hand, the acquired knowledge can be used in the context of red teaming tests.

Before these benefits can be realized, however, various hurdles must be overcome. Finding and collecting high-quality leaks in a timely manner can be difficult. Then the various file formats must be normalized so that the import can run without incident. Only in the last step does the effort pay off through queries and evaluations.

Such a task is not suitable for every company, not even in the security sector, because such a project cannot be run on the side. But those who take on the effort can build a powerful tool to fall back on in the future.
