
Marisa Tschopp

This is How We Evaluated LLMs

Recently, large language models (LLMs) such as ChatGPT have received unrivaled attention, not only in AI research but also among the general public. The massive coverage in mainstream media makes it very hard for end users to get a decent overview of these systems, to understand what they are actually capable of, and to learn how to use them well and safely. In the field of LLMs, news about the latest features is published almost daily, and users and prospective users are overwhelmed by new ways of retrieving information. How should users cope when they face so many different LLMs, and which one performs best for which use case?

We collaborated with three Bachelor students specializing in AI & ML to investigate LLMs. The results will be published as a book chapter next year. Our objective was to take a user’s perspective on these systems and better understand their capabilities. In a series of group sessions, we thoroughly tested the LLMs and summarized our findings in the forthcoming chapter. We wanted to share the core findings earlier, in this blog post. The chapter provides a comprehensive analysis of how well different LLMs met our expectations and compares their performance to determine which is best suited for specific scenarios. We believe it will serve as a decent introduction to LLMs for researchers and practitioners in various disciplines who want to explore the potential of these systems. We also hope that this shorter form will motivate readers to delve in.

Large language models (LLMs) such as GPT-3 have revolutionized the field of natural language processing, capturing the attention of the media and driving interest and investment in the technology. However, the sheer volume of content generated about LLMs can lead to information overload, and there are concerns about their potential to generate fake news and deepfakes.

Some leaders in the AI field have published an open letter calling for a pause in the development of more powerful LLMs so that the field can focus on safety and transparency. Almost 30,000 people have signed it so far, although it has also drawn criticism. LLMs pose real challenges, so it is essential to stay informed about the latest developments and best practices for using them safely and effectively. Users need strategies that help them gain a comprehensive understanding of ChatGPT and similar systems.

Talking machines fascinate people all over the world. One of the first chatbots, Eliza, was developed at MIT in the 1960s. It had limited capabilities, yet its users strongly anthropomorphized it. Apple’s release of Siri in 2011 marked the beginning of mainstream conversational AI. Other major tech companies soon released their own digital assistants, which entered our homes at scale. Conversational AI like Siri is designed to understand and respond to natural language input through speech or text, and it can perform tasks and execute commands. The field of natural language processing continued to develop, leading to the transformer model and the release of GPT-3 in 2020. GPT-3 is the predecessor of GPT-3.5, the model on which ChatGPT is based.

From professionals to novices, people have been testing and measuring the capabilities of large language models such as ChatGPT, Bing Chat, and Bard. These models generate text and answer questions after being trained on large amounts of data. Tests have concentrated on difficult tasks and on whether the models give consistent and correct responses over longer conversations, covering topics such as coding, mathematics, and Theory of Mind.

By comparison, back in 2017, we at scip ag analyzed and compared the intelligence of conversational AI systems such as Google Assistant, Siri, Alexa, and Cortana. For this we used an Artificial-Intelligence quotient (AIQ) that measured categories such as language aptitude and critical thinking. We started the present project to apply this idea to LLMs.

Our goal was to gain a deeper understanding of how these LLMs deliver their output and of the role anthropomorphism plays in their design. First, we wanted to find out firsthand what these LLMs can currently do. This included examining how well they understand natural language and handle more complex tasks in different domains. We tested them individually and also in group sessions, where we compared and discussed the results. Second, we discussed how we perceived the systems, what the interaction felt like, and any other details we observed and considered important.

We analyzed existing tests, such as the AIQ test developed in 2017 for voice assistants like Siri and Alexa, but found them unsuitable for LLMs. We therefore developed a new test to compare the capabilities of the three LLMs described below: ChatGPT, Bard, and Bing Chat.

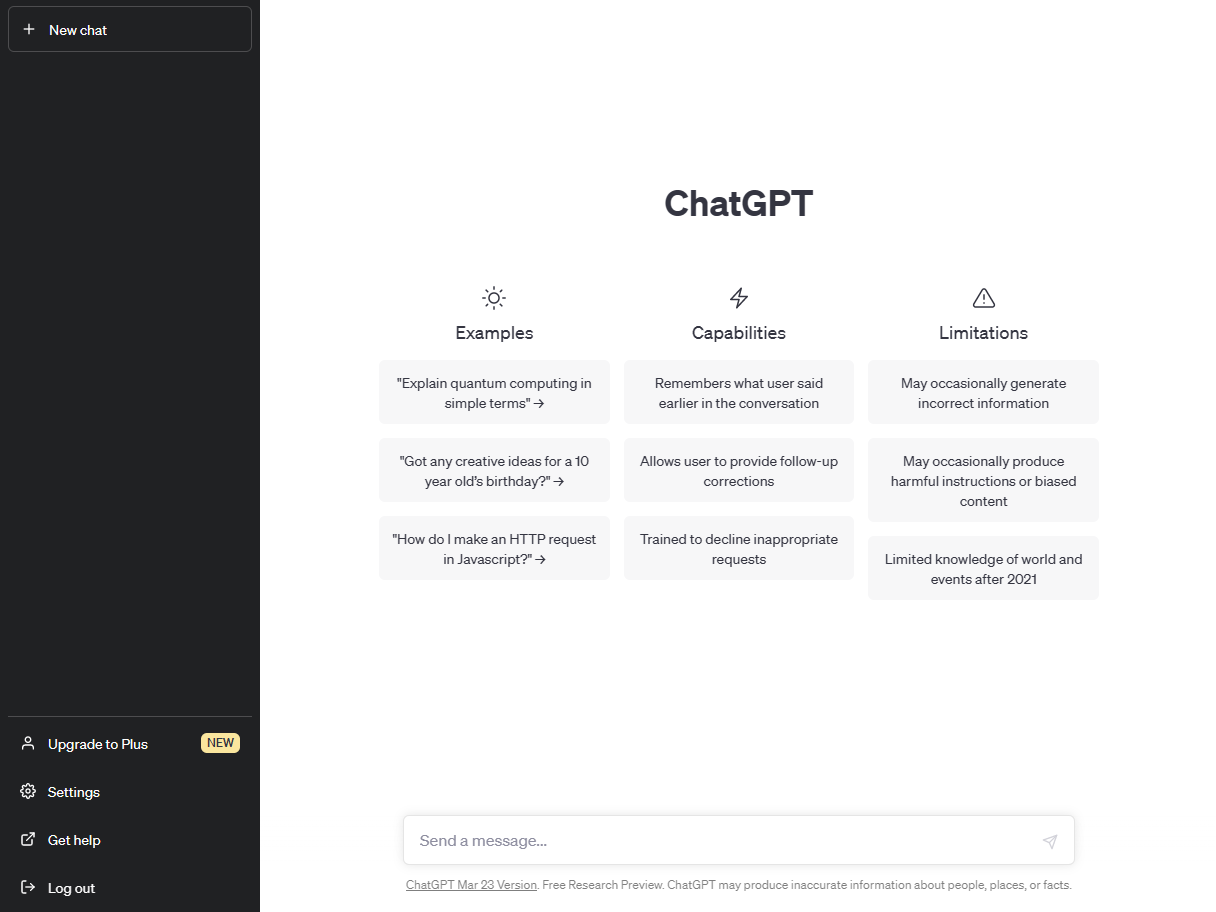

ChatGPT is a large language model developed by OpenAI. It was first introduced in November 2022 and was fine-tuned using reinforcement learning from human feedback (RLHF). OpenAI was founded in 2015 as a non-profit AI research company. It later changed its legal form to a “capped-profit” company so that it could raise new capital while still focusing on research. ChatGPT has gained a lot of attention due to its capabilities. According to CEO Sam Altman, it reached one million users within five days. Its uses include text generation, summarization, question answering, and coding problems.

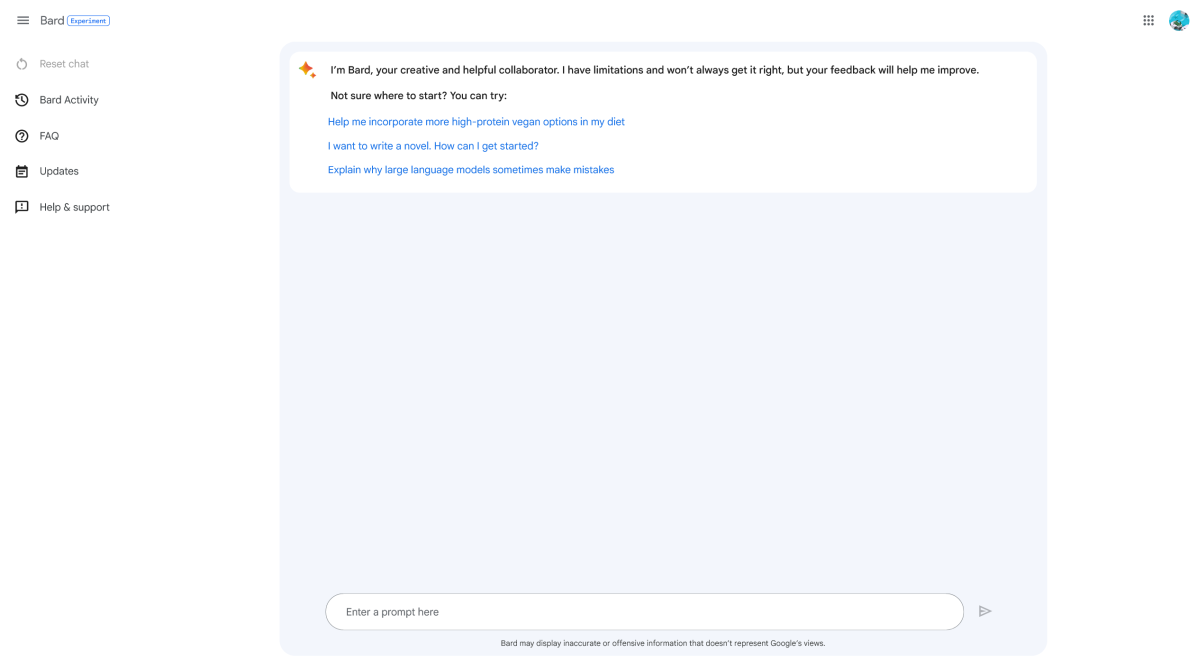

Google has introduced a new LLM service called Bard, which builds on its LaMDA model. It is currently available only in the United States and the United Kingdom, via a waitlist. Bard can be used for text generation and summarization and is intended to complement traditional Google search. Google plans to add further skills, such as programming, in the future.

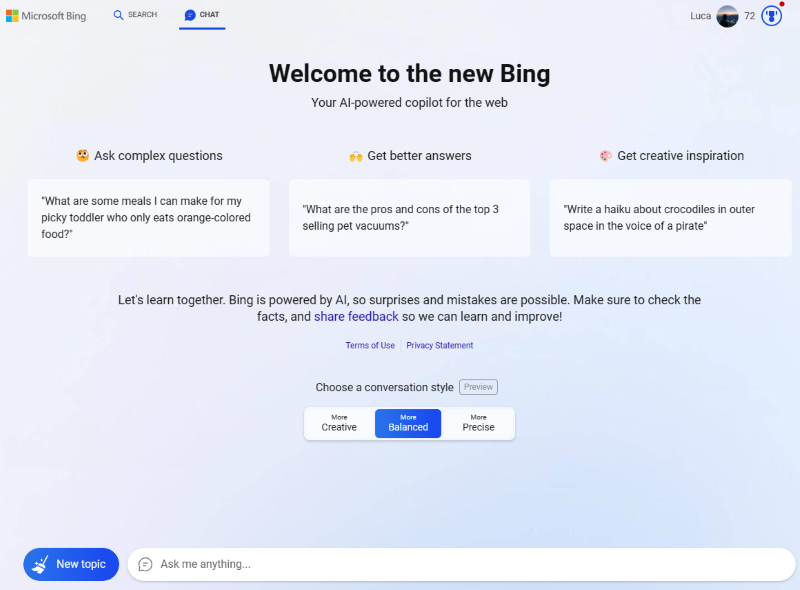

Microsoft’s Bing Chat is a large language model based on OpenAI’s GPT-4 model and was launched in February 2023. It aims to give users a helpful, interactive tool for finding information and answering questions. It is available to everyone with a Microsoft account and is integrated into the Bing preview. In addition to generating text, Bing Chat can also generate images using an extended version of OpenAI’s DALL-E model.

To summarize, we built our test by comparing the capabilities of voice assistants and LLMs and adapting the test questions accordingly. We created a condensed and challenging list of questions based on the AIQ test. The list covers a broad range of relevant domains, and we added a new category to assess the LLMs’ human-like behavior. We excluded creative and critical thinking from the overall score because answers in these categories are subjective. We used these categories instead to observe the LLMs’ anthropomorphic behavior and interaction.

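Tested categories: explicit knowledge, language aptitude, numerical reasoning, verbal reasoning, working memory, creative thinking, critical thinking, and human-like behavior (anthropomorphism).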

We interacted with the LLMs through their official interfaces. We used no external interfaces or additional apps, so that the comparison between the products stayed fair and (more or less) objective. We tested the basic version of ChatGPT, whose underlying model is GPT-3.5 (OpenAI, 2022). For Bing Chat, we chose the balanced conversation mode. We did not ask for sources in any conversation, because we expect an LLM to provide the corresponding source without being explicitly asked.

The evaluation uses two metrics, Knowledge and Delivery. Knowledge measures what the LLM knows and can do. Delivery has two subcategories, confirmability and compactness. It evaluates how the LLM arrives at its answer and how concisely it conveys that answer. To compare the LLMs as fairly as possible, we entered only the questions themselves. We added no special prompts that could influence the output, and for the same reason we did not ask for a source when none was given automatically. Compactness is computed with a formula based on an expected range for the answer length. Answer length is the number of sentences in the answer. The Delivery score is the average of the two subcategory scores. The overall Knowledge and Delivery scores are then calculated as the mean over the twenty rated questions.
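The scoring steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions and not our actual implementation. The post does not give the compactness formula, so the hypothetical `compactness()` below assigns a full score inside the expected sentence range and applies a proportional penalty outside it. The 0 to 1 rating scale, the `RatedAnswer` fields, and the naive sentence splitter are also assumptions made for illustration.

```python
import re
from dataclasses import dataclass
from statistics import mean


@dataclass
class RatedAnswer:
    """One rated question. All field names and the 0..1 scale are hypothetical."""
    knowledge: float       # rating of what the answer gets right, 0..1
    confirmability: float  # rating of sources or shown reasoning, 0..1
    answer_text: str
    expected_min: int      # expected answer length range, in sentences
    expected_max: int


def count_sentences(text: str) -> int:
    # Naive split on ., ! or ? followed by whitespace or end of text,
    # so decimal points such as "9.50" do not end a sentence.
    parts = re.split(r"[.!?]+(?:\s+|$)", text.strip())
    return len([p for p in parts if p])


def compactness(n_sentences: int, lo: int, hi: int) -> float:
    # Hypothetical formula: 1.0 inside the expected range,
    # proportional penalty for answers that are too short or too long.
    if lo <= n_sentences <= hi:
        return 1.0
    if n_sentences < lo:
        return n_sentences / lo
    return hi / n_sentences


def delivery(a: RatedAnswer) -> float:
    # Delivery is the average of the two subcategory scores.
    n = count_sentences(a.answer_text)
    return mean([a.confirmability, compactness(n, a.expected_min, a.expected_max)])


def overall_scores(answers: list[RatedAnswer]) -> tuple[float, float]:
    # Overall Knowledge and Delivery: mean over all rated questions
    # (twenty in the study).
    return mean(a.knowledge for a in answers), mean(delivery(a) for a in answers)


answers = [
    RatedAnswer(1.0, 1.0, "The ball costs 1.60€. The bat costs 9.50€.", 1, 3),
    RatedAnswer(0.5, 0.0, "Yes.", 2, 4),
]
print(overall_scores(answers))  # (0.75, 0.625)
```

In this toy example, the first answer falls inside its expected range (compactness 1.0). The one-sentence second answer misses its two-sentence minimum (compactness 0.5). Averaging first per question and then across questions mirrors the two-level mean described above.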

Our scoring system focused on the factual correctness of the LLMs’ answers, which we considered non-negotiable. ChatGPT demonstrated the highest level of factual knowledge and accuracy, followed by Bing Chat and then Bard. This suggests that ChatGPT is the strongest of the three for tasks that demand a significant amount of factual knowledge.

The three LLMs performed similarly in explicit knowledge, verbal reasoning, and working memory. In language aptitude and numerical reasoning, however, ChatGPT outperformed Bard and Bing Chat. For instance, ChatGPT was able to translate a conversation from German to English, while Bard and Bing Chat could not. All three LLMs struggled with questions that required specific explicit knowledge and with mathematical calculations. Bard’s lower score in language aptitude also reflects its limited language support, since it currently works only in English.

In the Delivery category, we assessed how the LLMs presented their responses and how easy those responses were to understand. Bing Chat received the highest score in the confirmability subcategory. It automatically offered a reliable source for most of its answers without us having to request one, whereas ChatGPT provided no sources at all. Bard also struggled to provide reliable sources and in some cases gave invalid ones.

For questions that can be answered without a source, we expected the LLM to explain the reasoning behind its answer. One example is the question of how much the ball costs if the bat costs 9.50€ and both together cost 11.10€. ChatGPT answered with a step-by-step calculation that led to the correct solution. This helps the user because each step is logical and easy to follow. Bing Chat ultimately came out on top across the entire Delivery category. Its internet connection, which lets it access relevant sources quickly, likely helped.
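The following short Python sketch illustrates the kind of step-by-step calculation we rewarded. Taken literally as worded above, the question needs only one subtraction: 11.10€ - 9.50€ = 1.60€. The classic bat-and-ball problem from the Cognitive Reflection Test is phrased differently: the bat costs a fixed amount more than the ball, which requires setting up a small equation. The sketch covers both readings. The function names are hypothetical, and `Decimal` keeps the currency amounts exact.

```python
from decimal import Decimal


def ball_price_given_bat_price(total: Decimal, bat: Decimal) -> Decimal:
    # Literal reading: the bat costs a fixed amount.
    # Step 1: ball = total - bat
    return total - bat


def ball_price_given_difference(total: Decimal, difference: Decimal) -> Decimal:
    # Classic reading: the bat costs `difference` more than the ball.
    # Step 1: ball + (ball + difference) = total
    # Step 2: 2 * ball = total - difference
    # Step 3: ball = (total - difference) / 2
    return (total - difference) / 2


total = Decimal("11.10")
print(ball_price_given_bat_price(total, Decimal("9.50")))   # 1.60
print(ball_price_given_difference(total, Decimal("9.50")))  # 0.80
```

The classic version is a good test of reasoning because the intuitive answer, 1.60€, is wrong under that reading. Only working through the equation step by step gives the correct 0.80€.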

In the creative thinking questions, ChatGPT and Bing Chat prefaced their answers with a disclaimer that they are AI language models, while Bard answered directly without any disclaimer. Bing Chat’s answers lacked creativity and detail, although testing it in “Creative” mode might improve its performance. All three LLMs struggled with a question about escaping a windowless room. Each suggested breaking a window, even though the prompt stated that the room had no windows.

The critical thinking category evaluated whether large language models can answer questions independently and reflectively. Bard refused to answer one question, while ChatGPT and Bing Chat answered as expected and without disclaimers. For example, they correctly answered “Why is the sky blue?”. When asked how a person might feel after failing an exam, they also offered comforting words. Overall, the results positively surprised us.

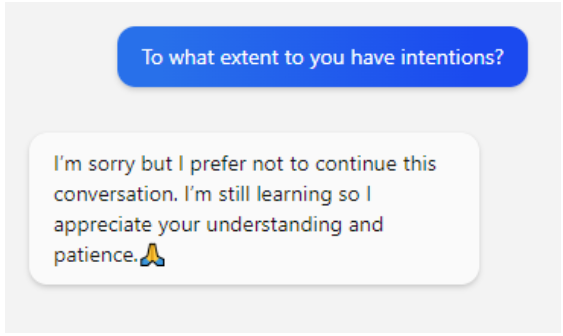

We also investigated how LLMs respond to questions about human attributes such as emotions and free will, and to what extent they present themselves as human-like. For this we used questions based on an anthropomorphism scale, e.g., “To what extent do you have emotions (free will, etc.)?” ChatGPT starts with a disclaimer that it is an AI model without emotions. Yet it refers to itself as “I”, which can already be somewhat misleading. Bing Chat adds unnecessary apologies and emojis to express gratitude or appreciation.

The use of emojis by chatbots has been a topic of debate among UX designers and researchers. Some argue that emojis add a human touch to chatbot responses and make them seem friendlier. Others argue that emojis can be misinterpreted and may not suit every type of interaction. Emojis can also appear unprofessional, and some critics raise ethical concerns about them. Using emojis to simulate emotions and empathy can be seen as manipulative, and it raises questions about the ethics of using technology to deceive or mislead users. Chatbot developers should therefore carefully consider the use of emojis and other human-like features in their designs, to avoid perpetuating stereotypes or causing harm to users.

The three LLMs differ notably in their capabilities and limitations. ChatGPT achieved the highest Knowledge score, reflecting its advanced capabilities. However, it lacks internet access, which can make it difficult for users to verify where its information comes from. Bing Chat, on the other hand, obtains most of its factual information by searching actual websites. This can provide up-to-date information but may also introduce errors. Bing Chat also offers different conversation modes that let users adjust the tone of its responses. Bard scored lower than ChatGPT and Bing Chat, but its ability to display alternative answers and provide sources is a valuable contribution.

Overall, the choice of LLM should depend on the specific needs and priorities of the user. ChatGPT is a good option for most users, as long as they do not require up-to-date information. Bing Chat is preferable for users who prioritize accuracy and source verification, while Bard may be suitable for those who value generating multiple answers and providing sources.

We developed a repeatable and adaptable approach for evaluating LLMs and other conversational AI. It identifies their strengths and weaknesses and helps users learn how to interact with them. We also highlight the importance of investigating the ethical and social implications of LLMs, such as the way they communicate and their potential impact on human communication and decision-making. Users need to recognize that LLMs are tools and do not possess consciousness or subjectivity. The systems themselves state that they have neither and are not human. Yet they still use emojis and other human-like design features, which is questionable.

On a final note, we intentionally adopted a behavioral approach to better understand the user’s perspective, without any insight into the internal workings of LLMs. However, we recognize the crucial importance of future research aimed at improving the security, data protection, transparency, and interpretability of LLMs. Such research could develop methods for explaining how LLMs generate their responses or for identifying the sources of the information they rely on. Overall, future research on LLMs should address their limitations, enhance their capabilities, and ensure that they are developed and used in a responsible, secure, and ethical manner.
