Enhancing Data Understanding

Rocco Gagliardi

Firewalls are among the oldest elements of network security. Over time they have been surrounded by many additional security systems, but they remain a central element of corporate security.

As explained by Marc Ruef in Firewall Rule Review – Ansatz und Möglichkeiten, scip AG performs firewall rulebase reviews using self-engineered models and tools; please refer to that lab for details.

In this lab, we will briefly discuss the output of our analysis.

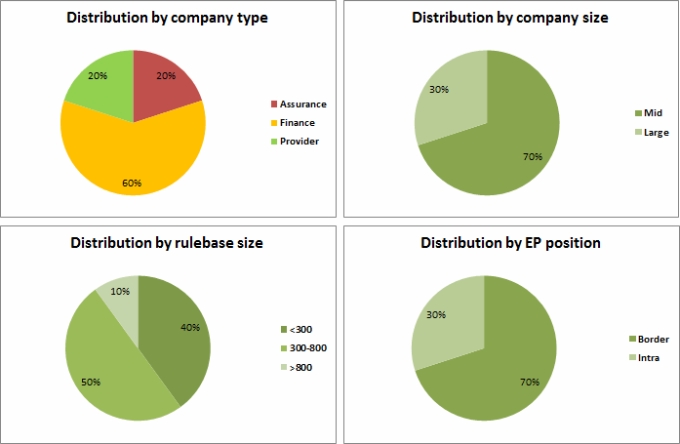

The numbers are calculated from a subset of 30 analyzed rulebases, selected by company type and size, rulebase size and enforcement point (EP) position (border firewall with internet exposure, second-level or internal firewall).

Our tool searches for vulnerabilities in each rule. Each vulnerability has several numerical values associated with it; some of them can be compared across different rulebases and are useful as indicators.

The vulnerability-weight (vw) tries to measure the severity of the identified vulnerability. For each finding, its vw is added to the cumulative rule-weight (rw) of the affected rule. The resulting rw gives a numerical indication of how problematic the rule is.
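The aggregation can be sketched in a few lines of Python. The `Finding` and `Rule` classes, the check names and the weights below are hypothetical; they only illustrate the idea that the rw of a rule is the sum of the vw of all findings raised against it, and are not the data model of our tools.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    """A single vulnerability identified in a rule (hypothetical model)."""
    check: str  # name of the check that fired (illustrative)
    vw: float   # vulnerability-weight: estimated severity of the finding


@dataclass
class Rule:
    """A firewall rule with the findings raised against it (hypothetical model)."""
    number: int
    findings: list[Finding] = field(default_factory=list)

    @property
    def rw(self) -> float:
        """Rule-weight: the sum of the vw of every finding on this rule."""
        return sum(f.vw for f in self.findings)


# Example with made-up weights: two findings on rule 12.
rule = Rule(12, [Finding("lazy-tracking", 3.0), Finding("lazy-objecting", 5.0)])
print(rule.rw)  # 8.0
```

A rule without findings simply gets an rw of 0, so clean rules stay "cold" when the values are later plotted as a heatmap.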

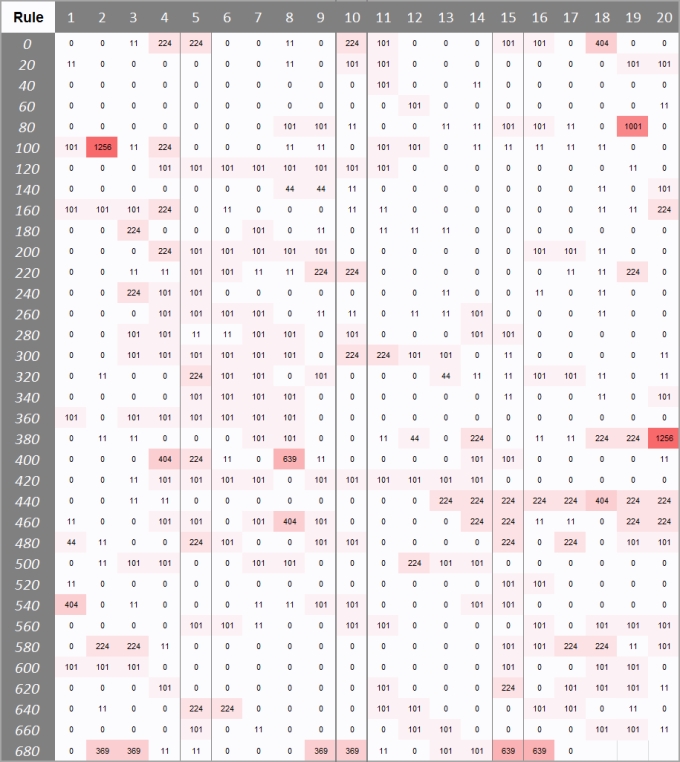

The simplest numerical indicator for each rule is its rw. The whole rulebase can be displayed as a heatmap, with the rw as the temperature indicator, as shown in the following table:

The heatmap may reveal problematic rule clusters, for example rules created for a specific application implementation. Examples of rule-cluster candidates for a closer look are:

Heatmaps are very rulebase-specific, but we can use the data to extract some indicators:

- max(rw)
- avg(rw)
- sum(rw)

The first two are comparable across different rulebases and are useful to benchmark a rulebase against its sector or against average rulebases. The last one makes sense only within the same rulebase and can be used to measure whether the rulebase evolves in the right or wrong direction.
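A minimal sketch of the three indicators, assuming the rw values of a rulebase are already available as a list of numbers (the values below are made up). It also shows the intended use of sum(rw): comparing two snapshots of the same rulebase rather than different rulebases.

```python
from statistics import mean


def rulebase_indicators(rule_weights: list[float]) -> dict[str, float]:
    """Compute max(rw), avg(rw) and sum(rw) for one rulebase."""
    if not rule_weights:
        return {"max": 0.0, "avg": 0.0, "sum": 0.0}
    return {
        "max": max(rule_weights),
        "avg": mean(rule_weights),
        "sum": sum(rule_weights),
    }


# Two snapshots of the same (hypothetical) rulebase, before and after a cleanup.
before = rulebase_indicators([0.0, 4.0, 8.0, 12.0])
after = rulebase_indicators([0.0, 4.0, 6.0])

# max and avg can be benchmarked across rulebases;
# sum is only meaningful when comparing the same rulebase over time.
trend = "improving" if after["sum"] < before["sum"] else "not improving"
print(before, after, trend)
```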

During the review, our tools perform hundreds of checks on the core elements of a rulebase (rules, objects, services). We have grouped these checks into the following Areas:

| Area | Description |
|---|---|
| Lazy-tracking | This check group basically tries to find out whether the rule is properly tracked. Administrators tend to aggregate protocols, especially administrative ones, into a few rules, then disable tracking for such a rule because SNMP traffic fills the log, even though the same rule also allows SSH. |
| Over-Traffic | Sometimes we find bi-directional rules, most of which are not really needed: normally only the traffic in one direction must be explicitly allowed, since a stateful firewall already permits the replies of an established connection. This check group recursively compares each rule against all remaining rules and points out all suspected full or partial rule pairs. |
| Readability | Not very frequent, but sometimes we encounter a long list of objects defined as source, destination or service. Sometimes the complete rule cannot be displayed on the monitor, forcing the user to scroll up and down to search for a specific object. After a while, the rule becomes a black box. |
| Lazy-objecting | Very frequent: objects are not strictly defined; even if only a single host needs access, the whole network is configured in the rule. This check group points out large objects by looking at the number of IPs or ports involved. |
| Temp/Test | Also frequent: objects created for quick problem resolution or for temporary access remain in place, sometimes buried in a group. |
| Mix | A mix of temporary/test objects with sensitive objects, such as administrative objects, is present in some rulebases and is very difficult to find. This check group looks at rule comments, object comments and known services to point out all suspicious combinations for a closer look. |
| Quality | Basically, we try to measure the quality of the processes used to manage the rulebases, looking at the content of comments, rule names, etc. Very often we encounter redundant or empty comments, or no reference to a management document, making the rule obscure. See Structuring the Rule Name in Checkpoint Firewall. |
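To make some of these Areas more concrete, here is a minimal Python sketch of three simplified checks: Lazy-objecting (counting the IPs covered by source and destination), Lazy-tracking (an untracked rule that also allows SSH) and Over-Traffic (rule pairs with mirrored source and destination). The `Rule` model, the 256-address threshold and the choice of `tcp/22` as sensitive service are assumptions for illustration only. The Over-Traffic sketch detects only full mirror pairs and ignores services, whereas the check described above also reports partial pairs.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Rule:
    """Simplified rule: a hypothetical model, not a vendor export format."""
    number: int
    sources: list[str]       # CIDR strings, e.g. "10.0.0.0/16"
    destinations: list[str]
    services: list[str]      # e.g. "tcp/22", "udp/161"
    tracked: bool = True


def address_count(cidrs: list[str]) -> int:
    """Number of IP addresses covered by a list of CIDR objects."""
    return sum(ipaddress.ip_network(c, strict=False).num_addresses for c in cidrs)


def lazy_objecting(rule: Rule, max_ips: int = 256) -> bool:
    """Flag rules whose source or destination covers more than max_ips addresses."""
    return (address_count(rule.sources) > max_ips
            or address_count(rule.destinations) > max_ips)


def lazy_tracking(rule: Rule, sensitive: tuple[str, ...] = ("tcp/22",)) -> bool:
    """Flag untracked rules that also allow a sensitive service such as SSH."""
    return not rule.tracked and any(s in rule.services for s in sensitive)


def over_traffic(rules: list[Rule]) -> list[tuple[int, int]]:
    """Return pairs of rules whose source and destination mirror each other."""
    pairs = []
    for i, a in enumerate(rules):
        for b in rules[i + 1:]:
            if (set(a.sources) == set(b.destinations)
                    and set(a.destinations) == set(b.sources)):
                pairs.append((a.number, b.number))
    return pairs


rules = [
    # SNMP and SSH aggregated in one untracked rule, with a whole /16 as source.
    Rule(1, ["10.0.0.0/16"], ["192.168.1.10/32"], ["udp/161", "tcp/22"], tracked=False),
    # The mirrored rule for the "return" direction.
    Rule(2, ["192.168.1.10/32"], ["10.0.0.0/16"], ["udp/161"]),
]
print([r.number for r in rules if lazy_objecting(r)])  # [1, 2]
print([r.number for r in rules if lazy_tracking(r)])   # [1]
print(over_traffic(rules))                             # [(1, 2)]
```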

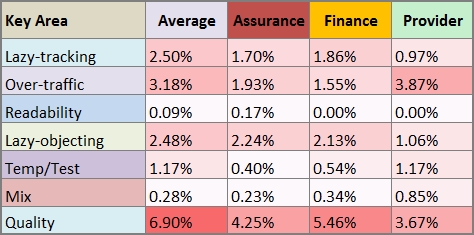

Key Area Indicators look a little deeper into the rulebase and are used to identify problem areas for closer inspection:

With the same data displayed as a chart, we can quickly check whether the rulebase performs better or worse than others in its class.
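One simple way to place a rulebase within its class is a percentile rank per Area against the reference rulebases. This is a sketch of our own choosing, not necessarily the method behind the chart: it assumes each Key Area Indicator is a ratio where higher means worse (for example, the share of rules with findings in that Area), and the peer values below are invented.

```python
from bisect import bisect_left


def percentile_rank(value: float, reference: list[float]) -> float:
    """Share (0-100) of reference rulebases with a strictly lower value."""
    if not reference:
        raise ValueError("reference set must not be empty")
    ordered = sorted(reference)
    return 100.0 * bisect_left(ordered, value) / len(ordered)


def benchmark(rulebase: dict[str, float],
              peers: dict[str, list[float]]) -> dict[str, float]:
    """Percentile rank of each Area indicator against the peer rulebases."""
    return {area: percentile_rank(v, peers[area]) for area, v in rulebase.items()}


# Hypothetical indicator values (higher = worse) for one rulebase and its peers.
rulebase = {"Lazy-tracking": 0.15, "Temp/Test": 0.02}
peers = {
    "Lazy-tracking": [0.05, 0.10, 0.20, 0.30],
    "Temp/Test": [0.01, 0.03, 0.04, 0.08],
}
print(benchmark(rulebase, peers))  # {'Lazy-tracking': 50.0, 'Temp/Test': 25.0}
```

A low rank means the rulebase does better than most of its class in that Area; a high rank points to where a closer look is worthwhile.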

For each Area, many checks are performed on each object. We calculate some Key Value Indicators that can be benchmarked across all analyzed rulebases and give us hints about possible directions for improvement:

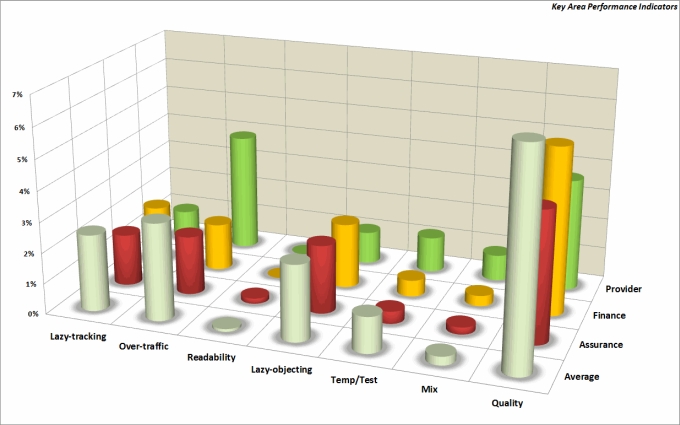

Displayed as a chart, the data shows at a glance which parts of the rulebase perform better or worse.

Maintaining the same vulnerability classification over the years is the biggest challenge for this class of tools.

If the number of checks or a check itself changes, the results may no longer be comparable and we would lose the entire historical series (sometimes it was necessary to realign old results with the new tools to keep the values consistent).

During the last decade of constant revisions, we have arrived at a set of consistent checks. While the checking mechanism remains basically the same, the parameters are constantly updated with each revision: if during an analysis we encounter a new parameter that affects a check, it is coded so that it can be reused in subsequent versions (the most common example is new, sometimes fancy, comments used to mark a rule as temporary).

This method reduces the need to (re-)normalize the data while the tool evolves.
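The parameter-driven approach can be illustrated with the temporary-rule example: the check logic stays fixed, while the list of markers grows from review to review. The regular expressions below, including the "until go-live" marker, are hypothetical examples, not the patterns used by our tools.

```python
import re

# Marker patterns for temporary rules (hypothetical examples). When a review
# turns up a new way of marking a rule as temporary, only this tuple grows;
# the check itself stays the same, so historical results remain comparable.
TEMP_PATTERNS: tuple[str, ...] = (
    r"\btemp(orary)?\b",
    r"\btest\b",
    r"\bremove after\b",
)


def is_temporary(comment: str, patterns: tuple[str, ...] = TEMP_PATTERNS) -> bool:
    """Return True if the rule comment matches any known temporary marker."""
    return any(re.search(p, comment, re.IGNORECASE) for p in patterns)


print(is_temporary("TEMP access for vendor"))    # True
print(is_temporary("Template for web servers"))  # False: word boundary avoids the match
print(is_temporary("access until go-live"))      # False: marker not yet known
# A newly encountered marker is added as a parameter, not as new logic:
print(is_temporary("access until go-live", TEMP_PATTERNS + (r"\buntil go-live\b",)))  # True
```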

Measuring objects with complex interdependencies is always a kind of alchemy and always produces a grainy representation of reality.

Once the process is tuned (assuming the distortion remains constant), reviewing an even larger rule base becomes not only possible but efficient, and metrics make it possible to steer improvements and measure them.

Thanks to the experience gained through years of revisions, after countless tests and improvements, we have reached a set of checks that help us measure and improve the quality and security of a rulebase.
