
Marisa Tschopp

Is Artificial Intelligence worth the Risk?

Trust can be seen as a psychological mechanism for coping with uncertainty, located somewhere between the known and the unknown (Botsman, 2017). On the individual level, trust is deeply ingrained in our personality. We are basically born with a tendency to trust or distrust people (or animals, or anything else). Humans (and arguably animals as well) can tell at a glance whether they trust a person or not. We look at facial expression, body posture or the context (background, surroundings, etc.) and compare these cues with memories or past experiences in a split second: I trust her, she reminds me of my grandmother.

Take, for example, the picture of an elderly woman with glasses (on the left side of the image). Do you trust her? Please rate on a scale from 1 (not at all) to 5 (totally).

Generally speaking, we tend to trust people who are more like ourselves (Zimbardo & Gerrig, 2008). One reason is that it is easier to predict the future behavior or reactions of people who are similar to us, which lowers the emotional risk of being hurt. What we do not know is how accurate our intuition is. Did you trust this woman? Maybe yes, because she is smiling and surrounded by children. Maybe not, because you were already expecting a trick, knowing that I (the author of this article) am a psychologist. If you ticked 1, your intuition was right. This woman is not very trustworthy. She died in prison several years ago, one of the most notorious female serial killers.

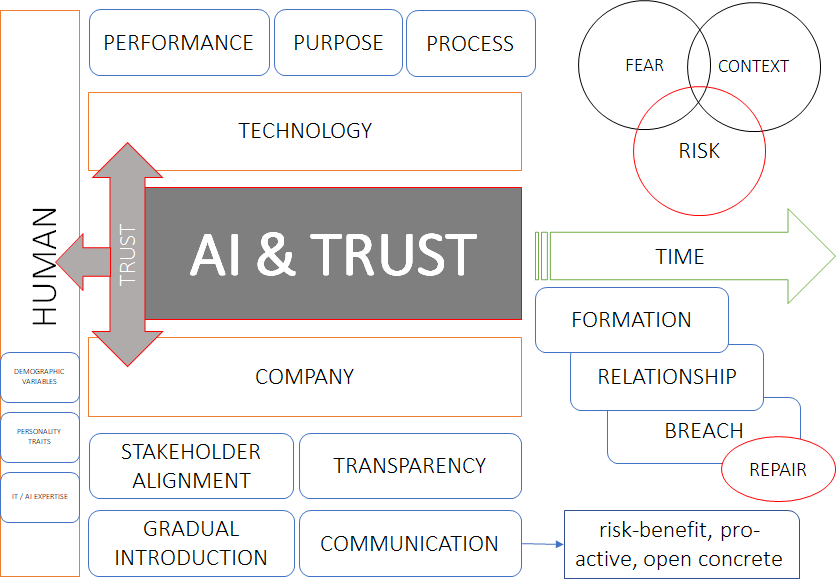

This first notion of trust is a fascinating yet quite mystical area, comparable to human consciousness: you know it is there and you can experience it, but there is no empirical evidence for it. However, trust is a multifaceted term and does not only comprise this initial stage of trust formation. The article “On trust in AI” gives a detailed overview of how to take a systemic approach towards trust in the AI context. It covers trust as a personality trait, trust as a process between two or more parties (the trustor-trustee relationship) and trust over time (context and factors from initial trust formation to a trust breach). In a nutshell, questions and definitions can focus on an individual, a relationship, a situation and/or a process.

Trust is indispensable to the prosperity and wellbeing of societies. For millennia, we developed trust-building mechanisms to facilitate interactions. But as they become increasingly digital, many traditional mechanisms no longer function well, hence trust breaks down. As a result, low levels of trust discourage us from engaging in new forms of interactions and constrain business opportunities. (AI & Trust track, AMLD 2019)

In the AI context, there are various reasons why trust has become a popular topic in research and practice. One is the lack of clear definitions of an AI's processes, its performance and, above all, its purpose with respect to the intentions of its provider. If artificial intelligence does offer great opportunities, trust seems to be the decisive factor in whether we can use its full potential.

Research on the role of trust (in academia, industry and the military) is booming, with the military being one of the great accelerators: automation and robots are already part of its daily operations because they offer life-saving opportunities. Automation and AI have the potential to spare humans dull, dangerous and dirty work (Hancock et al., 2011; Yagoda & Gillan, 2012). Trust seems to be the indispensable prerequisite, as captured in the phrase coined by Peter Hancock: “No trust, no use.” Our research department aims to investigate, and even challenge, this “no trust, no use” relationship. The Titanium Trust Report is a mixed-methods analysis investigating three major topics:

The qualitative part further explores the fears that lie beneath trust, as well as risk awareness and the role of context. The following image gives an overview of the variables included in the study (based on Hengstler et al., 2016; Botsman, 2017; Hancock et al., 2011):

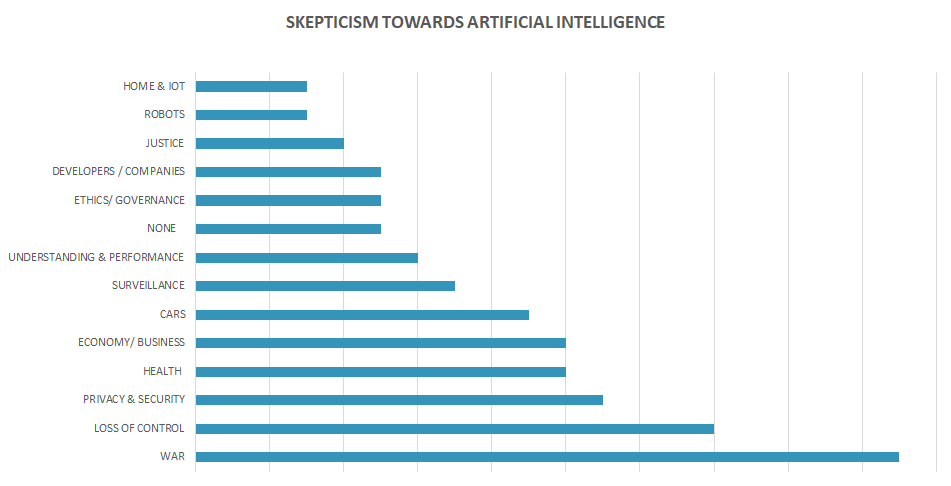

Results of the study will be published on the website incrementally, starting with the qualitative part. This lab report focuses on the question: What areas or applications of AI are you most skeptical about?

Data was collected from March to June 2019, and 111 participants took part in the online survey. The sampling strategy aims to represent the opinions and attitudes of the general public. Participants come from all over the world, with different professional backgrounds and varying knowledge of artificial intelligence. The gender distribution is fairly balanced, with more women than men taking part. Most participants hold a bachelor’s degree or higher.

Participants were given short descriptions of the two terms artificial intelligence (based on Russell & Norvig, 2012; Stone et al., 2016) and trust (based on Botsman, 2017). The survey is structured in three parts:

Answers to the open question “Are there specific applications or areas of use of AI you are most skeptical about?” were coded by a single coder (the author). Fourteen sub-categories were derived and then grouped into three main categories. One category, Uncategorized, collects uninterpretable answers such as “any” or “sorry, not qualified to answer”. Another category, containing only three answers, covers participants who expressed no skepticism towards AI. Categories were derived inductively from the answers given, following the procedures described by Mayring (2010). The distinctions between categories and their descriptions were discussed with two colleagues who are experts in information security but not trained in qualitative research.

The following tables show the category system derived from the qualitative data. The first main category, Impact on Society and Politics, reflects a macro-level perspective. The second main category contains technological factors, focusing on perceived characteristics of hardware and software. The third main category takes a micro-level perspective and contains factors related to the impact on human life and behavior (focusing on the individual).

| Impact on Society and Politics | Description |
|---|---|
| Surveillance | Skeptical about using data, tools and applications to monitor people and behaviors |
| Security & Privacy | Skeptical about the loss of privacy due to insufficient security |
| Legal & Justice | Skeptical about using AI in justice and law |
| Ethics & Governance | Skeptical about AI making the right (ethical) decision |
| War | Skeptical about the use of AI in warfare, especially weapons |

| Technological Factors | Description |
|---|---|
| Robots | Skeptical about AI in robots, incl. fear of robots |
| Home & IoT | Skeptical about AI in devices used in everyday life |
| Cars | Skeptical about the use of AI in transport, specifically autonomous driving |
| Developers & Vendors | Skeptical about the developers and companies that develop AI |
| Understanding & Performance | Skeptical about the actual performance of AI and how it is understood by the general public |

| Impact on Human Life and Behavior | Description |
|---|---|
| Healthcare | Skeptical about AI in healthcare and medicine |
| Economy & Business | Skeptical about the use of AI in business operations and its impact on the economy |
| Loss of Control | Fear of losing control over AI, including the emergence of AGI (artificial general intelligence) |

| Other | Description |
|---|---|
| Uncategorized | Uncategorized, uninterpretable answers |
| None | Not skeptical about AI |

Answers were often brief, containing only two or three words. For example, in the category War, some participants wrote “Military use makes me skeptical. Political use also concerns me”, whereas others only wrote “autonomous weapons” or “military”. The high number of participants gives an indication of the strength and weight of each category and allows comparisons, without permitting statistically significant conclusions.

War was clearly the area participants named most often, specifically with the term “autonomous weapons”. Participants mentioned both the scenario of uncontrollable killing machines and the fear of fatal errors caused by poor technical performance.

The military is a very risky application since logic driven decisions without human limits, or the misclassification of targets has enormous and irrevocable consequences. (Participant Titanium Trust Report 2019)

Loss of Control is the second-greatest concern. Unlike War, this category refers to no specific area of application, nor does it have a narrow focus such as privacy (loss of data ownership or similar). Instead, it reflects the general need to maintain control over decision-making processes in any area. It relates more to people's associations with artificial intelligence and their general attitude towards AI and technology.

Risk of AI tools (such as robots) developing their own “mind” which has happened already at Google. (Participant Titanium Trust Report 2019)

These statements mostly describe hypothetical “what if?” scenarios rather than actual experiences such as the one below. They do not concern applications intended by the developer (such as autonomous weapons or the use of data for personalized advertising) but, above all, unintended consequences.

Too much dependency on technology which can be a disaster (think of fully automated houses, a friend of mine recently got locked into his house during a power outage) (Participant Titanium Trust Report 2019)

The category Security & Privacy covers both devices and a political stance: the concern that the need for privacy is compromised by insufficient security measures on the providers' side, whether intentional or not. Here, the debate about the “transparent citizen” plays a role, as does the fear of manipulation and exploitation for economic or political reasons.

The most uncomfortable part is the unlimited access to environmental information. For instance, the mobile AI assistant is allowed to detect, analyze, and probably store the voice info; or, rumor has it that China-produced vacuum robot is capable to steal data from home wifi. This bidirectional environment-machine interaction is fascinating, but also leave us unprotected. (Participant Titanium Trust Report 2019)

In this category, participants elaborated in more detail than in the others, where often only two or three words were given.

AI in its entirety makes us vulnerable – simply because of the methods used for data privacy. Data is not yours anymore and it can make you (eg make life easier) or break you (you have an identified genetic defect and no one will insure you or your family) (Participant Titanium Trust Report 2019)

Other notable findings: only two of the 111 participants mentioned the fear of job loss or replacement (category Economy & Business). Surveillance and autonomous driving were mentioned explicitly so often that each was given its own category (Surveillance and Cars), rather than being assigned to Loss of Control or Security & Privacy. It is also noticeable that the statements in the category Understanding & Performance were particularly elaborate.

I’m skeptical about the average person’s interpretation of AI. I am a mathematician— I can easily understand the algorithms and error analysis for the methods used to implement AI. I see it as a tool to aid us that we can pick apart and program, not as an amorphous entity that can read minds. But these technical details are difficult for non-specialists to understand and lead to a lot of false hype or false interpretations in the media. (Participant Titanium Trust Report 2019)

The question “What are you most skeptical about?” yields some interesting results. They are not absolute, but they indicate where further research is needed and what to focus on in practice. The data clearly show that there is still great skepticism, especially regarding data privacy and ownership, and in high-risk situations where human lives are at stake, such as warfare and autonomous driving. However, most of these fears seem rather hypothetical and not based on facts or knowledge. On the one hand, this is to be expected. On the other hand, one can fear something without concretely knowing or understanding what it is, or whether the fear is justified. A child, for example, may be afraid of monsters: the fear is real, even if the monsters are not. How does a child stop fearing monsters? There are various ways, and education is one of them: learning, from evidence the child believes in, that monsters do not exist. The rationale here is that the child is already suffering from its fears. The fears expressed in this survey are largely future-oriented; only a few are based on actual experience and present fear. This does not mean the data is useless; quite the opposite. The answers express the needs and values of the participants. Recognizing these is fundamental to discussing, at a strategic level, how a problem can be solved or, in this case, how trust can be created. In a nutshell, the following needs are particularly important to the participants:

It’s not the AI [I am skeptical about]. It’s who is designing it and how it is being used behind the scene that deserves scrutiny and caution. (Participant Titanium Trust Report 2019)

Furthermore, participants mentioned that it is not about the technology but about the people behind it. Are they trustworthy, capable or diligent enough? Elsewhere in the Titanium Trust Report, this is also reflected in the question of whether participants trust the companies or the programmers who develop AI. Another question asked whom participants would prefer as a partner for developing an AI, and the answers also reflect a certain reluctance towards big tech companies: only 20% would choose one of the tech giants such as Google or Microsoft to develop an AI application. An impressive majority would prefer a higher education institution or a non-profit organization.

I am also skeptical of companies who want to use or develop AI for their business. They often exaggerate the capabilities of AI in order to make money, which feeds back into the media and the average person’s misconceptions. (Participant Titanium Trust Report 2019)

In short, the participants fear:

For a qualitative analysis, 111 participants is quite a high number. However, since the answers were often brief, a meaningful analysis was feasible. Choosing a qualitative approach proved useful, as the study aims to identify fields that are not obvious or that need further research. It was rather surprising that weapons and AI in warfare played such a large role, as this is a fairly exotic area of research and practice and not very visible to the public. One reason for this awareness could lie in the design of the survey: it explicitly mentioned dual-use research and explained to participants that the use of AI in warfare is a problematic topic. This could have biased the outcome.

Although participants had quite heterogeneous backgrounds, the fact that the study was initiated by a cybersecurity company may have biased the answers towards a stronger weighting of the category Security & Privacy. The greater depth of these answers indicates that this is a topic of expertise, which may not represent the knowledge and awareness of the general public.

The theme of loss of control can be found in almost all answers. How it can be empirically analyzed and separated from the other categories, and how it influences them, must urgently be addressed in further quantitative studies.

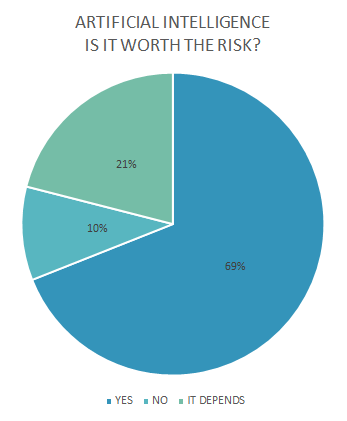

Participants in the survey are very aware of the risks that come with the use and deployment of artificial intelligence. It therefore seems legitimate to ask: Is it worth the risk?

Yes but only if the nonsense around AI stops and people see it as a tool rather than a god-like force. (Participant Titanium Trust Report 2019)

Only 10% state that the risk is too high and that efforts to further develop AI should be stopped, while 69% are convinced that the advantages outweigh the risks. The remaining participants, a particularly interesting group, are either fatalistic, arguing that we have no choice anyway, or state that AI must come with rules and regulations (comprising laws and ethics) as well as education.

This makes sense, as it is unrealistic to boycott AI per se. Whether to use AI may not be the right question to ask anyway; the question is not so much whether we use it, but how. On the provider and user side, this requires constant questioning and critical thinking, without cynicism. From a public and societal perspective, it requires public discourse and, in some cases, clear regulation, now. A dedicated and growing number of people are devoted to this area, for example in the European Commission's High-Level Expert Group on AI or the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. A great example of engaging and educating the public is the Science Year in Germany, an initiative of the Federal Ministry of Education and Research. For one year, it reports on this topic and educates the general public, focusing on facts in an enjoyable, fresh manner.

This report has investigated which areas or applications of artificial intelligence 111 participants with heterogeneous backgrounds are most skeptical about. The answers were evaluated, and categories were derived inductively. Based on the qualitative data, participants’ needs include physical safety, partnership, self-responsibility and choice. The follow-up questions that arise in an applied context concern practical applications: How can these fears be addressed? What can be done to meet each other’s needs? Only if these questions are discussed openly can future generations use AI to its full potential as a tool (a means to an end). Furthermore, it may be necessary to take a step back and question the assumed trust-usage relationship. It seems logical that users need to trust a technology and its provider in order to use it. On the other hand, trust is given away inflationarily: privacy policies go unread, and parents share pictures of their children on social media platforms known for data breaches. What forces, other than or alongside trust, drive human-AI interaction?

This study approaches trust and AI from an interdisciplinary perspective, focusing on the antecedents of trust and the trust-usage relationship. It aims to contribute in three ways. First, it examines theories and knowledge about trust and AI across disciplines, in academia as well as in practice, and thereby aims to expand knowledge in applied psychology, human-machine interaction and technology management. Second, the results can be useful for developers and from a management perspective, as well as for academics in interdisciplinary applied fields, who can use them to generate new hypotheses. Last but not least, the study is useful for the general public, who can engage with this critical issue at a low threshold by taking part in it. The research is conducted to raise key questions, and find answers, about the meaning of trust in the context of artificial intelligence. It aims to trigger interdisciplinary exchange and conversation with the general public.
