Human and AI Art

Marisa Tschopp

Why you cannot not anthropomorphize

I was certain that the more people know about AI, the less they anthropomorphize: the less they use human communication scripts, and the more they take a merely instrumental view of conversational AI (AI is just a tool). It seems I was proven wrong, and that when, why, and to what extent humans humanize machines is more complex than expected. This is the first part of our Anthropomorphism Series, which aims to better understand anthropomorphism, the tendency to see the human in non-human others.

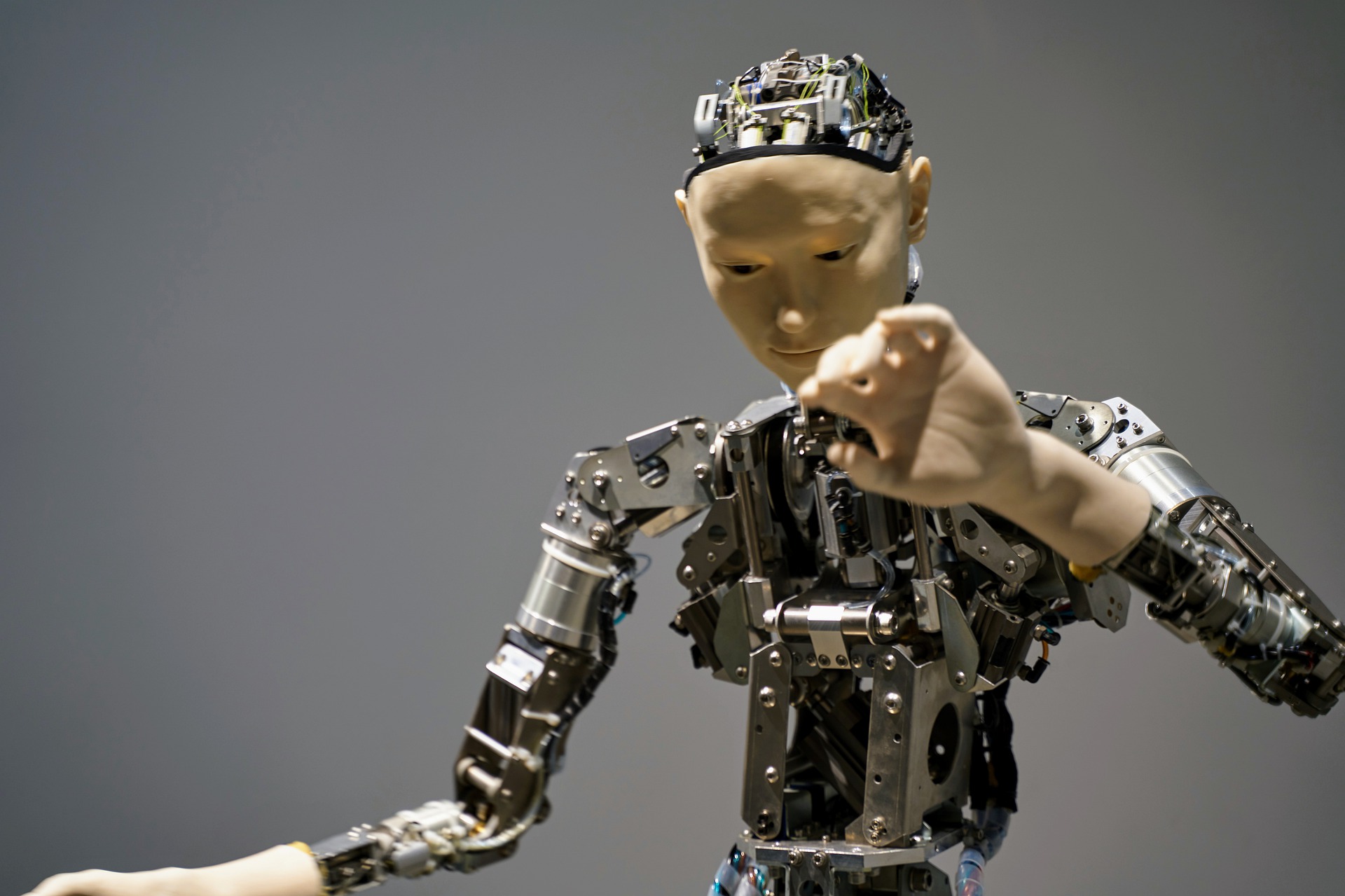

Anthropomorphism is when people humanize objects, deities, or animals: humans attribute human characteristics such as emotions or intentions to non-human agents. The "other" can be almost anything, visible and tangible or invisible: from the first teddy bear that dries your tears to your stupid computer who caught a virus or froze just before you finished your data analysis. Or a god: many Western societies depict God as a white male with a beard who reacts emotionally, caring or punishing depending on how you behave.

Humans have an “impressive capacity to create human-like agents […], of those that are clearly not human. [… which] is a critical determinant of how people understand and treat nonhuman agents […]” (Waytz et al., 2010, p. 58)

Anthropomorphism, as a form or extension of social cognition, can help us understand how people make sense of nonhuman agents, which in turn may lead to a deeper understanding of how we understand other people. Anthropomorphism comprises seeing or attributing both physical features and mental states (Waytz et al., 2010). Whether this tendency is good or bad remains open to discussion, but it is theorized to be a product of evolution. Seeing faces on the moon or seeking comfort in an invisible spirit does not hurt anyone. But when people fight wars because of a god's will, want to marry a chatbot, or attribute consciousness to robots, which by this logic would be responsible for their actions, it gets a bit messy.

Traditionally, the debate has been about whether, or to what extent, a plant or a god has human-like characteristics. According to Waytz and colleagues (2010), however, it is more interesting to explain and predict the variability in the process of anthropomorphizing: Who anthropomorphizes, and to what extent? Can people fall in love with a chatbot, the Eiffel Tower, or a hi-fi system?

Focusing on social robots, philosopher Mark Coeckelbergh outlines opposing views of the relationship between robots and humans and develops a hermeneutic, relational, and critical approach. He calls the two views naïve instrumentalism and uncritical posthumanism. Naïve instrumentalism, in essence, claims that machines are just tools, or slaves, as Joanna Bryson once wrote. At the other end of the spectrum lies uncritical posthumanism, which fully understands social robots as quasi-persons or others (Coeckelbergh, 2021). Admittedly, his article is heavy academic reading and hard to summarize in one paragraph, but it is more than worth reading. In essence, he claims that "robots are neither 'others' nor mere machines. Both views fail to understand how strong the entanglement of humans and robots really is, i.e., that it is an internal relation" (Coeckelbergh, p. 6, 2021).

Social robots are not mysterious phenomena, yet they are not the same as a screwdriver either. They are part of our human network or environment, and humans have some sort of relationship with these kinds of technologies (I deliberately leave aside the question of whether or not they involve AI, as it is not relevant here).

He concludes by noting how "exciting and problematic" anthropomorphizing through design features can be, and that designers, for instance, carry great responsibility here. For those who, like me, are new to the topic and not used to philosophers' style of argument, this paper is very valuable for understanding that academics write from different perspectives. They therefore make different normative claims about how we should deal with the fact that humans will anthropomorphize no matter how a company designs its product. The paper also discusses the responsibility of designers, who try to balance the benefits of anthropomorphic design against its risks, such as deception and its consequences.

Anthropomorphism is often used consciously (and not uncontroversially) as a communication strategy to make people feel emotionally closer to a target (machine, product, brand image, etc.), to motivate people, or to put them under moral pressure. Anthropomorphizing by design is a very effective strategy for manipulating human thoughts and actions:

Various studies have shown that people display more engagement with and trust in robots the more they humanize them (Ruijten et al., 2019; Hoff & Bashir, 2015). Furthermore, the persuasive power of a robot rises with its degree of anthropomorphism, as shown in some famous experiments in which humans watered a plant with orange juice (because the robot said so). While this example usually makes people laugh, other scenarios with dangerous or fatal outcomes are possible when machine decisions are too persuasive and are not critically evaluated by the human user (Hoff & Bashir, 2015).

It is recognized that anthropomorphism presents challenges, but ultimately the aim is ‘facilitating the integration of human-like machines in the real world’ […] anthropomorphism helps with reaching that goal. (Coeckelbergh, p. 2, 2021)

Anthropomorphic language can also be used as a kind of stylistic tool to attract attention, which is nicely illustrated by the example of the Racist Soap Dispenser.

Of course, a machine cannot hold racist attitudes itself. If anyone was racist here, it would have been the developers, though in this case it was mainly the testing strategy that was amateurishly implemented, which may be attributed to a lack of diversity in the development team. However, this kind of language serves very well to draw attention to deeper problems. At the same time, such exaggerated headlines also risk triggering resistance, so that many readers may not even want to engage with the article.

The general overview of anthropomorphism and of the experts' approaches to anthropomorphizing points to both normative and technical challenges, even though the topic itself seems quite intuitive at first. Why, then, is it still so hotly discussed by great minds like Bryson or Coeckelbergh? As so often, the devil is in the details. The general definition of anthropomorphism is easy to grasp, which on the one hand is helpful and practical for inviting as many different people as possible into the discussion. On the other hand, this universality leads to multiple interpretations: different researchers examine different subsets of the concept, measure it with different questionnaires, or focus on different human-like characteristics, which makes it hard to compare results across studies and to generalize (Ruijten et al., 2019). So as much as the concept of humanizing makes sense even to non-experts, we must still be careful when making claims and when designing robots (or virtual representations of them) based on any of those claims.

Ruijten and colleagues (2019) give a compact overview of subsets of human-like characteristics that have been investigated, which shows how hard it is to compare one study to another. Some studies just look at appearance, some just at emotions, some have different robots, and some have no embodiments at all:

When watching videos in which Boston Dynamics repeatedly mistreats its robots, some viewers are greatly surprised at their own reaction. Displaying a high level of reflection, this viewer pretty much nailed it for many:

Damn they’re just robots, why do I feel bad for them…

Well, the answer to the viewer's question is now clear: it all comes down to anthropomorphism. And it is almost impossible not to anthropomorphize, even when every effort is made to avoid it. This holds whether we are talking about designers who try to humanize as little as possible or about users who, with mental effort, keep reminding themselves that they should not have any feelings toward a machine. But there is a difference between knowingly and willingly anthropomorphizing and being a victim of your own anthropomorphizing bias or of other people's manipulation strategies.

Anthropomorphism, and potentially its inverse, dehumanization, which is the topic of the next part of the Anthropomorphism Series, is one important variable in understanding how people make sense of non-human agents. Attributing human capacities to non-human agents, or intentionally humanizing technology to engage the user, has positive and negative consequences for the user, the designer, the flow (and success) of a human-machine interaction, and potentially further aspects we have not yet encountered.

Part two of the Anthropomorphism Series will deal with the Three-Factor Theory of Anthropomorphism by Epley et al. (2007). The authors identified three factors that explain the variability of anthropomorphizing: elicited agent knowledge, effectance motivation, and sociality motivation. The latter is rooted in the basic desire for social connection: people lacking human connection are more likely to anthropomorphize (Epley et al., 2007). So as long as the corona pandemic forces me to work all alone in my home office, I freely admit that I will bid farewell to Alexa before I unplug her, setting aside my knowledge that Alexa is just a machine and not a "she".

Coeckelbergh, M. (2021). Three Responses to Anthropomorphism in Social Robotics: Towards a Critical, Relational, and Hermeneutic Approach. International Journal of Social Robotics, 42(1), 143. https://doi.org/10.1007/s12369-021-00770-0

Epley, N., Waytz, A., & Cacioppo, J. T. (2007). On seeing human: A three-factor theory of anthropomorphism. Psychological Review, 114(4), 864-886. https://doi.org/10.1037/0033-295X.114.4.864

Hoff, K., & Bashir, M. (2015). Trust in Automation: Integrating Empirical Evidence on Factors That Influence Trust. Human Factors: The Journal of the Human Factors and Ergonomics Society, 57, 407-434. https://doi.org/10.1177/0018720814547570

Ruijten, P. A. M., Haans, A., Ham, J., & Midden, C. J. H. (2019). Perceived Human-Likeness of Social Robots: Testing the Rasch Model as a Method for Measuring Anthropomorphism. International Journal of Social Robotics, 11(3), 477-494. https://doi.org/10.1007/s12369-019-00516-z

The Society for the Study of Artificial Intelligence and Simulation of Behaviour Conference (AISB, 2021): The Impact of Anthropomorphism on Human Understanding of Intelligent Systems

Waytz, A., Epley, N., & Cacioppo, J. T. (2010). Social Cognition Unbound: Insights Into Anthropomorphism and Dehumanization. Current Directions in Psychological Science, 19(1), 58-62. https://doi.org/10.1177/0963721409359302
